Writing and running code in Jupyter Notebooks

Overview

Teaching: 30 min

Exercises: 0 minQuestions

How can I easily write, test and run Python programs?

Objectives

Create a Jupyter notebook.

Understand the difference between a Python script and a Jupyter notebook.

Create Markdown cells in a notebook.

Create and run Python cells in a notebook.

Use the Jupyter Notebook for editing and running Python.

Python scripts are text files containing lines of Python code which are run sequentially by a Python interpreter. While it’s common to write Python scripts using a text editor, which can be run from the command line, we are going to use the Jupyter Notebook for this course. This has several advantages:

- You can easily type, edit, and copy and paste blocks of code, which can then be run to produce output, either within the notebook or separately (as for a regular Python script).

- Tab complete allows you to easily access the names of things you are using and learn more about them.

- It allows you to annotate your code with links, different sized text, bullets, etc. to make it more accessible to you and your collaborators.

- It allows you to display figures next to the code that produces them to tell a complete story of the analysis.

- Each notebook contains one or more cells that contain code, text, or images.

Notebook files have the extension .ipynb to distinguish them from plain-text Python programs. Howeve, they can be exported as Python scripts that can be run from the command line.

How It’s Stored

- The notebook file is stored in a format called JSON.

- Just like a webpage, what’s saved looks different from what you see in your browser.

- But this format allows Jupyter to mix source code, text, and images, all in one file.

Code vs. Text

Jupyter mixes code and text in different types of blocks, called cells. We often use the term “code” to mean “the source code of software written in a language such as Python”. A “code cell” in a Notebook is a cell that contains software; a “text cell” is one that contains ordinary prose written for human beings.

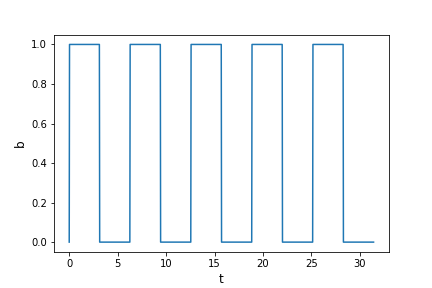

The Notebook has Command and Edit modes.

- If you press Esc and Return alternately, the outer border of your code cell will change from gray to blue.

- These are the Command (gray) and Edit (blue) modes of your notebook.

- Command mode allows you to edit notebook-level features, and Edit mode changes the content of cells.

- When in Command mode (esc/gray),

- The b key will make a new cell below the currently selected cell.

- The a key will make one above.

- The x key will delete the current cell.

- The z key will undo your last cell operation (which could be a deletion, creation, etc).

- All actions can be done using the menus, but there are lots of keyboard shortcuts to speed things up.

Command Vs. Edit

In the Jupyter notebook page are you currently in Command or Edit mode?

Switch between the modes. Use the shortcuts to generate a new cell. Use the shortcuts to delete a cell. Use the shortcuts to undo the last cell operation you performed.Solution

Command mode has a grey border and Edit mode has a blue border. Use Esc and Return to switch between modes. You need to be in Command mode (Press Esc if your cell is blue). Type b or a. You need to be in Command mode (Press Esc if your cell is blue). Type x. You need to be in Command mode (Press Esc if your cell is blue). Type z.

Use the keyboard and mouse to select and edit cells.

- Pressing the Return key turns the border blue and engages Edit mode, which allows you to type within the cell.

- Because we want to be able to write many lines of code in a single cell, pressing the Return key when in Edit mode (blue) moves the cursor to the next line in the cell just like in a text editor.

- We need some other way to tell the Notebook we want to run what’s in the cell.

- Pressing Shift+Return together will execute the contents of the cell.

- Notice that the Return and Shift keys on the right of the keyboard are right next to each other.

The Notebook will turn Markdown into formatted text.

- Notebooks can also render Markdown.

- A simple plain-text format for writing lists, links, and other things that might go into a web page.

- Equivalently, a subset of HTML that looks like what you’d send in an old-fashioned email.

- Turn the current cell into a Markdown cell by entering the Command mode (Esc/gray) and press the M key.

In [ ]:will disappear to show it is no longer a code cell and you will be able to write in Markdown.- Markdown cells have to be executed similar to Python cells with Shift+Return.

- Turn the current cell into a Code cell by entering the Command mode (Esc/gray) and press the y key.

Markdown does most of what HTML does.

* Use asterisks

* to create

* bullet lists.

- Use asterisks

- to create

- bullet lists.

1. Use numbers

1. to create

1. numbered lists.

- Use numbers

- to create

- numbered lists.

* You can use indents

* To create sublists

* of the same type

* Or sublists

1. Of different

1. types

- You can use indents

- To create sublists

- of the same type

- Or sublists

- Of different

- types

# A Level-1 Heading

A Level-1 Heading

## A Level-2 Heading (etc.)

A Level-2 Heading (etc.)

Line breaks

don't matter.

But blank lines

create new paragraphs.

Line breaks don’t matter.

But blank lines create new paragraphs.

[Create links](http://software-carpentry.org) with `[...](...)`.

Or use [named links][data_carpentry].

[data_carpentry]: http://datacarpentry.org

Create links with [...](...).

Or use named links.

Creating Lists in Markdown

Create a nested list in a Markdown cell in a notebook that looks like this:

- Get funding.

- Do work.

- Design experiment.

- Collect data.

- Analyze.

- Write up.

- Publish.

Solution

This challenge integrates both the numbered list and bullet list. Note that the bullet list is indented 2 spaces so that it is inline with the items of the numbered list.

1. Get funding. 2. Do work. * Design experiment. * Collect data. * Analyze. 3. Write up. 4. Publish.

More Math

What is displayed when a Python cell in a notebook that contains several calculations is executed? For example, what happens when this cell is executed?

7 * 3 2 + 1Solution

Python returns the output of the last calculation.

3

Change an Existing Cell from Code to Markdown

What happens if you write some Python in a code cell and then you switch it to a Markdown cell? For example, put the following in a code cell:

x = 6 * 7 + 12 print(x)And then run it with Shift+Return to be sure that it works as a code cell. Now go back to the cell and use Esc then m to switch the cell to Markdown and “run” it with Shift+Return. What happened and how might this be useful?

Solution

The Python code gets treated like Markdown text. The lines appear as if they are part of one contiguous paragraph. This could be useful to temporarily turn on and off cells in notebooks that get used for multiple purposes.

x = 6 * 7 + 12 print(x)

Equations

Standard Markdown (such as we’re using for these notes) won’t render equations, but the Notebook will. Create a new Markdown cell and enter the following:

$\sum_{i=1}^{N} 2^{-i} \approx 1$(It’s probably easier to copy and paste.) What does it display? What do you think the underscore,

_, circumflex,^, and dollar sign,$, do?Solution

The notebook shows the equation as it would be rendered from LaTeX equation syntax. The dollar sign,

$, is used to tell Markdown that the text in between is a LaTeX equation. If you’re not familiar with LaTeX, underscore,_, is used for subscripts and circumflex,^, is used for superscripts. A pair of curly braces,{and}, is used to group text together so that the statementi=1becomes the subscript andNbecomes the superscript. Similarly,-iis in curly braces to make the whole statement the superscript for2.\sumand\approxare LaTeX commands for “sum over” and “approximate” symbols.

Keeping track

It’s important to bear in mind that the notebook remembers the sequence that you run the cells. So even if cells follow a particular order in the notebook, you can run them out of sequence as long as the code allows it (e.g. required variables have been defined or calculated in one of the cells that has been run). The code will behave just as if it is written in the order that the cells are run. This can lead to confusing behaviour and errors if you run one or more cells out of sequence or skip over cells.

If you have run cells out of sequence and want to start from the beginning again, you should Restart the kernel and Clear Output (see below).

Getting stuck: restarting the kernel

Sometimes you may find that the notebook stops running code, or that code you wrote takes far too long to run, so you want to try another approach. In these cases you can interrupt and/or restart the notebook kernel (the computational engine that runs the code). You can do this via the menu bar at the top of the notebook. Interrupt will interrupt the running of the code from the cell that is being run at the time and prevent any new cells from being run. Restart is then required to start running code again. Restart and Clear Output will also clear the memory of all outputs including variables stored in memory and any loaded modules. This can be useful if you are testing the code in a module and need to reload the new version to test again.

Saving and Quitting

When you want to quit a notebook, be sure to save it first using Save and Checkpoint in the File menu. In fact it is wise to save the notebook regularly if you are adding to it or making changes. It is also good practice to exit the notebook cleanly so it doesn’t hang, using Close and Halt in the File menu, before closing the notebook browser tab.

Key Points

Use the Jupyter Notebook for editing and running Python.

The Notebook has Command and Edit modes.

Use the keyboard and mouse to select and edit cells.

The Notebook will turn Markdown into pretty-printed documentation.

Markdown does most of what HTML does.

Keep track of the sequence in which you run cells, and use kernel operations such as Restart and Clear Output to maintain control.

Python Fundamentals

Overview

Teaching: 20 min

Exercises: 10 minQuestions

What basic data types can I work with in Python?

How can I create a new variable in Python?

Can I change the value associated with a variable after I create it?

Objectives

Assign values to variables.

Variables

Any Python interpreter can be used as a calculator:

3 + 5 * 4

23

This is great but not very interesting.

To do anything useful with data, we need to assign its value to a variable.

In Python, we can assign a value to a

variable, using the equals sign =.

For example, to assign value 60 to a variable weight_kg, we would execute:

weight_kg = 60

From now on, whenever we use weight_kg, Python will substitute the value we assigned to

it. In layman’s terms, a variable is a name for a value.

In Python, variable names:

- can include letters, digits, and underscores

- cannot start with a digit

- are case sensitive.

This means that, for example:

weight0is a valid variable name, whereas0weightis notweightandWeightare different variables

Introducing types of data

Python knows various types of data. Three common ones are:

- integer numbers

- floating point numbers, and

- strings.

In the example above, variable weight_kg has an integer value of 60.

To create a variable with a floating point value, we can execute:

weight_kg = 60.0

And to create a string, we add single or double quotes around some text, for example:

weight_kg_text = 'weight in kilograms:'

Using Variables in Python

To display the value of a variable to the screen in Python, we can use the print function:

print(weight_kg)

60.0

We can display multiple things at once using only one print command:

print(weight_kg_text, weight_kg)

weight in kilograms: 60.0

Moreover, we can do arithmetic with variables right inside the print function:

print('weight in pounds:', 2.2 * weight_kg)

weight in pounds: 132.0

The above command, however, did not change the value of weight_kg:

print(weight_kg)

60.0

To change the value of the weight_kg variable, we have to

assign weight_kg a new value using the equals = sign:

weight_kg = 65.0

print('weight in kilograms is now:', weight_kg)

weight in kilograms is now: 65.0

Variables as Sticky Notes

A variable is analogous to a sticky note with a name written on it: assigning a value to a variable is like putting that sticky note on a particular value.

This means that assigning a value to one variable does not change values of other variables. For example, let’s store the subject’s weight in pounds in its own variable:

# There are 2.2 pounds per kilogram weight_lb = 2.2 * weight_kg print(weight_kg_text, weight_kg, 'and in pounds:', weight_lb)weight in kilograms: 65.0 and in pounds: 143.0

Let’s now change

weight_kg:weight_kg = 100.0 print('weight in kilograms is now:', weight_kg, 'and weight in pounds is still:', weight_lb)weight in kilograms is now: 100.0 and weight in pounds is still: 143.0

Since

weight_lbdoesn’t “remember” where its value comes from, it is not updated when we changeweight_kg.

Use meaningful variable names.

- Python doesn’t care what you call variables as long as they obey the rules (alphanumeric characters and the underscore).

var1 = 42

ewr_422_yY = 'Ahmed'

print(ewr_422_yY, 'is', var1, 'years old')

- Use meaningful variable names to help other people understand what the program does.

- The most important “other person” is your future self.

- Python itself proposes a standard style including variable naming style through one of its first Python Enhancement Proposals (PEP), PEP8.

Check Your Understanding

What values do the variables

massandagehave after each statement in the following program? Test your answers by executing the commands.mass = 47.5 age = 122 mass = mass * 2.0 age = age - 20 print(mass, age)Solution

95.0 102

Sorting Out References

What does the following program print out?

first, second = 'Grace', 'Hopper' third, fourth = second, first print(third, fourth)Solution

Hopper Grace

Key Points

Basic data types in Python include integers, strings, and floating-point numbers.

Use

variable = valueto assign a value to a variable in order to record it in memory.Variables are created on demand whenever a value is assigned to them.

Use

print(something)to display the value ofsomething.

Data Types and Type Conversion

Overview

Teaching: 20 min

Exercises: 10 minQuestions

What kinds of data do programs store?

How can I convert one type to another?

Objectives

Explain key differences between integers and floating point numbers.

Explain key differences between numbers and character strings.

Perform some operations using strings.

Use built-in functions to convert between integers, floating point numbers, and strings.

Every value has a type.

- Every value in a program has a specific type.

- Integer (

int): represents positive or negative whole numbers like 3 or -512. - Floating point number (

float): represents real numbers like 3.14159 or -2.5. - Character string (usually called “string”,

str): text.- Written in either single quotes or double quotes (as long as they match).

- The quote marks aren’t printed when the string is displayed.

Use the built-in function type to find the type of a value.

- Use the built-in function

typeto find out what type a value has. - Works on variables as well.

- But remember: the value has the type — the variable is just a label.

print(type(52))

<class 'int'>

fitness = 'average'

print(type(fitness))

<class 'str'>

Types control what operations (or methods) can be performed on a given value.

- A value’s type determines what the program can do to it.

print(5 - 3)

2

print('hello' - 'h')

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-2-67f5626a1e07> in <module>()

----> 1 print('hello' - 'h')

TypeError: unsupported operand type(s) for -: 'str' and 'str'

You can use the “+” and “*” operators on strings.

- “Adding” character strings concatenates them.

full_name = 'Ahmed' + ' ' + 'Walsh'

print(full_name)

Ahmed Walsh

- Multiplying a character string by an integer N creates a new string that consists of that character string repeated N times.

- Since multiplication is repeated addition.

separator = '=' * 10

print(separator)

==========

Strings have a length (but numbers don’t).

- The built-in function

lencounts the number of characters in a string.

print(len(full_name))

11

- But numbers don’t have a length (not even zero).

print(len(52))

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-3-f769e8e8097d> in <module>()

----> 1 print(len(52))

TypeError: object of type 'int' has no len()

We must convert numbers to strings or vice versa when operating on them.

- Cannot add numbers and strings.

print(1 + '2')

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-4-fe4f54a023c6> in <module>()

----> 1 print(1 + '2')

TypeError: unsupported operand type(s) for +: 'int' and 'str'

- Not allowed because it’s ambiguous: should

1 + '2'be3or'12'? - Some types can be converted to other types by using the type name as a function.

print(1 + int('2'))

print(str(1) + '2')

3

12

More operations on strings

- We can operate on strings with specialized functions (there are many more):

a = 'space alpacas'

a.title()

a.upper()

a.startswith('b')

a.isdigit()

'Space Alpacas'

'SPACE ALPACAS'

False

False

Inserting variables into strings

- The older, clunkier approach is to use %-formatting:

name = 'Anna'

age = 24

'Hello, %s. You are %s.' % (name, age)"

'Hello Anna. You are 24.'

- The

%sterms tell the interpreter to insert - as strings - the values in brackets (variables in this case) after the%following the main string. - %-formatting becomes hard to follow when many variables need to be inserted.

- We can use f-strings for a more elegant approach:

name = 'Anna'

age = 24

f'Hello, {name}. You are {age}.'

'Hello Anna. You are 24.'

Escape sequences

- For including a newline or tab in a string we can use the escape sequences,

\nand\trespectively.

We can mix integers and floats freely in operations.

- Integers and floating-point numbers can be mixed in arithmetic.

- Python 3 automatically converts integers to floats as needed. (Integer division in Python 2 will return an integer, the floor of the division.)

print('half is', 1 / 2.0)

print('three squared is', 3.0 ** 2)

half is 0.5

three squared is 9.0

Variables only change value when something is assigned to them.

- If we make one cell in a spreadsheet depend on another, and update the latter, the former updates automatically.

- This does not happen in programming languages.

first = 1

second = 5 * first

first = 2

print('first is', first, 'and second is', second)

first is 2 and second is 5

- The computer reads the value of

firstwhen doing the multiplication, creates a new value, and assigns it tosecond. - After that,

seconddoes not remember where it came from.

Fractions

What type of value is 3.4? How can you find out?

Solution

It is a floating-point number (often abbreviated “float”).

print(type(3.4))<class 'float'>

Automatic Type Conversion

What type of value is 3.25 + 4?

Solution

It is a float: integers are automatically converted to floats as necessary.

result = 3.25 + 4 print(result, 'is', type(result))7.25 is <class 'float'>

Choose a Type

What type of value (integer, floating point number, or character string) would you use to represent each of the following? Try to come up with more than one good answer for each problem. For example, in # 1, when would counting days with a floating point variable make more sense than using an integer?

- Number of days since the start of the year.

- Time elapsed from the start of the year until now in days.

- Serial number of a piece of lab equipment.

- A lab specimen’s age

- Current population of a city.

- Average population of a city over time.

Solution

The answers to the questions are:

- Integer, since the number of days would lie between 1 and 365.

- Floating point, since fractional days are required

- Character string if serial number contains letters and numbers, otherwise integer if the serial number consists only of numerals

- This will vary! How do you define a specimen’s age? whole days since collection (integer)? date and time (string)?

- Choose floating point to represent population as large aggregates (eg millions), or integer to represent population in units of individuals.

- Floating point number, since an average is likely to have a fractional part.

Division Types

In Python 3, the

//operator performs integer (whole-number) floor division, the/operator performs floating-point division, and the ‘%’ (or modulo) operator calculates and returns the remainder from integer division:print('5 // 3:', 5//3) print('5 / 3:', 5/3) print('5 % 3:', 5%3)5 // 3: 1 5 / 3: 1.6666666666666667 5 % 3: 2However in Python2 (and other languages), the

/operator between two integer types perform a floor (//) division. To perform a float division, we have to convert one of the integers to float.print('5 // 3:', 1) print('5 / 3:', 1 ) print('5 / float(3):', 1.6666667 ) print('float(5) / 3:', 1.6666667 ) print('float(5 / 3):', 1.0 ) print('5 % 3:', 2)If

num_subjectsis the number of subjects taking part in a study, andnum_per_surveyis the number that can take part in a single survey, write an expression that calculates the number of surveys needed to reach everyone once.Solution

We want the minimum number of surveys that reaches everyone once, which is the rounded up value of

num_subjects / num_per_survey. This is equivalent to performing an integer division with//and adding 1.num_subjects = 600 num_per_survey = 42 num_surveys = num_subjects // num_per_survey + 1 print(num_subjects, 'subjects,', num_per_survey, 'per survey:', num_surveys)600 subjects, 42 per survey: 15

Strings to Numbers

Where reasonable,

float()will convert a string to a floating point number, andint()will convert a floating point number to an integer:print("string to float:", float("3.4")) print("float to int:", int(3.4))string to float: 3.4 float to int: 3If the conversion doesn’t make sense, however, an error message will occur

print("string to float:", float("Hello world!"))--------------------------------------------------------------------------- ValueError Traceback (most recent call last) <ipython-input-5-df3b790bf0a2> in <module>() ----> 1 print("string to float:", float("Hello world!")) ValueError: could not convert string to float: 'Hello world!'Given this information, what do you expect the following program to do?

What does it actually do?

Why do you think it does that?

print("fractional string to int:", int("3.4"))Solution

What do you expect this program to do? It would not be so unreasonable to expect the Python 3

intcommand to convert the string “3.4” to 3.4 and an additional type conversion to 3. After all, Python 3 performs a lot of other magic - isn’t that part of its charm?However, Python 3 throws an error. Why? To be consistent, possibly. If you ask Python to perform two consecutive typecasts, you must convert it explicitly in code.

int("3.4") int(float("3.4"))In [2]: int("3.4") --------------------------------------------------------------------------- ValueError Traceback (most recent call last) <ipython-input-2-ec6729dfccdc> in <module>() ----> 1 int("3.4") ValueError: invalid literal for int() with base 10: '3.4' 3

Arithmetic with Different Types

Which of the following will return the floating point number

2.0? Note: there may be more than one right answer.first = 1.0 second = "1" third = "1.1"

first + float(second)float(second) + float(third)first + int(third)first + int(float(third))int(first) + int(float(third))2.0 * secondSolution

Answer: 1 and 4

Complex Numbers

Python provides complex numbers, which are written as

1.0+2.0j. Ifvalis a complex number, its real and imaginary parts can be accessed using dot notation asval.realandval.imag.complex = 6 + 2j print(complex.real) print(complex.imag)6.0 2.0

- Why do you think Python uses

jinstead ofifor the imaginary part?- What do you expect

1+2j + 3to produce?- What do you expect

4jto be? What about4 jor4 + j?Solution

- Standard mathematics treatments typically use

ito denote an imaginary number. However, from media reports it was an early convention established from electrical engineering that now presents a technically expensive area to change. Stack Overflow provides additional explanation and discussion.(4+2j)4j,Syntax Error: invalid syntax, in this case j is considered a variable and this depends on if j is defined and if so, its assigned value

Key Points

Every value has a type.

Use the built-in function

typeto find the type of a value.Types control what operations can be done on values.

Strings can be added and multiplied.

Strings have a length (but numbers don’t).

Strings can be elegantly built up from variables by using f-string formatting.

Must convert numbers to strings or vice versa when operating on them.

Can mix integers and floats freely in operations.

Variables only change value when something is assigned to them.

Libraries

Overview

Teaching: 10 min

Exercises: 10 minQuestions

How can I use software that other people have written?

How can I find out what that software does?

Objectives

Explain what software libraries are and why programmers create and use them.

Write programs that import and use modules from Python’s standard library.

Find and read documentation for the standard library interactively (in the interpreter) and online.

Most of the power of a programming language is in its libraries.

- A library is a collection of files (called modules) that contains

functions for use by other programs.

- May also contain data values (e.g., numerical constants) and other things.

- Library’s contents are supposed to be related, but there’s no way to enforce that.

- The Python standard library is an extensive suite of modules that comes with Python itself.

- Many additional libraries are available from PyPI (the Python Package Index).

- We will see later how to write new libraries.

Libraries and modules

A library is a collection of modules, but the terms are often used interchangeably, especially since many libraries only consist of a single module, so don’t worry if you mix them.

A program must import a library module before using it.

- Use

importto load a library module into a program’s memory. - Then refer to things from the module as

module_name.thing_name.- Python uses

.to mean “part of”.

- Python uses

- Using

math, one of the modules in the standard library:

import math

print('pi is', math.pi)

print('cos(pi) is', math.cos(math.pi))

pi is 3.141592653589793

cos(pi) is -1.0

- Have to refer to each item with the module’s name.

math.cos(pi)won’t work: the reference topidoesn’t somehow “inherit” the function’s reference tomath.

Use help to learn about the contents of a library module.

- Works just like help for a function.

help(math)

Help on module math:

NAME

math

MODULE REFERENCE

http://docs.python.org/3/library/math

The following documentation is automatically generated from the Python

source files. It may be incomplete, incorrect or include features that

are considered implementation detail and may vary between Python

implementations. When in doubt, consult the module reference at the

location listed above.

DESCRIPTION

This module is always available. It provides access to the

mathematical functions defined by the C standard.

FUNCTIONS

acos(x, /)

Return the arc cosine (measured in radians) of x.

⋮ ⋮ ⋮

Import specific items from a library module to shorten programs.

- Use

from ... import ...to load only specific items from a library module. - Then refer to them directly without library name as prefix.

from math import cos, pi

print('cos(pi) is', cos(pi))

cos(pi) is -1.0

However, you must be careful here, because of name clashes with functions imported from other libraries with the same name, e.g. numpy.cos. To avoid this problem, we recommend avoiding importing specific items - use aliases to shorten instead.

Create an alias for a library module when importing it to shorten programs.

- Use

import ... as ...to give a library a short alias while importing it. - Then refer to items in the library using that shortened name.

import math as m

print('cos(pi) is', m.cos(m.pi))

cos(pi) is -1.0

- Commonly used for libraries that are frequently used or have long names.

- E.g., the

matplotlibplotting library is often aliased asmpl.

- E.g., the

- But can make programs harder to understand, since readers must learn your program’s aliases.

Exploring the Math Module

- What function from the

mathmodule can you use to calculate a square root without usingsqrt?- Since the library contains this function, why does

sqrtexist?Solution

- Using

help(math)we see that we’ve gotpow(x,y)in addition tosqrt(x), so we could usepow(x, 0.5)to find a square root.The

sqrt(x)function is arguably more readable thanpow(x, 0.5)when implementing equations. Readability is a cornerstone of good programming, so it makes sense to provide a special function for this specific common case.Also, the design of Python’s

mathlibrary has its origin in the C standard, which includes bothsqrt(x)andpow(x,y), so a little bit of the history of programming is showing in Python’s function names.

Locating the Right Module

You want to select a random character from a string:

bases = 'ACTTGCTTGAC'

- Which standard library module could help you?

- Which function would you select from that module? Are there alternatives?

- Try to write a program that uses the function.

Solution

The random module seems like it could help you.

The string has 11 characters, each having a positional index from 0 to 10. You could use

random.randrangefunction (or the aliasrandom.randintif you find that easier to remember) to get a random integer between 0 and 10, and then pick out the character at that position:from random import randrange random_index = randrange(len(bases)) print(bases[random_index])or more compactly:

from random import randrange print(bases[randrange(len(bases))])Perhaps you found the

random.samplefunction? It allows for slightly less typing:from random import sample print(sample(bases, 1)[0])Note that this function returns a list of values. We will learn about lists in episode 11.

There’s also other functions you could use, but with more convoluted code as a result.

Jigsaw Puzzle (Parson’s Problem) Programming Example

Rearrange the following statements so that a random DNA base is printed and its index in the string. Not all statements may be needed. Feel free to use/add intermediate variables.

bases="ACTTGCTTGAC" import math import random ___ = random.randrange(n_bases) ___ = len(bases) print("random base ", bases[___], "base index", ___)Solution

import math import random bases = "ACTTGCTTGAC" n_bases = len(bases) idx = random.randrange(n_bases) print("random base", bases[idx], "base index", idx)

When Is Help Available?

When a colleague of yours types

help(math), Python reports an error:NameError: name 'math' is not definedWhat has your colleague forgotten to do?

Solution

Importing the math module (

import math)

Importing With Aliases

- Fill in the blanks so that the program below prints

90.0.- Rewrite the program so that it uses

importwithoutas.- Which form do you find easier to read?

import math as m angle = ____.degrees(____.pi / 2) print(____)Solution

import math as m angle = m.degrees(m.pi / 2) print(angle)can be written as

import math angle = math.degrees(math.pi / 2) print(angle)Since you just wrote the code and are familiar with it, you might actually find the first version easier to read. But when trying to read a huge piece of code written by someone else, or when getting back to your own huge piece of code after several months, non-abbreviated names are often easier, except where there are clear abbreviation conventions.

There Are Many Ways To Import Libraries!

Match the following print statements with the appropriate library calls.

Print commands:

print("sin(pi/2) =", sin(pi/2))print("sin(pi/2) =", m.sin(m.pi/2))print("sin(pi/2) =", math.sin(math.pi/2))Library calls:

from math import sin, piimport mathimport math as mfrom math import *Solution

- Library calls 1 and 4. In order to directly refer to

sinandpiwithout the library name as prefix, you need to use thefrom ... import ...statement. Whereas library call 1 specifically imports the two functionssinandpi, library call 4 imports all functions in themathmodule.- Library call 3. Here

sinandpiare referred to with a shortened library nameminstead ofmath. Library call 3 does exactly that using theimport ... as ...syntax - it creates an alias formathin the form of the shortened namem.- Library call 2. Here

sinandpiare referred to with the regular library namemath, so the regularimport ...call suffices.

Importing Specific Items

- Fill in the blanks so that the program below prints

90.0.- Do you find this version easier to read than preceding ones?

- Why wouldn’t programmers always use this form of

import?____ math import ____, ____ angle = degrees(pi / 2) print(angle)Solution

from math import degrees, pi angle = degrees(pi / 2) print(angle)Most likely you find this version easier to read since it’s less dense. The main reason not to use this form of import is to avoid name clashes. For instance, you wouldn’t import

degreesthis way if you also wanted to use the namedegreesfor a variable or function of your own. Or if you were to also import a function nameddegreesfrom another library.

Reading Error Messages

- Read the code below and try to identify what the errors are without running it.

- Run the code, and read the error message. What type of error is it?

from math import log log(0)Solution

- The logarithm of

xis only defined forx > 0, so 0 is outside the domain of the function.- You get an error of type “ValueError”, indicating that the function received an inappropriate argument value. The additional message “math domain error” makes it clearer what the problem is.

Key Points

Most of the power of a programming language is in its libraries.

A program must import a library module in order to use it.

Use

helpto learn about the contents of a library module.Import specific items from a library to shorten programs.

Create an alias for a library when importing it to shorten programs.

Analyzing Patient Data

Overview

Teaching: 40 min

Exercises: 20 minQuestions

How can I process tabular data files in Python?

Objectives

Explain what a library is and what libraries are used for.

Import a Python library and use the functions it contains.

Read tabular data from a file into a program.

Select individual values and subsections from data.

Perform operations on arrays of data.

Words are useful, but what’s more useful are the sentences and stories we build with them. Similarly, while a lot of powerful, general tools are built into Python, specialized tools built up from these basic units live in libraries that can be called upon when needed.

Loading data into Python

To begin processing inflammation data, we need to load it into Python. We can do that using a library called NumPy, which stands for Numerical Python. In general, you should use this library when you want to do fancy things with lots of numbers, especially if you have matrices or arrays. To tell Python that we’d like to start using NumPy, we need to import it:

import numpy

Importing a library is like getting a piece of lab equipment out of a storage locker and setting it up on the bench. Libraries provide additional functionality to the basic Python package, much like a new piece of equipment adds functionality to a lab space. Just like in the lab, importing too many libraries can sometimes complicate and slow down your programs - so we only import what we need for each program.

Once we’ve imported the library, we can ask the library to read our data file for us:

numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')

array([[ 0., 0., 1., ..., 3., 0., 0.],

[ 0., 1., 2., ..., 1., 0., 1.],

[ 0., 1., 1., ..., 2., 1., 1.],

...,

[ 0., 1., 1., ..., 1., 1., 1.],

[ 0., 0., 0., ..., 0., 2., 0.],

[ 0., 0., 1., ..., 1., 1., 0.]])

The expression numpy.loadtxt(...) is a function call

that asks Python to run the function loadtxt which

belongs to the numpy library. This dotted notation

is used everywhere in Python: the thing that appears before the dot contains the thing that

appears after.

As an example, John Smith is the John that belongs to the Smith family.

We could use the dot notation to write his name smith.john,

just as loadtxt is a function that belongs to the numpy library.

numpy.loadtxt has two parameters: the name of the file

we want to read and the delimiter that separates values on

a line. These both need to be character strings (or strings

for short), so we put them in quotes.

Since we haven’t told it to do anything else with the function’s output,

the notebook displays it.

In this case,

that output is the data we just loaded.

By default,

only a few rows and columns are shown

(with ... to omit elements when displaying big arrays).

Note that, to save space when displaying NumPy arrays, Python does not show us trailing zeros, so 1.0 becomes 1..

Importing libraries with shortcuts

In this lesson we use the

import numpysyntax to import NumPy. However, shortcuts such asimport numpy as npare frequently used. Importing NumPy this way means that after the inital import, rather than writingnumpy.loadtxt(...), you can now writenp.loadtxt(...). Some people prefer this as it is quicker to type and results in shorter lines of code - especially for libraries with long names! You will frequently see Python code online using a NumPy function withnp, and it’s because they’ve used this shortcut. It makes no difference which approach you choose to take, but you must be consistent as if you useimport numpy as npthennumpy.loadtxt(...)will not work, and you must usenp.loadtxt(...)instead. Because of this, when working with other people it is important you agree on how libraries are imported.

Our call to numpy.loadtxt read our file

but didn’t save the data in memory.

To do that,

we need to assign the array to a variable. In a similar manner to how we assign a single

value to a variable, we can also assign an array of values to a variable using the same syntax.

Let’s re-run numpy.loadtxt and save the returned data:

data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')

This statement doesn’t produce any output because we’ve assigned the output to the variable data.

If we want to check that the data have been loaded,

we can print the variable’s value:

print(data)

[[ 0. 0. 1. ..., 3. 0. 0.]

[ 0. 1. 2. ..., 1. 0. 1.]

[ 0. 1. 1. ..., 2. 1. 1.]

...,

[ 0. 1. 1. ..., 1. 1. 1.]

[ 0. 0. 0. ..., 0. 2. 0.]

[ 0. 0. 1. ..., 1. 1. 0.]]

Now that the data are in memory,

we can manipulate them.

First,

let’s ask what type of thing data refers to:

print(type(data))

<class 'numpy.ndarray'>

The output tells us that data currently refers to

an N-dimensional array, the functionality for which is provided by the NumPy library.

These data correspond to arthritis patients’ inflammation.

The rows are the individual patients, and the columns

are their daily inflammation measurements.

Data Type

A Numpy array contains one or more elements of the same type. The

typefunction will only tell you that a variable is a NumPy array but won’t tell you the type of thing inside the array. We can find out the type of the data contained in the NumPy array.print(data.dtype)float64This tells us that the NumPy array’s elements are floating-point numbers.

With the following command, we can see the array’s shape:

print(data.shape)

(60, 40)

The output tells us that the data array variable contains 60 rows and 40 columns. When we

created the variable data to store our arthritis data, we did not only create the array; we also

created information about the array, called members or

attributes. This extra information describes data in the same way an adjective describes a noun.

data.shape is an attribute of data which describes the dimensions of data. We use the same

dotted notation for the attributes of variables that we use for the functions in libraries because

they have the same part-and-whole relationship.

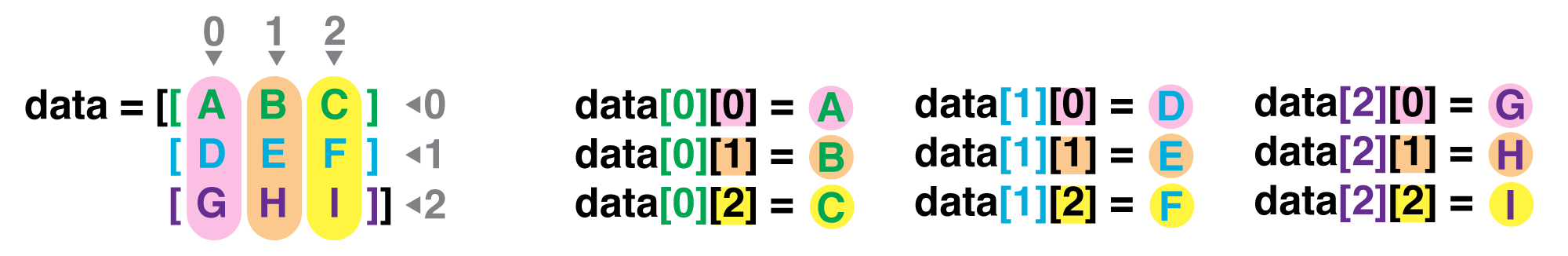

If we want to get a single number from the array, we must provide an index in square brackets after the variable name, just as we do in math when referring to an element of a matrix. Our inflammation data has two dimensions, so we will need to use two indices to refer to one specific value:

print('first value in data:', data[0, 0])

first value in data: 0.0

print('middle value in data:', data[30, 20])

middle value in data: 13.0

The expression data[30, 20] accesses the element at row 30, column 20. While this expression may

not surprise you,

data[0, 0] might.

Programming languages like Fortran, MATLAB and R start counting at 1

because that’s what human beings have done for thousands of years.

Languages in the C family (including C++, Java, Perl, and Python) count from 0

because it represents an offset from the first value in the array (the second

value is offset by one index from the first value). This is closer to the way

that computers represent arrays (if you are interested in the historical

reasons behind counting indices from zero, you can read

Mike Hoye’s blog post).

As a result,

if we have an M×N array in Python,

its indices go from 0 to M-1 on the first axis

and 0 to N-1 on the second.

It takes a bit of getting used to,

but one way to remember the rule is that

the index is how many steps we have to take from the start to get the item we want.

In the Corner

What may also surprise you is that when Python displays an array, it shows the element with index

[0, 0]in the upper left corner rather than the lower left. This is consistent with the way mathematicians draw matrices but different from the Cartesian coordinates. The indices are (row, column) instead of (column, row) for the same reason, which can be confusing when plotting data.

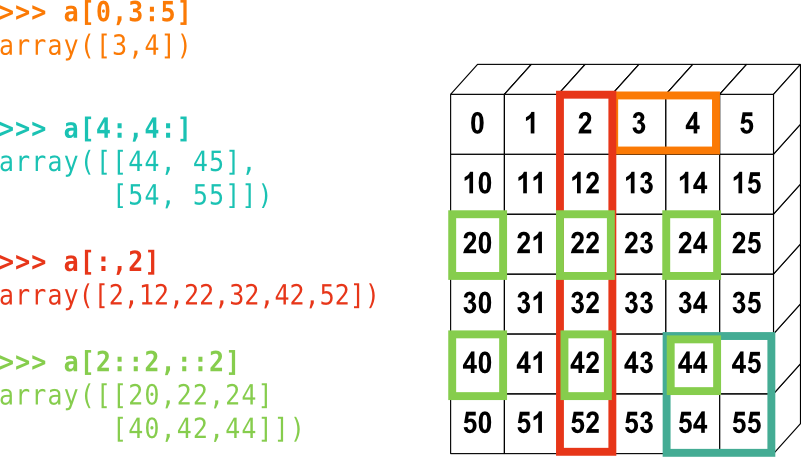

Slicing data

An index like [30, 20] selects a single element of an array,

but we can select whole sections as well.

For example,

we can select the first ten days (columns) of values

for the first four patients (rows) like this:

print(data[0:4, 0:10])

[[ 0. 0. 1. 3. 1. 2. 4. 7. 8. 3.]

[ 0. 1. 2. 1. 2. 1. 3. 2. 2. 6.]

[ 0. 1. 1. 3. 3. 2. 6. 2. 5. 9.]

[ 0. 0. 2. 0. 4. 2. 2. 1. 6. 7.]]

The slice 0:4 means, “Start at index 0 and go up to, but not

including, index 4”. Again, the up-to-but-not-including takes a bit of getting used to, but the

rule is that the difference between the upper and lower bounds is the number of values in the slice.

We don’t have to start slices at 0:

print(data[5:10, 0:10])

[[ 0. 0. 1. 2. 2. 4. 2. 1. 6. 4.]

[ 0. 0. 2. 2. 4. 2. 2. 5. 5. 8.]

[ 0. 0. 1. 2. 3. 1. 2. 3. 5. 3.]

[ 0. 0. 0. 3. 1. 5. 6. 5. 5. 8.]

[ 0. 1. 1. 2. 1. 3. 5. 3. 5. 8.]]

We also don’t have to include the upper and lower bound on the slice. If we don’t include the lower bound, Python uses 0 by default; if we don’t include the upper, the slice runs to the end of the axis, and if we don’t include either (i.e., if we use ‘:’ on its own), the slice includes everything:

small = data[:3, 36:]

print('small is:')

print(small)

The above example selects rows 0 through 2 and columns 36 through to the end of the array.

small is:

[[ 2. 3. 0. 0.]

[ 1. 1. 0. 1.]

[ 2. 2. 1. 1.]]

Analyzing data

NumPy has several useful functions that take an array as input to perform operations on its values.

If we want to find the average inflammation for all patients on

all days, for example, we can ask NumPy to compute data’s mean value:

print(numpy.mean(data))

6.14875

mean is a function that takes

an array as an argument.

Not All Functions Have Input

Generally, a function uses inputs to produce outputs. However, some functions produce outputs without needing any input. For example, checking the current time doesn’t require any input.

import time print(time.ctime())Sat Mar 26 13:07:33 2016For functions that don’t take in any arguments, we still need parentheses (

()) to tell Python to go and do something for us.

Let’s use three other NumPy functions to get some descriptive values about the dataset. We’ll also use multiple assignment, a convenient Python feature that will enable us to do this all in one line.

maxval, minval, stdval = numpy.max(data), numpy.min(data), numpy.std(data)

print('maximum inflammation:', maxval)

print('minimum inflammation:', minval)

print('standard deviation:', stdval)

Here we’ve assigned the return value from numpy.max(data) to the variable maxval, the value

from numpy.min(data) to minval, and so on.

maximum inflammation: 20.0

minimum inflammation: 0.0

standard deviation: 4.61383319712

Mystery Functions in IPython

How did we know what functions NumPy has and how to use them? If you are working in IPython or in a Jupyter Notebook, there is an easy way to find out. If you type the name of something followed by a dot, then you can use tab completion (e.g. type

numpy.and then press Tab) to see a list of all functions and attributes that you can use. After selecting one, you can also add a question mark (e.g.numpy.cumprod?), and IPython will return an explanation of the method! This is the same as doinghelp(numpy.cumprod). Similarly, if you are using the “plain vanilla” Python interpreter, you can typenumpy.and press the Tab key twice for a listing of what is available. You can then use thehelp()function to see an explanation of the function you’re interested in, for example:help(numpy.cumprod).

When analyzing data, though, we often want to look at variations in statistical values, such as the maximum inflammation per patient or the average inflammation per day. One way to do this is to create a new temporary array of the data we want, then ask it to do the calculation:

patient_0 = data[0, :] # 0 on the first axis (rows), everything on the second (columns)

print('maximum inflammation for patient 0:', numpy.max(patient_0))

maximum inflammation for patient 0: 18.0

Everything in a line of code following the ‘#’ symbol is a comment that is ignored by Python. Comments allow programmers to leave explanatory notes for other programmers or their future selves.

We don’t actually need to store the row in a variable of its own. Instead, we can combine the selection and the function call:

print('maximum inflammation for patient 2:', numpy.max(data[2, :]))

maximum inflammation for patient 2: 19.0

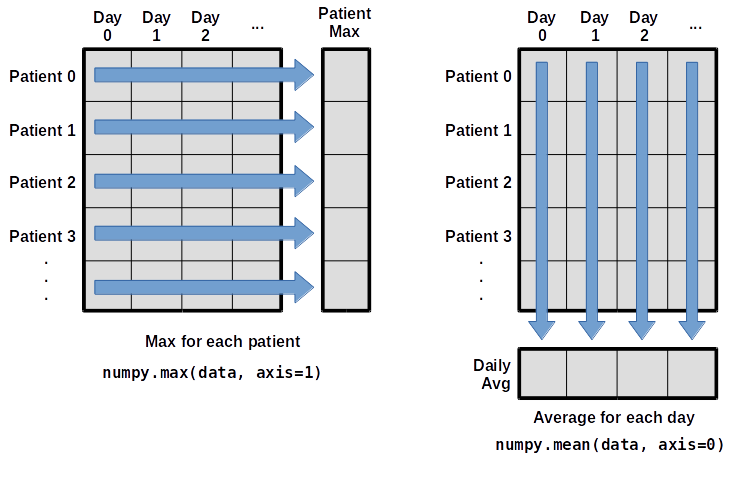

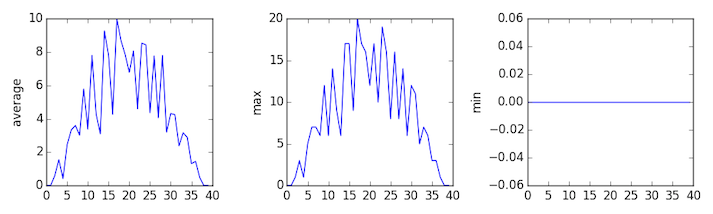

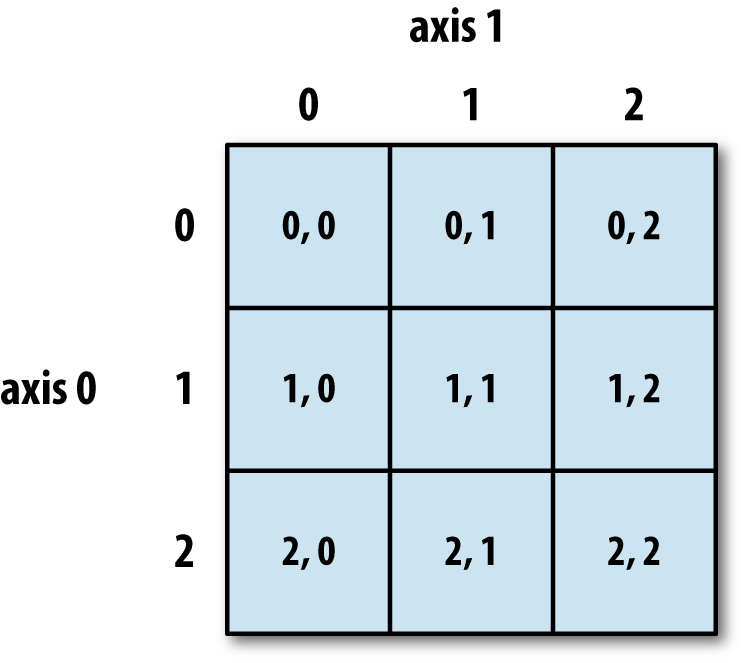

What if we need the maximum inflammation for each patient over all days (as in the next diagram on the left) or the average for each day (as in the diagram on the right)? As the diagram below shows, we want to perform the operation across an axis:

To support this functionality, most array functions allow us to specify the axis we want to work on. If we ask for the average across axis 0 (rows in our 2D example), we get:

print(numpy.mean(data, axis=0))

[ 0. 0.45 1.11666667 1.75 2.43333333 3.15

3.8 3.88333333 5.23333333 5.51666667 5.95 5.9

8.35 7.73333333 8.36666667 9.5 9.58333333

10.63333333 11.56666667 12.35 13.25 11.96666667

11.03333333 10.16666667 10. 8.66666667 9.15 7.25

7.33333333 6.58333333 6.06666667 5.95 5.11666667 3.6

3.3 3.56666667 2.48333333 1.5 1.13333333

0.56666667]

As a quick check, we can ask this array what its shape is:

print(numpy.mean(data, axis=0).shape)

(40,)

The expression (40,) tells us we have an N×1 vector,

so this is the average inflammation per day for all patients.

If we average across axis 1 (columns in our 2D example), we get:

print(numpy.mean(data, axis=1))

[ 5.45 5.425 6.1 5.9 5.55 6.225 5.975 6.65 6.625 6.525

6.775 5.8 6.225 5.75 5.225 6.3 6.55 5.7 5.85 6.55

5.775 5.825 6.175 6.1 5.8 6.425 6.05 6.025 6.175 6.55

6.175 6.35 6.725 6.125 7.075 5.725 5.925 6.15 6.075 5.75

5.975 5.725 6.3 5.9 6.75 5.925 7.225 6.15 5.95 6.275 5.7

6.1 6.825 5.975 6.725 5.7 6.25 6.4 7.05 5.9 ]

which is the average inflammation per patient across all days.

Slicing Strings

A section of an array is called a slice. We can take slices of character strings as well:

element = 'oxygen' print('first three characters:', element[0:3]) print('last three characters:', element[3:6])first three characters: oxy last three characters: genWhat is the value of

element[:4]? What aboutelement[4:]? Orelement[:]?Solution

oxyg en oxygenWhat is

element[-1]? What iselement[-2]?Solution

n eGiven those answers, explain what

element[1:-1]does.Solution

Creates a substring from index 1 up to (not including) the final index, effectively removing the first and last letters from ‘oxygen’

How can we rewrite the slice for getting the last three characters of

element, so that it works even if we assign a different string toelement? Test your solution with the following strings:carpentry,clone,hi.Solution

element = 'oxygen' print('last three characters:', element[-3:]) element = 'carpentry' print('last three characters:', element[-3:]) element = 'clone' print('last three characters:', element[-3:]) element = 'hi' print('last three characters:', element[-3:])last three characters: gen last three characters: try last three characters: one last three characters: hi

Thin Slices

The expression

element[3:3]produces an empty string, i.e., a string that contains no characters. Ifdataholds our array of patient data, what doesdata[3:3, 4:4]produce? What aboutdata[3:3, :]?Solution

array([], shape=(0, 0), dtype=float64) array([], shape=(0, 40), dtype=float64)

Stacking Arrays

Arrays can be concatenated and stacked on top of one another, using NumPy’s

vstackandhstackfunctions for vertical and horizontal stacking, respectively.import numpy A = numpy.array([[1,2,3], [4,5,6], [7, 8, 9]]) print('A = ') print(A) B = numpy.hstack([A, A]) print('B = ') print(B) C = numpy.vstack([A, A]) print('C = ') print(C)A = [[1 2 3] [4 5 6] [7 8 9]] B = [[1 2 3 1 2 3] [4 5 6 4 5 6] [7 8 9 7 8 9]] C = [[1 2 3] [4 5 6] [7 8 9] [1 2 3] [4 5 6] [7 8 9]]Write some additional code that slices the first and last columns of

A, and stacks them into a 3x2 array. Make sure toSolution

A ‘gotcha’ with array indexing is that singleton dimensions are dropped by default. That means

A[:, 0]is a one dimensional array, which won’t stack as desired. To preserve singleton dimensions, the index itself can be a slice or array. For example,A[:, :1]returns a two dimensional array with one singleton dimension (i.e. a column vector).D = numpy.hstack((A[:, :1], A[:, -1:])) print('D = ') print(D)D = [[1 3] [4 6] [7 9]]Solution

An alternative way to achieve the same result is to use Numpy’s delete function to remove the second column of A.

D = numpy.delete(A, 1, 1) print('D = ') print(D)D = [[1 3] [4 6] [7 9]]

Change In Inflammation

The patient data is longitudinal in the sense that each row represents a series of observations relating to one individual. This means that the change in inflammation over time is a meaningful concept. Let’s find out how to calculate changes in the data contained in an array with NumPy.

The

numpy.diff()function takes an array and returns the differences between two successive values. Let’s use it to examine the changes each day across the first week of patient 3 from our inflammation dataset.patient3_week1 = data[3, :7] print(patient3_week1)[0. 0. 2. 0. 4. 2. 2.]Calling

numpy.diff(patient3_week1)would do the following calculations[ 0 - 0, 2 - 0, 0 - 2, 4 - 0, 2 - 4, 2 - 2 ]and return the 6 difference values in a new array.

numpy.diff(patient3_week1)array([ 0., 2., -2., 4., -2., 0.])Note that the array of differences is shorter by one element (length 6).

When calling

numpy.diffwith a multi-dimensional array, anaxisargument may be passed to the function to specify which axis to process. When applyingnumpy.diffto our 2D inflammation arraydata, which axis would we specify?Solution

Since the row axis (0) is patients, it does not make sense to get the difference between two arbitrary patients. The column axis (1) is in days, so the difference is the change in inflammation – a meaningful concept.

numpy.diff(data, axis=1)If the shape of an individual data file is

(60, 40)(60 rows and 40 columns), what would the shape of the array be after you run thediff()function and why?Solution

The shape will be

(60, 39)because there is one fewer difference between columns than there are columns in the data.How would you find the largest change in inflammation for each patient? Does it matter if the change in inflammation is an increase or a decrease?

Solution

By using the

numpy.max()function after you apply thenumpy.diff()function, you will get the largest difference between days.numpy.max(numpy.diff(data, axis=1), axis=1)array([ 7., 12., 11., 10., 11., 13., 10., 8., 10., 10., 7., 7., 13., 7., 10., 10., 8., 10., 9., 10., 13., 7., 12., 9., 12., 11., 10., 10., 7., 10., 11., 10., 8., 11., 12., 10., 9., 10., 13., 10., 7., 7., 10., 13., 12., 8., 8., 10., 10., 9., 8., 13., 10., 7., 10., 8., 12., 10., 7., 12.])If inflammation values decrease along an axis, then the difference from one element to the next will be negative. If you are interested in the magnitude of the change and not the direction, the

numpy.absolute()function will provide that.Notice the difference if you get the largest absolute difference between readings.

numpy.max(numpy.absolute(numpy.diff(data, axis=1)), axis=1)array([ 12., 14., 11., 13., 11., 13., 10., 12., 10., 10., 10., 12., 13., 10., 11., 10., 12., 13., 9., 10., 13., 9., 12., 9., 12., 11., 10., 13., 9., 13., 11., 11., 8., 11., 12., 13., 9., 10., 13., 11., 11., 13., 11., 13., 13., 10., 9., 10., 10., 9., 9., 13., 10., 9., 10., 11., 13., 10., 10., 12.])

Key Points

Import a library into a program using

import libraryname.Use the

numpylibrary to work with arrays in Python.The expression

array.shapegives the shape of an array.Use

array[x, y]to select a single element from a 2D array.Array indices start at 0, not 1.

Use

low:highto specify aslicethat includes the indices fromlowtohigh-1.Use

# some kind of explanationto add comments to programs.Use

numpy.mean(array),numpy.max(array), andnumpy.min(array)to calculate simple statistics.Use

numpy.mean(array, axis=0)ornumpy.mean(array, axis=1)to calculate statistics across the specified axis.

Visualizing Tabular Data

Overview

Teaching: 30 min

Exercises: 20 minQuestions

How can I visualize tabular data in Python?

How can I group several plots together?

Objectives

Plot simple graphs from data.

Group several graphs in a single figure.

Visualizing data

The mathematician Richard Hamming once said, “The purpose of computing is insight, not numbers,” and

the best way to develop insight is often to visualize data. Visualization deserves an entire

lecture of its own, but we can explore a few features of Python’s matplotlib library here. While

there is no official plotting library, matplotlib is the de facto standard. First, we will

import the pyplot module from matplotlib and use two of its functions to create and display a

heat map of our data:

import matplotlib.pyplot

image = matplotlib.pyplot.imshow(data)

matplotlib.pyplot.show()

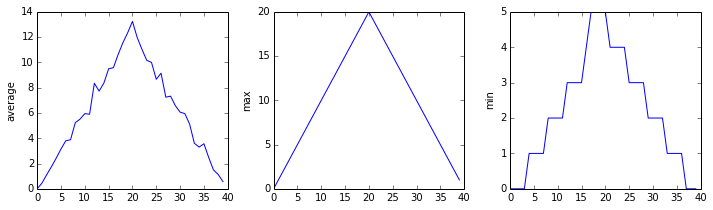

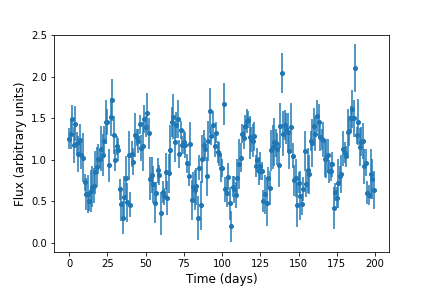

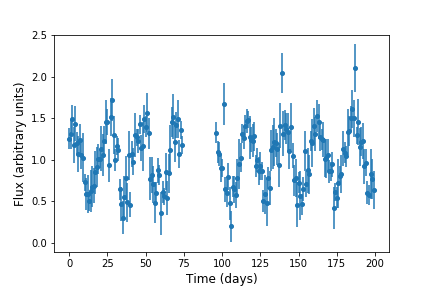

Blue pixels in this heat map represent low values, while yellow pixels represent high values. As we can see, inflammation rises and falls over a 40-day period. Let’s take a look at the average inflammation over time:

ave_inflammation = numpy.mean(data, axis=0)

ave_plot = matplotlib.pyplot.plot(ave_inflammation)

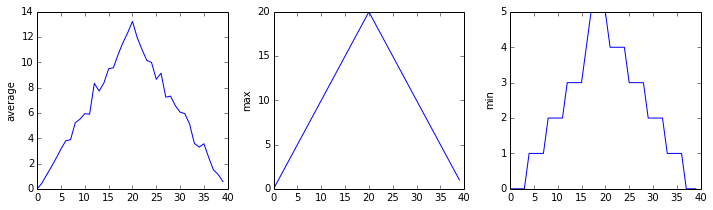

matplotlib.pyplot.show()

Here, we have put the average inflammation per day across all patients in the variable ave_inflammation, then

asked matplotlib.pyplot to create and display a line graph of those values. The result is a

roughly linear rise and fall, which is suspicious: we might instead expect a sharper rise and slower

fall. Let’s have a look at two other statistics:

max_plot = matplotlib.pyplot.plot(numpy.max(data, axis=0))

matplotlib.pyplot.show()

min_plot = matplotlib.pyplot.plot(numpy.min(data, axis=0))

matplotlib.pyplot.show()

The maximum value rises and falls smoothly, while the minimum seems to be a step function. Neither trend seems particularly likely, so either there’s a mistake in our calculations or something is wrong with our data. This insight would have been difficult to reach by examining the numbers themselves without visualization tools.

Grouping plots

You can group similar plots in a single figure using subplots.

This script below uses a number of new commands. The function matplotlib.pyplot.figure()

creates a space into which we will place all of our plots. The parameter figsize

tells Python how big to make this space. Each subplot is placed into the figure using

its add_subplot method. The add_subplot method takes 3

parameters. The first denotes how many total rows of subplots there are, the second parameter

refers to the total number of subplot columns, and the final parameter denotes which subplot

your variable is referencing (left-to-right, top-to-bottom). Each subplot is stored in a

different variable (axes1, axes2, axes3). Once a subplot is created, the axes can

be titled using the set_xlabel() command (or set_ylabel()).

Here are our three plots side by side:

import numpy

import matplotlib.pyplot

data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',')

fig = matplotlib.pyplot.figure(figsize=(10.0, 3.0))

axes1 = fig.add_subplot(1, 3, 1)

axes2 = fig.add_subplot(1, 3, 2)

axes3 = fig.add_subplot(1, 3, 3)

axes1.set_ylabel('average')

axes1.plot(numpy.mean(data, axis=0))

axes2.set_ylabel('max')

axes2.plot(numpy.max(data, axis=0))

axes3.set_ylabel('min')

axes3.plot(numpy.min(data, axis=0))

fig.tight_layout()

matplotlib.pyplot.savefig('inflammation.png')

matplotlib.pyplot.show()

The call to loadtxt reads our data,

and the rest of the program tells the plotting library

how large we want the figure to be,

that we’re creating three subplots,

what to draw for each one,

and that we want a tight layout.

(If we leave out that call to fig.tight_layout(),

the graphs will actually be squeezed together more closely.)

The call to savefig stores the plot as a graphics file. This can be

a convenient way to store your plots for use in other documents, web

pages etc. The graphics format is automatically determined by

Matplotlib from the file name ending we specify; here PNG from

‘inflammation.png’. Matplotlib supports many different graphics

formats, including SVG, PDF, and JPEG.

Plot Scaling

Why do all of our plots stop just short of the upper end of our graph?

Solution

Because matplotlib normally sets x and y axes limits to the min and max of our data (depending on data range)

If we want to change this, we can use the

set_ylim(min, max)method of each ‘axes’, for example:axes3.set_ylim(0,6)Update your plotting code to automatically set a more appropriate scale. (Hint: you can make use of the

maxandminmethods to help.)Solution

# One method axes3.set_ylabel('min') axes3.plot(numpy.min(data, axis=0)) axes3.set_ylim(0,6)Solution

# A more automated approach min_data = numpy.min(data, axis=0) axes3.set_ylabel('min') axes3.plot(min_data) axes3.set_ylim(numpy.min(min_data), numpy.max(min_data) * 1.1)

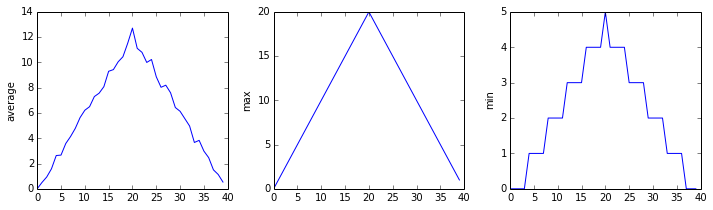

Drawing Straight Lines

In the center and right subplots above, we expect all lines to look like step functions because non-integer value are not realistic for the minimum and maximum values. However, you can see that the lines are not always vertical or horizontal, and in particular the step function in the subplot on the right looks slanted. Why is this?

Solution

Because matplotlib interpolates (draws a straight line) between the points. One way to do avoid this is to use the Matplotlib

drawstyleoption:import numpy import matplotlib.pyplot data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',') fig = matplotlib.pyplot.figure(figsize=(10.0, 3.0)) axes1 = fig.add_subplot(1, 3, 1) axes2 = fig.add_subplot(1, 3, 2) axes3 = fig.add_subplot(1, 3, 3) axes1.set_ylabel('average') axes1.plot(numpy.mean(data, axis=0), drawstyle='steps-mid') axes2.set_ylabel('max') axes2.plot(numpy.max(data, axis=0), drawstyle='steps-mid') axes3.set_ylabel('min') axes3.plot(numpy.min(data, axis=0), drawstyle='steps-mid') fig.tight_layout() matplotlib.pyplot.show()

Make Your Own Plot

Create a plot showing the standard deviation (

numpy.std) of the inflammation data for each day across all patients.Solution

std_plot = matplotlib.pyplot.plot(numpy.std(data, axis=0)) matplotlib.pyplot.show()

Moving Plots Around

Modify the program to display the three plots on top of one another instead of side by side.

Solution

import numpy import matplotlib.pyplot data = numpy.loadtxt(fname='inflammation-01.csv', delimiter=',') # change figsize (swap width and height) fig = matplotlib.pyplot.figure(figsize=(3.0, 10.0)) # change add_subplot (swap first two parameters) axes1 = fig.add_subplot(3, 1, 1) axes2 = fig.add_subplot(3, 1, 2) axes3 = fig.add_subplot(3, 1, 3) axes1.set_ylabel('average') axes1.plot(numpy.mean(data, axis=0)) axes2.set_ylabel('max') axes2.plot(numpy.max(data, axis=0)) axes3.set_ylabel('min') axes3.plot(numpy.min(data, axis=0)) fig.tight_layout() matplotlib.pyplot.show()

Key Points

Use the

pyplotmodule from thematplotliblibrary for creating simple visualizations.

Repeating Actions with Loops

Overview

Teaching: 30 min

Exercises: 0 minQuestions

How can I do the same operations on many different values?

Objectives

Explain what a

forloop does.Correctly write

forloops to repeat simple calculations.Trace changes to a loop variable as the loop runs.

Trace changes to other variables as they are updated by a

forloop.

In the last episode, we wrote Python code that plots values of interest from our first

inflammation dataset (inflammation-01.csv), which revealed some suspicious features in it.

We have a dozen data sets right now, though, and more on the way. We want to create plots for all of our data sets with a single statement. To do that, we’ll have to teach the computer how to repeat things.

An example task that we might want to repeat is printing each character in a word on a line of its own.

word = 'lead'

In Python, a string is basically an ordered collection of characters, and every

character has a unique number associated with it – its index. This means that

we can access characters in a string using their indices.

For example, we can get the first character of the word 'lead', by using

word[0]. One way to print each character is to use four print statements:

print(word[0])

print(word[1])

print(word[2])

print(word[3])

l

e

a

d

This is a bad approach for three reasons:

-

Not scalable. Imagine you need to print characters of a string that is hundreds of letters long. It might be easier to type them in manually.

-

Difficult to maintain. If we want to decorate each printed character with an asterisk or any other character, we would have to change four lines of code. While this might not be a problem for short strings, it would definitely be a problem for longer ones.

-

Fragile. If we use it with a word that has more characters than what we initially envisioned, it will only display part of the word’s characters. A shorter string, on the other hand, will cause an error because it will be trying to display part of the string that doesn’t exist.

word = 'tin'

print(word[0])

print(word[1])

print(word[2])

print(word[3])

t

i

n

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

<ipython-input-3-7974b6cdaf14> in <module>()

3 print(word[1])

4 print(word[2])

----> 5 print(word[3])

IndexError: string index out of range

Here’s a better approach:

word = 'lead'

for char in word:

print(char)

l

e

a

d

This is shorter — certainly shorter than something that prints every character in a hundred-letter string — and more robust as well:

word = 'oxygen'

for char in word:

print(char)

o

x

y

g

e

n

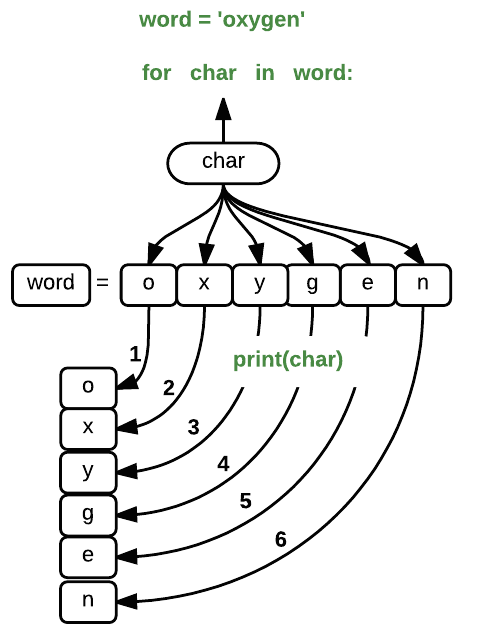

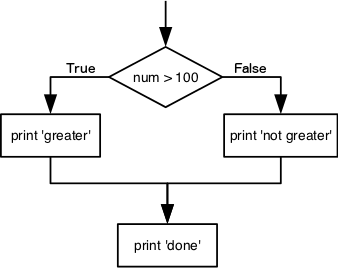

The improved version uses a for loop to repeat an operation — in this case, printing — once for each thing in a sequence. The general form of a loop is:

for variable in collection:

# do things using variable, such as print

Using the oxygen example above, the loop might look like this:

where each character (char) in the variable word is looped through and printed one character

after another. The numbers in the diagram denote which loop cycle the character was printed in (1

being the first loop, and 6 being the final loop).

We can call the loop variable anything we like, but

there must be a colon at the end of the line starting the loop, and we must indent anything we

want to run inside the loop. Unlike many other languages, there is no command to signify the end

of the loop body (e.g. end for); what is indented after the for statement belongs to the loop.

What’s in a name?

In the example above, the loop variable was given the name

charas a mnemonic; it is short for ‘character’. We can choose any name we want for variables. We can even call our loop variablebanana, as long as we use this name consistently:word = 'oxygen' for banana in word: print(banana)o x y g e nIt is a good idea to choose variable names that are meaningful, otherwise it would be more difficult to understand what the loop is doing.

Here’s another loop that repeatedly updates a variable:

length = 0

for vowel in 'aeiou':

length = length + 1

print('There are', length, 'vowels')

There are 5 vowels

It’s worth tracing the execution of this little program step by step.

Since there are five characters in 'aeiou',

the statement on line 3 will be executed five times.

The first time around,

length is zero (the value assigned to it on line 1)

and vowel is 'a'.

The statement adds 1 to the old value of length,

producing 1,

and updates length to refer to that new value.

The next time around,

vowel is 'e' and length is 1,

so length is updated to be 2.

After three more updates,

length is 5;

since there is nothing left in 'aeiou' for Python to process,

the loop finishes

and the print statement on line 4 tells us our final answer.

Note that a loop variable is a variable that’s being used to record progress in a loop. It still exists after the loop is over, and we can re-use variables previously defined as loop variables as well:

letter = 'z'

for letter in 'abc':

print(letter)

print('after the loop, letter is', letter)

a

b

c

after the loop, letter is c

Note also that finding the length of a string is such a common operation

that Python actually has a built-in function to do it called len:

print(len('aeiou'))

5

len is much faster than any function we could write ourselves,

and much easier to read than a two-line loop;

it will also give us the length of many other things that we haven’t met yet,

so we should always use it when we can.

From 1 to N

Python has a built-in function called

rangethat generates a sequence of numbers.rangecan accept 1, 2, or 3 parameters.

- If one parameter is given,

rangegenerates a sequence of that length, starting at zero and incrementing by 1. For example,range(3)produces the numbers0, 1, 2.- If two parameters are given,

rangestarts at the first and ends just before the second, incrementing by one. For example,range(2, 5)produces2, 3, 4.- If

rangeis given 3 parameters, it starts at the first one, ends just before the second one, and increments by the third one. For example,range(3, 10, 2)produces3, 5, 7, 9.Using

range, write a loop that usesrangeto print the first 3 natural numbers:1 2 3Solution

for number in range(1, 4): print(number)

Understanding the loops

Given the following loop:

word = 'oxygen' for char in word: print(char)How many times is the body of the loop executed?

- 3 times

- 4 times

- 5 times

- 6 times

Solution

The body of the loop is executed 6 times.

Computing Powers With Loops

Exponentiation is built into Python:

print(5 ** 3)125Write a loop that calculates the same result as

5 ** 3using multiplication (and without exponentiation).Solution

result = 1 for number in range(0, 3): result = result * 5 print(result)

Reverse a String

Knowing that two strings can be concatenated using the

+operator, write a loop that takes a string and produces a new string with the characters in reverse order, so'Newton'becomes'notweN'.Solution

newstring = '' oldstring = 'Newton' for char in oldstring: newstring = char + newstring print(newstring)

Computing the Value of a Polynomial

The built-in function

enumeratetakes a sequence (e.g. a list) and generates a new sequence of the same length. Each element of the new sequence is a pair composed of the index (0, 1, 2,…) and the value from the original sequence:for idx, val in enumerate(a_list): # Do something using idx and valThe code above loops through

a_list, assigning the index toidxand the value toval.Suppose you have encoded a polynomial as a list of coefficients in the following way: the first element is the constant term, the second element is the coefficient of the linear term, the third is the coefficient of the quadratic term, etc.

x = 5 coefs = [2, 4, 3] y = coefs[0] * x**0 + coefs[1] * x**1 + coefs[2] * x**2 print(y)97Write a loop using

enumerate(coefs)which computes the valueyof any polynomial, givenxandcoefs.Solution

y = 0 for idx, coef in enumerate(coefs): y = y + coef * x**idx

Key Points

Use

for variable in sequenceto process the elements of a sequence one at a time.The body of a

forloop must be indented.Use

len(thing)to determine the length of something that contains other values.

Storing Multiple Values in Lists

Overview

Teaching: 30 min

Exercises: 15 minQuestions

How can I store many values together?

Objectives

Explain what a list is.

Create and index lists of simple values.

Change the values of individual elements

Append values to an existing list

Reorder and slice list elements

Create and manipulate nested lists

Similar to a string that can contain many characters, a list is a container that can store many values. Unlike NumPy arrays, lists are built into the language (so we don’t have to load a library to use them). We create a list by putting values inside square brackets and separating the values with commas:

odds = [1, 3, 5, 7]

print('odds are:', odds)

odds are: [1, 3, 5, 7]

We can access elements of a list using indices – numbered positions of elements in the list. These positions are numbered starting at 0, so the first element has an index of 0.

print('first element:', odds[0])

print('last element:', odds[3])

print('"-1" element:', odds[-1])

first element: 1

last element: 7

"-1" element: 7

Yes, we can use negative numbers as indices in Python. When we do so, the index -1 gives us the

last element in the list, -2 the second to last, and so on.

Because of this, odds[3] and odds[-1] point to the same element here.

If we loop over a list, the loop variable is assigned to its elements one at a time:

for number in odds:

print(number)

1

3

5

7

There is one important difference between lists and strings: we can change the values in a list, but we cannot change individual characters in a string. For example:

names = ['Curie', 'Darwing', 'Turing'] # typo in Darwin's name

print('names is originally:', names)

names[1] = 'Darwin' # correct the name

print('final value of names:', names)

names is originally: ['Curie', 'Darwing', 'Turing']

final value of names: ['Curie', 'Darwin', 'Turing']

works, but:

name = 'Darwin'

name[0] = 'd'

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-8-220df48aeb2e> in <module>()

1 name = 'Darwin'

----> 2 name[0] = 'd'

TypeError: 'str' object does not support item assignment

does not.

Ch-Ch-Ch-Ch-Changes

Data which can be modified in place is called mutable, while data which cannot be modified is called immutable. Strings and numbers are immutable. This does not mean that variables with string or number values are constants, but when we want to change the value of a string or number variable, we can only replace the old value with a completely new value.

Lists and arrays, on the other hand, are mutable: we can modify them after they have been created. We can change individual elements, append new elements, or reorder the whole list. For some operations, like sorting, we can choose whether to use a function that modifies the data in-place or a function that returns a modified copy and leaves the original unchanged.

Be careful when modifying data in-place. If two variables refer to the same list, and you modify the list value, it will change for both variables!

salsa = ['peppers', 'onions', 'cilantro', 'tomatoes'] my_salsa = salsa # <-- my_salsa and salsa point to the *same* list data in memory salsa[0] = 'hot peppers' print('Ingredients in my salsa:', my_salsa)Ingredients in my salsa: ['hot peppers', 'onions', 'cilantro', 'tomatoes']If you want variables with mutable values to be independent, you must make a copy of the value when you assign it.

salsa = ['peppers', 'onions', 'cilantro', 'tomatoes'] my_salsa = list(salsa) # <-- makes a *copy* of the list salsa[0] = 'hot peppers' print('Ingredients in my salsa:', my_salsa)Ingredients in my salsa: ['peppers', 'onions', 'cilantro', 'tomatoes']Because of pitfalls like this, code which modifies data in place can be more difficult to understand. However, it is often far more efficient to modify a large data structure in place than to create a modified copy for every small change. You should consider both of these aspects when writing your code.