Introducing probability calculus and conditional probability

Overview

Teaching: 40 min

Exercises: 40 min

Questions

How do we calculate with probabilities, taking account of whether some event is dependent on the occurrence of another?

Objectives

Learn the key definitions of probability theory: events, sample spaces, conditional probability and independence.

Learn how to use the probability calculus to calculate the probabilities of events or combinations of events, which may be conditional on each other.

In this episode we will be using numpy. You can set this up as follows:

import numpy as np

Probability, and frequentist vs. Bayesian approaches

Correct statistical inference requires an understanding of probability and how to work with it. We can do this using the language of mathematics and probability theory.

First let us consider what a probability is. There are two approaches to this, famously known as the frequentist and Bayesian approaches:

- A frequentist approach considers that the probability \(P\) of a random event occurring is equivalent to the frequency such an event would occur at, in an infinite number of repeating trials which have that event as one of the outcomes. \(P\) is expressed as the fractional number of occurrences, relative to the total number. For example, consider the flip of a coin (which is perfect so it cannot land on its edge, only on one of its two sides). If the coin is fair (unbiased to one side or the other), it will land on the ‘heads’ side (denoted by \(H\)) exactly half the time in an infinite number of trials, i.e. the probability \(P(H)=0.5\).

- A Bayesian approach considers the idea of an infinite number of trials to be somewhat abstract: instead the Bayesian considers the probability \(P\) to represent the belief that we have that the event will occur. In this case we can again state that \(P(H)=0.5\) if the coin is fair, since this is what we expect. However, the approach also explicitly allows the experimenter’s prior belief in a hypothesis (e.g. ‘The coin is fair?’) to factor in to the assessment of probability. This turns out to be crucial to assessing the probability that a given hypothesis is true.

Some years ago these approaches were seen as being in conflict, but the value of ‘Bayesianism’ in answering scientific questions, as well as in the rapidly expanding field of Machine Learning, means it is now seen as the best approach to statistical inference. We can still get useful insights and methods from frequentism however, so what we present here will focus on what is practically useful and may reflect a mix of both approaches. Although, at heart, we are all Bayesians now.

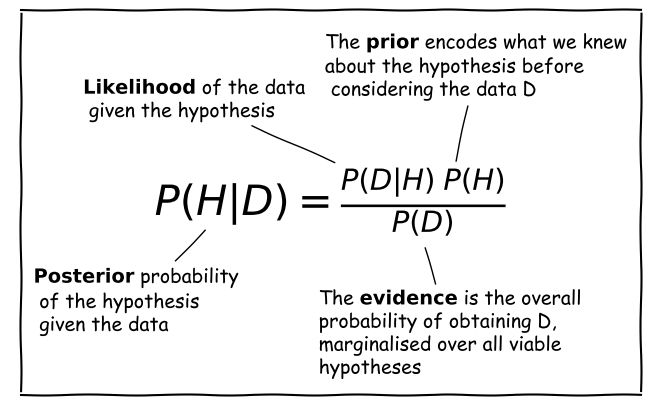

We will consider the details of Bayes’ theorem later on. But first, we must introduce probability theory, in terms of the concepts of sample space and events, conditional probability, probability calculus, and (in the next episodes) probability distributions.

Sample space and conditional events

Imagine a sample space, \(\Omega\), which contains the set of all possible and mutually exclusive outcomes of some random process (also known as elements or elementary outcomes of the set). In statistical terminology, an event is a set containing one or more outcomes. The event occurs if the outcome of a draw (or sample) of that process is in that set. Events do not have to be mutually exclusive and may also share outcomes, so that events may also be considered as combinations or subsets of other events.

For example, we can denote the sample space of the results (Heads, Tails) of two successive coin flips as \(\Omega = \{HH, HT, TH, TT\}\). Each of the four outcomes of coin flips can be seen as a separate event, but we can also consider new events, such as the event where the first flip is heads \(\{HH, HT\}\), or the event where both flips are the same \(\{HH, TT\}\).

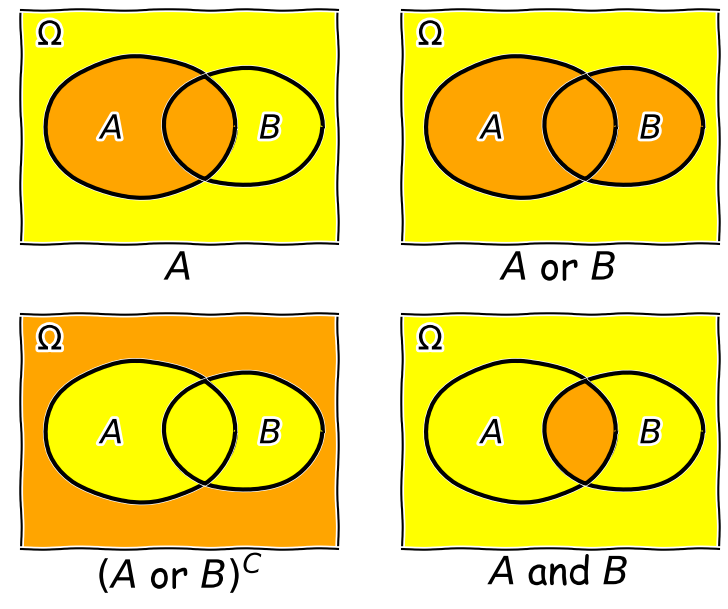

Now consider two events \(A\) and \(B\), whose probability is conditional on one another. I.e. the chance of one event occurring is dependent on whether the other event also occurs. The occurrence of conditional events can be represented by Venn diagrams where the entire area of the diagram represents the sample space of all possible events (i.e. probability \(P(\Omega)=1\)) and the probability of a given event or combination of events is represented by its area on the diagram. The diagram below shows four of the possible combinations of events, where the area highlighted in orange shows the event (or combination) being described in the notation below.

We’ll now describe these combinations and do the equivalent calculation for the coin flip case where event \(A=\{HH, HT\}\) and event \(B=\{HH, TT\}\) (the probabilities are equal to 0.5 for these two events, so the example diagram is not to scale).

- Event \(A\) occurs (regardless of whether \(B\) also occurs), with probability \(P(A)\) given by the area of the enclosed shape relative to the total area.

- Event \((A \mbox{ or } B)\) occurs (in set notation this is the union of sets, \(A \cup B\)). Note that the formal ‘\(\mbox{or}\)’ here is the same as in programming logic, i.e. it corresponds to ‘either or both’ events occurring. The total probability is not \(P(A)+P(B)\) however, because that would double-count the intersecting region. In fact you can see from the diagram that \(P(A \mbox{ or } B) = P(A)+P(B)-P(A \mbox{ and } B)\). Note that if \(P(A \mbox{ or } B) = P(A)+P(B)\) we say that the two events are mutually exclusive (since \(P(A \mbox{ and } B)=0\)).

- Event \((A \mbox{ or } B)^{C}\) occurs and is the complement of \((A \mbox{ or } B)\) which is everything excluding \((A \mbox{ or } B)\), i.e. \(\mbox{not }(A \mbox{ or } B)\).

- Event \((A \mbox{ and } B)\) occurs (in set notation this is the intersection of sets, \(A\cap B\)). The probability of \((A \mbox{ and } B)\) corresponds to the area of the overlapping region.

Now in our coin flip example, we know the total sample space is \(\Omega = \{HH, HT, TH, TT\}\) and for a fair coin each of the four outcomes \(X\), has a probability \(P(X)=0.25\). Therefore:

- \(A\) consists of 2 outcomes, so \(P(A) = 0.5\)

- \((A \mbox{ or } B)\) consists of 3 outcomes (since \(TH\) is not included), \(P(A \mbox{ or } B) = 0.75\)

- \((A \mbox{ or } B)^{C}\) corresponds to \(\{TH\}\) only, so \(P\left((A \mbox{ or } B)^{C}\right)=0.25\)

- \((A \mbox{ and } B)\) corresponds to the overlap of the two sets, i.e. \(HH\), so \(P(A \mbox{ and } B)=0.25\).
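The four coin-flip probabilities above can be checked by treating events as Python sets. This is only a minimal sketch, assuming equally probable outcomes; the helper name `prob` is ours and not part of any library.

```python
from fractions import Fraction

# Sample space for two successive flips of a fair coin
omega = {"HH", "HT", "TH", "TT"}
A = {"HH", "HT"}  # event A: the first flip is heads
B = {"HH", "TT"}  # event B: both flips are the same

def prob(event):
    """Probability of an event when all outcomes in omega are equally probable."""
    return Fraction(len(event), len(omega))

p_A = prob(A)
p_A_or_B = prob(A | B)                # union of the two sets
p_not_A_or_B = prob(omega - (A | B))  # complement of the union
p_A_and_B = prob(A & B)               # intersection of the two sets

print(p_A, p_A_or_B, p_not_A_or_B, p_A_and_B)  # prints: 1/2 3/4 1/4 1/4
```

Using `Fraction` keeps the results exact, so the addition rule \(P(A \mbox{ or } B) = P(A)+P(B)-P(A \mbox{ and } B)\) can be verified without rounding error.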

Trials and samples

In the language of statistics a trial is a single ‘experiment’ to produce a set of one or more measurements, or in purely statistical terms, a single realisation of a sampling process, e.g. the random draw of one or more outcomes from a sample space. The result of a trial is to produce a sample. It is important not to confuse the sample size, which is the number of outcomes in a sample (i.e. produced by a single trial) with the number of trials. An important aspect of a trial is that the outcome is independent of that of any of the other trials. This need not be the case for the measurements in a sample which may or may not be independent.

Some examples are:

- A roll of a pair of dice would be a single trial. The sample size is 2 and the sample would be the numbers on the dice. It is also possible to consider that a roll of a pair of dice is two separate trials for a sample of a single dice (since the outcome of each is presumably independent of the other roll).

- A single sample of fake data (e.g. from random numbers) generated by a computer would be a trial. By simulating many trials, the distribution of data expected from complex models could be generated.

- A full deal of a deck of cards (to all players) would be a single trial. The sample would be the hands that are dealt to all the players, and the sample size would be the number of players. Note that individual players’ hands cannot themselves be seen as trials as they are clearly not independent of one another (since dealing from a shuffled deck of cards is sampling without replacement).

Test yourself: dice roll sample space

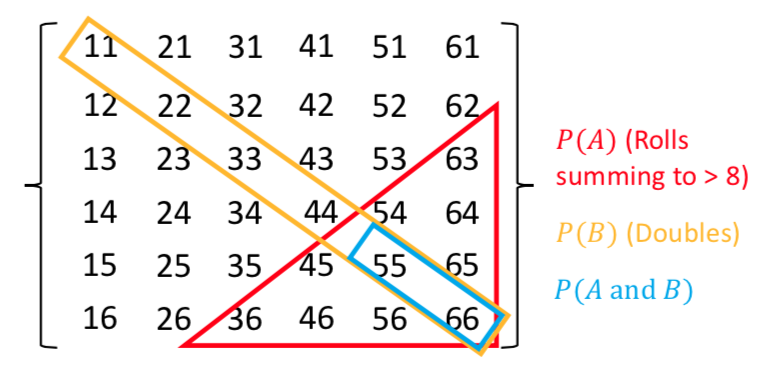

Write out as a grid the sample space of the roll of two six-sided dice (one after the other), e.g. a roll of 1 followed by 3 is denoted by the element 13. You can neglect commas for clarity. For example, the top row and start of the next row will be:

\[11\:21\:31\:41\:51\:61\] \[12\:22\:...........\]

Now highlight the regions corresponding to:

- Event \(A\): the numbers on both dice add up to be \(>\)8.

- Event \(B\): both dice roll the same number (e.g. two ones, two twos etc.).

Finally use your grid to calculate the probabilities of \((A \mbox{ and } B)\) and \((A \mbox{ or } B)\), assuming that the dice are fair, so that all the outcomes of a roll of two dice are equally probable.

Solution

There are 36 possible outcomes, so assuming they are equally probable, a single outcome has a probability of 1/36 (\(\simeq\)0.028). We can see that the region corresponding to \(A \mbox{ and } B\) contains 2 outcomes, so \(P(A \mbox{ and } B)=2/36\). Region \(A\) contains 10 outcomes while region \(B\) contains 6. \(P(A \mbox{ or } B)\), which here corresponds to the number of unique outcomes, is given by: \(P(A)+P(B)-P(A \mbox{ and } B)=(10+6-2)/36=7/18\).
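If you want to check your grid, the sample space can also be enumerated in code. The sketch below assumes fair dice and represents each outcome as a tuple; the variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 36 ordered outcomes of rolling two six-sided dice
omega = set(product(range(1, 7), repeat=2))

A = {roll for roll in omega if sum(roll) > 8}       # total greater than 8
B = {roll for roll in omega if roll[0] == roll[1]}  # doubles

n_outcomes = len(omega)
p_A_and_B = Fraction(len(A & B), n_outcomes)
p_A_or_B = Fraction(len(A | B), n_outcomes)

print(len(A), len(B), len(A & B))
print(p_A_and_B, p_A_or_B)
```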

Conditional probability

We can also ask the question, what is the probability that an event \(A\) occurs if we know that the other event \(B\) occurs? We write this as the probability of \(A\) conditional on \(B\), i.e. \(P(A\vert B)\). We often also state this is the ‘probability of A given B’.

To calculate this, we can see from the Venn diagram that if \(B\) occurs (i.e. we now have \(P(B)=1\)), the probability of \(A\) also occurring is equal to the fraction of the area of \(B\) covered by \(A\). I.e. in the case where outcomes have equal probability, it is the fraction of outcomes in set \(B\) which are also contained in set \(A\).

This gives us the equation for conditional probability:

\[P(A\vert B) = \frac{P(A \mbox{ and } B)}{P(B)}\]

So, for our coin flip example, \(P(A\vert B) = 0.25/0.5 = 0.5\). This makes sense because only one of the two outcomes in \(B\) (\(HH\)) is contained in \(A\).

In our simple coin flip example, the sets \(A\) and \(B\) contain an equal number of equal-probability outcomes, and the symmetry of the situation means that \(P(B\vert A)=P(A\vert B)\). However, this is not normally the case.

For example, consider the set \(A\) of people taking this class, and the set of all students \(B\). Clearly the probability of someone being a student, given that they are taking this class, is very high, but the probability of someone taking this class, given that they are a student, is not. In general \(P(B\vert A)\neq P(A\vert B)\).

Note that events \(A\) and \(B\) are independent if \(P(A\vert B) = P(A) \Rightarrow P(A \mbox{ and } B) = P(A)P(B)\). The latter equation is the one for calculating combined probabilities of events that many people are familiar with, but it only holds if the events are independent! For example, the probability that you ate a cheese sandwich for lunch is (generally) independent of the probability that you flip two heads in a row. Clearly, independent events do not belong on the same Venn diagram since they have no relation to one another! However, if you are flipping the coin in order to narrow down what sandwich filling to use, the coin flip and sandwich choice can be classed as outcomes on the same Venn diagram and their combination can become an event with an associated probability.

Test yourself: conditional probability for a dice roll

Use the solution to the dice question above to calculate:

- The probability of rolling doubles given that the total rolled is greater than 8.

- The probability of rolling a total greater than 8, given that you rolled doubles.

Solution

- \(P(B\vert A) = \frac{P(B \mbox{ and } A)}{P(A)}\) (note that the names of the events are arbitrary so we can simply swap them around!). Since \(P(B \mbox{ and } A)=P(A \mbox{ and } B)\) we have \(P(B\vert A) =(2/36)/(10/36)=1/5\).

- \(P(A\vert B) = \frac{P(A \mbox{ and } B)}{P(B)}\) so we have \(P(A\vert B)=(2/36)/(6/36)=1/3\).
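The same two conditional probabilities can be obtained by applying the conditional probability equation directly to sets of outcomes. This is a sketch under the assumption of fair dice; `prob` and `cond_prob` are hypothetical helper names.

```python
from fractions import Fraction
from itertools import product

omega = set(product(range(1, 7), repeat=2))         # two-dice sample space
A = {roll for roll in omega if sum(roll) > 8}       # total greater than 8
B = {roll for roll in omega if roll[0] == roll[1]}  # doubles

def prob(event):
    """Probability of an event for equally probable outcomes."""
    return Fraction(len(event), len(omega))

def cond_prob(event, given):
    """P(event | given) = P(event and given) / P(given)."""
    return prob(event & given) / prob(given)

p_B_given_A = cond_prob(B, A)  # doubles, given total > 8
p_A_given_B = cond_prob(A, B)  # total > 8, given doubles

print(p_B_given_A, p_A_given_B)  # prints: 1/5 1/3
```

The two results differ, which illustrates that in general \(P(B\vert A)\neq P(A\vert B)\).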

Rules of probability calculus

We can now write down the rules of probability calculus and their extensions:

- The convexity rule sets some defining limits: \(0 \leq P(A\vert B) \leq 1 \mbox{ and } P(A\vert A)=1\)

- The addition rule: \(P(A \mbox{ or } B) = P(A)+P(B)-P(A \mbox{ and } B)\)

- The multiplication rule is derived from the equation for conditional probability: \(P(A \mbox{ and } B) = P(A\vert B) P(B)\)

\(A\) and \(B\) are independent if \(P(A\vert B) = P(A) \Rightarrow P(A \mbox{ and } B) = P(A)P(B)\).

We can also ‘extend the conversation’ to consider the probability of \(B\) in terms of probabilities with \(A\):

\[\begin{align} P(B) & = P\left((B \mbox{ and } A) \mbox{ or } (B \mbox{ and } A^{C})\right) \\ & = P(B \mbox{ and } A) + P(B \mbox{ and } A^{C}) \\ & = P(B\vert A)P(A)+P(B\vert A^{C})P(A^{C}) \end{align}\]

The 2nd line comes from applying the addition rule and because the events \((B \mbox{ and } A)\) and \((B \mbox{ and } A^{C})\) are mutually exclusive. The final result then follows from applying the multiplication rule.

Finally we can use the ‘extension of the conversation’ rule to derive the law of total probability. Consider a set of all possible mutually exclusive events \(\Omega = \{A_{1},A_{2},...,A_{n}\}\). We can start with the first two steps of the extension of the conversation, then express the results using sums of probabilities:

\[P(B) = P(B \mbox{ and } \Omega) = P(B \mbox{ and } A_{1}) + P(B \mbox{ and } A_{2})+ \ldots + P(B \mbox{ and } A_{n})\] \[= \sum\limits_{i=1}^{n} P(B \mbox{ and } A_{i})\] \[= \sum\limits_{i=1}^{n} P(B\vert A_{i}) P(A_{i})\]

This summation to eliminate the conditional terms is called marginalisation. We can say that we obtain the marginal distribution of \(B\) by marginalising over \(A\) (\(A\) is ‘marginalised out’).

Test yourself: conditional probabilities and GW counterparts

You are an astronomer who is looking for radio counterparts of binary neutron star mergers that are detected via gravitational wave events. Assume that there are three types of binary merger: binary neutron stars (\(NN\)), binary black holes (\(BB\)) and neutron-star-black-hole binaries (\(NB\)). For a hypothetical gravitational wave detector, the probabilities for a detected event to correspond to \(NN\), \(BB\), \(NB\) are 0.05, 0.75, 0.2 respectively. Radio emission is detected only from mergers involving a neutron star, with probabilities 0.72 for \(NN\) and 0.2 for \(NB\).

Assume that you follow up a gravitational wave event with a radio observation, without knowing what type of event you are looking at. Using \(D\) to denote radio detection, express each probability given above as a conditional probability (e.g. \(P(D\vert NN)\)), or otherwise (e.g. \(P(BB)\)). Then use the rules of probability calculus (or their extensions) to calculate the probability that you will detect a radio counterpart.

Solution

We first write down all the probabilities and the terms they correspond to. First the radio detections, which we denote using \(D\):

\(P(D\vert NN) = 0.72\), \(P(D\vert NB) = 0.2\), \(P(D\vert BB) = 0\)

and: \(P(NN)=0.05\), \(P(NB) = 0.2\), \(P(BB)=0.75\)

We need to obtain the probability of a detection, regardless of the type of merger, i.e. we need \(P(D)\). However, since the probabilities of a radio detection are conditional on the merger type, we need to marginalise over the different merger types, i.e.:

\(P(D) = P(D\vert NN)P(NN) + P(D\vert NB)P(NB) + P(D\vert BB)P(BB)\) \(= (0.72\times 0.05) + (0.2\times 0.2) + 0 = 0.076\)
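The marginalisation can also be written as an element-wise product followed by a sum. The following is a small sketch using the probabilities from the question; the array names are our own choice.

```python
import numpy as np

# Merger types in the order NN, NB, BB
p_type = np.array([0.05, 0.2, 0.75])          # P(NN), P(NB), P(BB)
p_det_given_type = np.array([0.72, 0.2, 0.0])  # P(D|NN), P(D|NB), P(D|BB)

# Law of total probability: sum over i of P(D|A_i) P(A_i)
p_det = np.sum(p_det_given_type * p_type)

print(round(p_det, 3))  # prints: 0.076
```

Note that the merger-type probabilities must sum to 1, since the three types are assumed to be mutually exclusive and to cover all detected events.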

You may be able to do this simple calculation without explicitly using the law of total probability, by using the ‘intuitive’ probability calculation approach that you may have learned in the past. However, learning to write down the probability terms, and use the probability calculus, will help you to think systematically about these kinds of problems, and solve more difficult ones (e.g. using Bayes’ theorem, which we will come to later).

Setting up a random event generator in Numpy

It’s often useful to generate your own random outcomes in a program, e.g. to simulate a random process or calculate a probability for some random event which is difficult or even impossible to calculate analytically. In this episode we will consider how to select a non-numerical outcome from a sample space. In the following episodes we will introduce examples of random number generation from different probability distributions.

Common to all these methods is the need for the program to generate random bits which are then used with a method to generate a given type of outcome. This is done in Numpy by setting up a generator object. A generic feature of computer random number generators is that they must be initialised using a random number seed which is an integer value that sets up the sequence of random numbers. Note that the numbers generated are not truly random: the sequence is the same if the same seed is used. However they are random with respect to one another.

E.g.:

rng = np.random.default_rng(331)

will set up a generator with the seed=331. We can use this generator to produce, e.g. random integers to simulate five repeated rolls of a 6-sided dice:

print(rng.integers(1,7,5))

print(rng.integers(1,7,5))

where the first two arguments are the low and high (exclusive, i.e. 1 more than the maximum integer in the sample space) values of the range of contiguous integers to be sampled from, while the third argument is the size of the resulting array of random integers, i.e. how many outcomes to draw from the sample.

[1 3 5 3 6]

[2 3 2 2 6]

Note that repeating the command yields a different sample. This will be the case every time we repeat the integers function call, because the generator starts from a new point in the sequence. If we want to repeat the same ‘random’ sample we have to reset the generator to the same seed:

print(rng.integers(1,7,5))

rng = np.random.default_rng(331)

print(rng.integers(1,7,5))

[4 3 2 5 4]

[1 3 5 3 6]

For many uses of random number generators you may not care about being able to repeat the same sequence of numbers. In these cases you can initialise the generator with the default seed using np.random.default_rng(), which obtains a seed from system information (usually contained in a continuously updated folder on your computer, specially intended to provide random information to any applications that need it). However, if you want to do a statistical test or simulate a random process that is exactly repeatable, you should consider specifying the seed. But do not initialise the same seed unless you want to repeat the same sequence!
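The effect of the seed can be seen by comparing two generators. This is a minimal sketch (the seed value 331 is the one used above, the variable names are illustrative): generators created with the same seed produce the same sequence.

```python
import numpy as np

# Two independent generator objects initialised with the same seed
rng1 = np.random.default_rng(331)
rng2 = np.random.default_rng(331)

rolls1 = rng1.integers(1, 7, 5)  # five rolls of a 6-sided dice
rolls2 = rng2.integers(1, 7, 5)  # same seed, same position in the sequence

print(np.array_equal(rolls1, rolls2))  # prints: True

# An unseeded generator takes its seed from system information,
# so its output is not repeatable from one run to the next.
rng3 = np.random.default_rng()
rolls3 = rng3.integers(1, 7, 5)
```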

Random sampling of items in a list or array

If you want to simulate random sampling of non-numerical or non-sequential elements in a sample space, a simple way to do this is to set up a list or array containing the elements and then apply the numpy method choice to the generator to select from the list. As a default, sampling probabilities are assumed to be \(1/n\) for a list with \(n\) items, but they may be set using the p argument to give an array of probabilities, one for each element in the sample space (these must sum to 1). The replace argument sets whether the sampling should be done with or without replacement.

For example, to set up 10 repeated flips of a coin, for an unbiased and a biased coin:

rng = np.random.default_rng() # Set up the generator with the default system seed

coin = ['h','t']

# Uses the defaults (uniform probability, replace=True)

print("Unbiased coin: ",rng.choice(coin, size=10))

# Now specify probabilities to strongly weight towards heads:

prob = [0.9,0.1]

print("Biased coin: ",rng.choice(coin, size=10, p=prob))

Unbiased coin: ['h' 'h' 't' 'h' 'h' 't' 'h' 't' 't' 'h']

Biased coin: ['h' 'h' 't' 'h' 'h' 't' 'h' 'h' 'h' 'h']

Remember that your own results will differ from these because your random number generator seed will be different!
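Random sampling like this can be used to estimate probabilities by simulation. As a sketch, the code below estimates \(P(A\vert B)\) for the two-coin-flip example from earlier in this episode, where \(A\) is ‘first flip is heads’ and \(B\) is ‘both flips are the same’. The seed and number of trials are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(12345)  # fixed seed so the run is repeatable
n_trials = 100_000

# Each row is one trial consisting of two flips of a fair coin
flips = rng.choice(['h', 't'], size=(n_trials, 2))

first_heads = flips[:, 0] == 'h'        # event A
both_same = flips[:, 0] == flips[:, 1]  # event B

# Fraction of the trials in B which are also in A
p_A_given_B = np.sum(first_heads & both_same) / np.sum(both_same)

print(p_A_given_B)
```

The estimate should be close to the exact value of 0.5, with a scatter that shrinks as the number of trials increases.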

Programming challenge: simulating hands in 3-card poker

A normal deck of 52 playing cards, used for playing poker, consists of 4 ‘suits’ (in English, these are clubs, spades, diamonds and hearts) each with 13 cards. The cards are also ranked by the number or letter on them: in normal poker the rank ordering is first the number cards 2-10, followed by - for English cards - J(ack), Q(ueen), K(ing), A(ce). However, for our purposes we can just consider numbers 1 to 13 with 13 being the highest rank.

In a game of three-card poker you are dealt a ‘hand’ of 3 cards from the deck (you are the first player to be dealt cards). If your three cards can be arranged in sequential numerical order (the suit doesn’t matter), e.g. 7, 8, 9 or 11, 12, 13, your hand is called a straight. If you are dealt three cards from the same suit, that is called a flush. You can also be dealt a straight flush where your hand is both a straight and a flush. Note that these three hands are mutually exclusive (because a straight and a flush in the same hand is always classified as a straight flush)!

Write some Python code that simulates randomly being dealt 3 cards from the deck of 52 and determines whether or not your hand is a straight, flush or straight flush (or none of those). Then simulate a large number of hands (at least \(10^{6}\)!) and from this simulation, calculate the probability that your hand will be a straight, a flush or a straight flush. Use your simulation to see what happens if you are the last player to be dealt cards after 12 other players are dealt their cards from the same deck. Does this change the probability of getting each type of hand?

Hint

To sample cards from the deck you can set up a list of tuples which each represent the suit and the rank of a single card in the deck, e.g. (1,3) for suit 1, card rank 3 in the suit. The exact matching of numbers to suits or cards does not matter!
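One possible way to set up the deck and deal a single hand is sketched below. It only covers the dealing step, not the classification of hands, and the approach of sampling card indices (rather than the tuples themselves) is just one option.

```python
import numpy as np

rng = np.random.default_rng()

# Deck as a list of (suit, rank) tuples: suits 1-4, ranks 1-13
deck = [(suit, rank) for suit in range(1, 5) for rank in range(1, 14)]

# Deal 3 distinct cards by sampling indices without replacement
indices = rng.choice(len(deck), size=3, replace=False)
hand = [deck[i] for i in indices]

print(hand)
```

Sampling with `replace=False` ensures that the same card cannot appear twice in a hand.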

Key Points

Probability can be thought of in terms of hypothetical frequencies of outcomes over an infinite number of trials (Frequentist), or as a belief in the likelihood of a given outcome (Bayesian).

A sample space contains all possible mutually exclusive outcomes of an experiment or trial.

Events consist of sets of outcomes which may overlap, leading to conditional dependence of the occurrence of one event on another. The conditional dependence of events can be described graphically using Venn diagrams.

Two events are independent if their probability does not depend on the occurrence (or not) of the other event. Events are mutually exclusive if the probability of one event is zero given that the other event occurs.

The probability of an event A occurring, given that B occurs, is in general not equal to the probability of B occurring, given that A occurs.

Calculations with conditional probabilities can be made using the probability calculus, including the addition rule, multiplication rule and extensions such as the law of total probability.

Discrete random variables and their probability distributions

Overview

Teaching: 60 min

Exercises: 60 min

Questions

How do we describe discrete random variables and what are their common probability distributions?

How do I calculate the means, variances and other statistical quantities for numbers drawn from probability distributions?

Objectives

Learn how discrete random variables are defined and how the Bernoulli, binomial and Poisson distributions are derived from them.

Learn how the expected means and variances of discrete random variables (and functions of them) can be calculated from their probability distributions.

Plot, and carry out probability calculations with the binomial and Poisson distributions.

Carry out simple simulations using random variables drawn from the binomial and Poisson distributions

In this episode we will be using numpy, as well as matplotlib’s plotting library. Scipy contains an extensive range of probability distributions in its ‘scipy.stats’ module, so we will also need to import it. Remember: scipy modules should be imported separately as required - they cannot be called if only scipy is imported.

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as sps

Discrete random variables

Often, we want to map a sample space \(\Omega\) (denoted with curly brackets) of possible outcomes on to a set of corresponding probabilities. The sample space may consist of non-numerical elements or numerical elements, but in all cases the elements of the sample space represent possible outcomes of a random ‘draw’ from a set of probabilities which can be used to form a discrete probability distribution.

For example, when flipping an ideal coin (which cannot land on its edge!) there are two outcomes, heads (\(H\)) or tails (\(T\)) so we have the sample space \(\Omega = \{H, T\}\). We can also represent the possible outcomes of 2 successive coin flips as the sample space \(\Omega = \{HH, HT, TH, TT\}\). A roll of a 6-sided dice would give \(\Omega = \{1, 2, 3, 4, 5, 6\}\). However, a Poisson process corresponds to the sample space \(\Omega = \mathbb{Z}^{0+}\), i.e. the set of all positive integers and zero, even if the probability for most elements of that sample space is infinitesimal (it is still \(> 0\)).

In the case of a Poisson process or the roll of a dice, our sample space already consists of a set of contiguous (i.e. next to one another in sequence) numbers which correspond to discrete random variables. Where the sample space is categorical, such as heads or tails on a coin, or perhaps a set of discrete but non-integer values (e.g. a pre-defined set of measurements to be randomly drawn from), it is useful to map the elements of the sample space on to a set of integers which then become our discrete random variables. For example, when flipping a coin, we can define the possible values taken by the random variable, known as the variates \(X\), to be:

\[X=
\begin{cases}
0 \quad \mbox{if tails} \\
1 \quad \mbox{if heads}
\end{cases}\]

By defining the values taken by a random variable in this way, we can mathematically define a probability distribution for how likely it is for a given event or outcome to be obtained in a single trial (i.e. a draw - a random selection - from the sample space).

Probability distributions of discrete random variables

Random variables do not just take on any value - they are drawn from some probability distribution. In probability theory, a random measurement (or even a set of measurements) is an event which occurs (is ‘drawn’) with a fixed probability, assuming that the experiment is fixed and the underlying distribution being measured does not change over time (statistically we say that the random process is stationary).

We can write the probability that the variate \(X\) has a value \(x\) as \(p(x) = P(X=x)\), so for the example of flipping a coin, assuming the coin is fair, we have \(p(0) = p(1) = 0.5\). Our definition of mapping events on to random variables therefore allows us to map discrete but non-integer outcomes on to numerically ordered integers \(X\) for which we can construct a probability distribution. Using this approach we can define the cumulative distribution function or cdf for discrete random variables as:

\[F(x) = P(X\leq x) = \sum\limits_{x_{i}\leq x} p(x_{i})\]

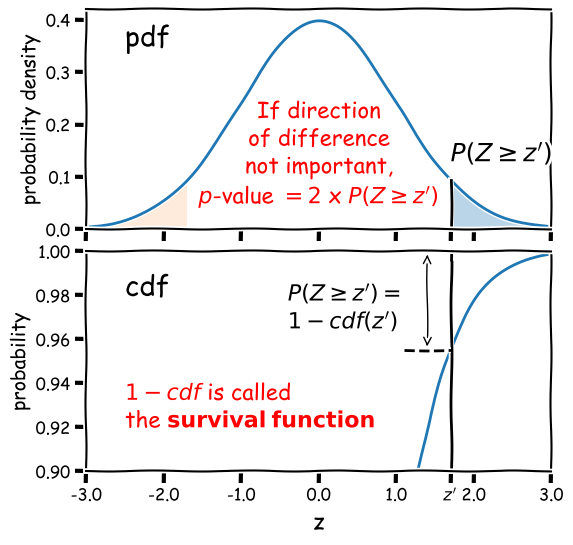

where the subscript \(i\) corresponds to the numerical ordering of a given outcome, i.e. of an element in the sample space. For convenience we can also define the survival function, which is equal to \(P(X\gt x)\). I.e. the survival function is equal to \(1-F(x)\).

The function \(p(x)\) is specified for a given distribution and for the case of discrete random variables, is known as the probability mass function or pmf.
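As an illustration of how the pmf, cdf and survival function relate to one another, here is a short sketch for the score on a fair 6-sided dice. The array names are illustrative only.

```python
import numpy as np

x = np.arange(1, 7)      # possible scores 1 to 6
pmf = np.full(6, 1 / 6)  # p(x) = 1/6 for a fair dice

cdf = np.cumsum(pmf)     # F(x) = P(X <= x): running sum of the pmf
sf = 1 - cdf             # survival function P(X > x)

for xi, Fi, Si in zip(x, cdf, sf):
    print(f"x={xi}: F(x)={Fi:.3f}, P(X>x)={Si:.3f}")
```

The cdf reaches 1 at the largest value in the sample space, where the survival function drops to 0.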

Properties of discrete random variates: mean and variance

Consider a set of repeated samples or draws of a random variable which are independent, which means that the outcome of one does not affect the probability of the outcome of another. A random variate is the quantity that is generated by sampling once from the probability distribution, while a random variable is the notional object able to assume different numerical values. The distinction is similar to that in Python between the value 15 in the assignment x=15 (the variate) and the name x to which the value is assigned (the variable).

The expectation \(E(X)\) is equal to the arithmetic mean of the random variates as the number of sampled variates increases \(\rightarrow \infty\). For a discrete probability distribution it is given by the mean of the distribution function, i.e. the pmf, which is equal to the sum of the product of all possible values of the variable with the associated probabilities:

\[E[X] = \mu = \sum\limits_{i=1}^{n} x_{i}p(x_{i})\]

This quantity \(\mu\) is often just called the mean of the distribution, or the population mean to distinguish it from the sample mean of data, which we will come to later on.

More generally, we can obtain the expectation of some function of \(X\), \(f(X)\):

\[E[f(X)] = \sum\limits_{i=1}^{n} f(x_{i})p(x_{i})\]

It follows that the expectation is a linear operator. So we can also consider the expectation of a scaled sum of variables \(X_{1}\) and \(X_{2}\) (which may themselves have different distributions):

\[E[a_{1}X_{1}+a_{2}X_{2}] = a_{1}E[X_{1}]+a_{2}E[X_{2}]\]

and more generally for a scaled sum of variables \(Y=\sum\limits_{i=1}^{n} a_{i}X_{i}\):

\[E[Y] = \sum\limits_{i=1}^{n} a_{i}E[X_{i}] = \sum\limits_{i=1}^{n} a_{i}\mu_{i}\]

i.e. the expectation for a scaled sum of variates is the scaled sum of their distribution means.

It is also useful to consider the variance, which is a measure of the squared ‘spread’ of the values of the variates around the mean, i.e. it is related to the weighted width of the probability distribution. It is a squared quantity because deviations from the mean may be positive (above the mean) or negative (below the mean). The (population) variance of discrete random variates \(X\) with (population) mean \(\mu\), is the expectation of the function that gives the squared difference from the mean:

\[V[X] = \sigma^{2} = \sum\limits_{i=1}^{n} (x_{i}-\mu)^{2} p(x_{i})\]

It is possible to rearrange things:

\[V[X] = E[(X-\mu)^{2}] = E[X^{2}-2X\mu+\mu^{2}]\]

\[\rightarrow V[X] = E[X^{2}] - E[2X\mu] + E[\mu^{2}] = E[X^{2}] - 2\mu^{2} + \mu^{2}\]

\[\rightarrow V[X] = E[X^{2}] - \mu^{2} = E[X^{2}] - E[X]^{2}\]

In other words, the variance is the expectation of the square minus the square of the expectation. Therefore, for a function of \(X\):

\[V[f(X)] = E[f(X)^{2}] - E[f(X)]^{2}\]

For a sum of independent, scaled random variables, the expected variance is equal to the sum of the individual variances multiplied by their squared scaling factors:

\[V[Y] = \sum\limits_{i=1}^{n} a_{i}^{2} \sigma_{i}^{2}\]
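The rules for the mean and variance of a scaled sum can be checked exactly for a simple case. The sketch below assumes two independent variables, a fair dice score \(X_{1}\) and a fair coin flip \(X_{2}\) (0 or 1), with arbitrarily chosen scaling factors \(a_{1}=2\) and \(a_{2}=3\). The helper `mean_var` is a hypothetical name introduced for this example.

```python
from fractions import Fraction
from itertools import product

def mean_var(pmf):
    """Mean and variance of a discrete distribution given as {value: probability}."""
    mean = sum(x * p for x, p in pmf.items())
    var = sum(x**2 * p for x, p in pmf.items()) - mean**2
    return mean, var

die = {x: Fraction(1, 6) for x in range(1, 7)}  # X_1: fair dice
coin = {0: Fraction(1, 2), 1: Fraction(1, 2)}   # X_2: fair coin
a1, a2 = 2, 3                                   # scaling factors (arbitrary)

mu1, var1 = mean_var(die)
mu2, var2 = mean_var(coin)

# Results predicted by the scaled-sum rules
mean_rule = a1 * mu1 + a2 * mu2
var_rule = a1**2 * var1 + a2**2 * var2

# Direct calculation: build the pmf of Y = a1*X1 + a2*X2,
# using independence so that joint probabilities multiply
pmf_y = {}
for (x1, p1), (x2, p2) in product(die.items(), coin.items()):
    y = a1 * x1 + a2 * x2
    pmf_y[y] = pmf_y.get(y, 0) + p1 * p2
mean_direct, var_direct = mean_var(pmf_y)

print(mean_rule, mean_direct)
print(var_rule, var_direct)
```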

We will consider the case where the variables are correlated (and not independent) in a later Episode.

Test yourself: mean and variance of dice rolls

Starting with the probability distribution of the score (i.e. from 1 to 6) obtained from a roll of a single, fair, 6-sided dice, use equations given above to calculate the expected mean and variance of the total obtained from summing the scores from a roll of three 6-sided dice. You should not need to explicitly work out the probability distribution of the total from the roll of three dice!

Solution

We require \(E[Y]\) and \(V[Y]\), where \(Y=X_{1}+X_{2}+X_{3}\), with each \(X_{i}\) the variate produced by an independent roll of one dice. The dice are fair so \(p(x)=P(X=x)=1/6\) for all \(x\) from 1 to 6. Therefore the expectation for one dice is \(E[X]=\frac{1}{6}\sum\limits_{i=1}^{6} i = 21/6 = 7/2\).

The variance is \(V[X]=E[X^{2}]-\left(E[X]\right)^{2} = \frac{1}{6}\sum\limits_{i=1}^{6} i^{2} - (7/2)^{2} = 91/6 - 49/4 = 35/12 \simeq 2.92\) .

Since the three dice are independent and equally weighted (\(a_{i}=1\)), the mean and variance of the total are the sums of the individual means and variances, i.e. \(E[Y]=3\times 7/2=21/2\) and \(V[Y]=3\times 35/12=35/4\).
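Although the question does not require it, the result can be confirmed by brute force, enumerating all \(6^{3}=216\) equally probable outcomes of three dice. This is a sketch for checking purposes only.

```python
from fractions import Fraction
from itertools import product

values = range(1, 7)
p = Fraction(1, 6)

# Single dice: mean and variance from the pmf
mean_one = sum(x * p for x in values)
var_one = sum(x**2 * p for x in values) - mean_one**2

# Three independent dice, using the sum rules
mean_three = 3 * mean_one
var_three = 3 * var_one

# Brute-force check over every possible roll of three dice
totals = [sum(roll) for roll in product(values, repeat=3)]
p3 = Fraction(1, len(totals))
mean_check = sum(t * p3 for t in totals)
var_check = sum(t**2 * p3 for t in totals) - mean_check**2

print(mean_three, mean_check)  # prints: 21/2 21/2
print(var_three, var_check)    # prints: 35/4 35/4
```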

Probability distributions: Bernoulli and Binomial

A Bernoulli trial is a draw from a sample with only two possibilities (e.g. the colour of sweets drawn, with replacement, from a bag of red and green sweets). The outcomes are mapped on to integer variates \(X=1\) or \(0\), where the outcome mapped to \(X=1\) has probability \(\theta\), so the probability \(p(x)=P(X=x)\) is:

\[p(x)=
\begin{cases}
\theta & \mbox{for }x=1\\
1-\theta & \mbox{for }x=0
\end{cases}\]

and the corresponding Bernoulli distribution function (the pmf) can be written as:

\[p(x\vert \theta) = \theta^{x}(1-\theta)^{1-x} \quad \mbox{for }x=0,1\]

where the notation \(p(x\vert \theta)\) means ‘probability of obtaining x, conditional on model parameter \(\theta\)‘. The vertical line \(\vert\) meaning ‘conditional on’ (i.e. ‘given these existing conditions’) is the usual notation from probability theory, which we use often in this course. A variate drawn from this distribution is denoted as \(X\sim \mathrm{Bern}(\theta)\) (the ‘tilde’ symbol \(\sim\) here means ‘distributed as’). It has \(E[X]=\theta\) and \(V[X]=\theta(1-\theta)\) (which can be calculated using the equations for discrete random variables above).

We can go on to consider what happens if we have repeated Bernoulli trials. For example, if we draw sweets with replacement (i.e. we put the drawn sweet back before drawing again, so as not to change \(\theta\)), and denote a ‘success’ (with \(X=1\)) as drawing a red sweet, we expect the probability of drawing \(red, red, green\) (in that order) to be \(\theta^{2}(1-\theta)\).

However, what if we don’t care about the order and would just like to know the probability of getting a certain number of successes from \(n\) draws or trials (since we count each draw as a sampling of a single variate)? The resulting distribution for the number of successes (\(x\)) as a function of \(n\) and \(\theta\) is called the binomial distribution:

\[p(x\vert n,\theta) = \begin{pmatrix} n \\ x \end{pmatrix} \theta^{x}(1-\theta)^{n-x} = \frac{n!}{(n-x)!x!} \theta^{x}(1-\theta)^{n-x} \quad \mbox{for }x=0,1,2,\ldots,n.\]

Note that the matrix term in brackets is the binomial coefficient, which counts the number of distinct orderings in which the \(x\) successes can occur among the \(n\) trials. For variates distributed as \(X\sim \mathrm{Binom}(n,\theta)\), we have \(E[X]=n\theta\) and \(V[X]=n\theta(1-\theta)\).
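The binomial formula and its mean and variance can be checked directly. The sketch below evaluates the pmf from the formula using Python's built-in `math.comb` for the binomial coefficient and compares it with `scipy.stats.binom.pmf`; the values \(n=8\) and \(\theta=0.6\) are arbitrary choices for illustration.

```python
from math import comb

import numpy as np
import scipy.stats as sps

n, theta = 8, 0.6   # arbitrary example values
x = np.arange(0, n + 1)

# pmf evaluated directly from the binomial formula
pmf_formula = np.array([comb(n, int(k)) * theta**k * (1 - theta)**(n - k) for k in x])
# pmf from scipy for comparison
pmf_scipy = sps.binom.pmf(x, n, p=theta)

mean = np.sum(x * pmf_formula)
var = np.sum(x**2 * pmf_formula) - mean**2

print("Largest difference between formula and scipy:", np.max(np.abs(pmf_formula - pmf_scipy)))
print("Mean:", mean, "expected n*theta =", n * theta)
print("Variance:", var, "expected n*theta*(1-theta) =", n * theta * (1 - theta))
```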

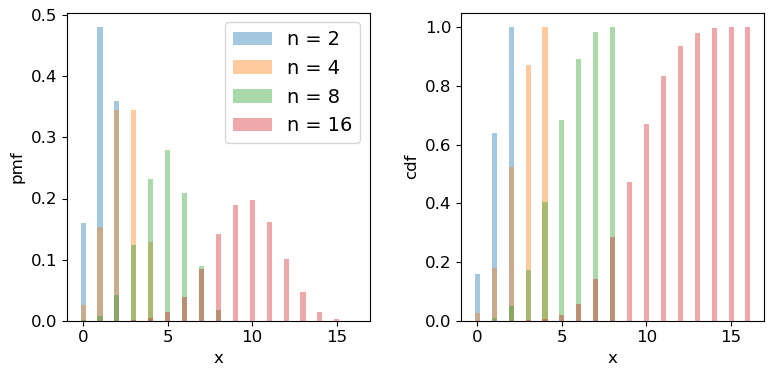

Scipy has the binomial distribution, binom in its stats module. Different properties of the distribution can be accessed via appending the appropriate method to the function call, e.g. sps.binom.pmf, or sps.binom.cdf. Below we plot the pmf and cdf of the distribution for different numbers of trials \(n\). It is formally correct to plot discrete distributions using separated bars, to indicate single discrete values, rather than bins over multiple or continuous values, but sometimes stepped line plots (or even histograms) can be clearer, provided you explain what they show.

## Define theta
theta = 0.6

## Plot as a bar plot
fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))
fig.subplots_adjust(wspace=0.3)
for n in [2,4,8,16]:
    x = np.arange(0,n+1)
    ## Plot the pmf
    ax1.bar(x, sps.binom.pmf(x,n,p=theta), width=0.3, alpha=0.4, label='n = '+str(n))
    ## and the cumulative distribution function:
    ax2.bar(x, sps.binom.cdf(x,n,p=theta), width=0.3, alpha=0.4, label='n = '+str(n))
for ax in (ax1,ax2):
    ax.tick_params(labelsize=12)
    ax.set_xlabel("x", fontsize=12)
    ax.tick_params(axis='x', labelsize=12)
    ax.tick_params(axis='y', labelsize=12)
ax1.set_ylabel("pmf", fontsize=12)
ax2.set_ylabel("cdf", fontsize=12)
ax1.legend(fontsize=14)
plt.show()

Programming example: how many observations do I need?

Imagine that you are developing a research project to study the radio-emitting counterparts of binary neutron star mergers that are detected by a gravitational wave detector. Due to relativistic beaming effects however, radio counterparts are not always detectable (they may be beamed away from us). You know from previous observations and theory that the probability of detecting a radio counterpart from a binary neutron star merger detected by gravitational waves is 0.72. For simplicity you can assume that you know from the gravitational wave signal whether the merger is a binary neutron star system or not, with no false positives.

You need to request a set of observations in advance from a sensitive radio telescope to try to detect the counterparts for each binary merger detected in gravitational waves. You need 10 successful detections of radio emission in different mergers, in order to test a hypothesis about the origin of the radio emission. Observing time is expensive however, so you need to minimise the number of observations of different binary neutron star mergers requested, while maintaining a good chance of success. What is the minimum number of observations of different mergers that you need to request, in order to have a better than 95% chance of being able to test your hypothesis?

(Note: all the numbers here are hypothetical, and are intended just to make an interesting problem!)

Hint

We would like to know the chance of getting at least 10 detections, but the cdf is defined as \(F(x) = P(X\leq x)\). So it would be more useful (and more accurate than calculating 1-cdf for cases with cdf\(\rightarrow 1\)) to use the survival function method (sps.binom.sf).

Solution

We want to know the number of trials \(n\) for which we have a 95% probability of getting at least 10 detections. Remember that the cdf is defined as \(F(x) = P(X\leq x)\), so we need to use the survival function (1-cdf) but for \(x=9\), so that we calculate what we need, which is \(P(X\geq 10)\). We also need to step over increasing values of \(n\) to find the smallest value for which our survival function exceeds 0.95. We will look at the range \(n=\)10-25 (there is no point in going below 10!).

theta = 0.72
for n in range(10,26):
    print("For",n,"observations, chance of 10 or more detections =",sps.binom.sf(9,n,p=theta))

For 10 observations, chance of 10 or more detections = 0.03743906242624486
For 11 observations, chance of 10 or more detections = 0.14226843721973037
For 12 observations, chance of 10 or more detections = 0.3037056744016985
For 13 observations, chance of 10 or more detections = 0.48451538004550243
For 14 observations, chance of 10 or more detections = 0.649052212181364
For 15 observations, chance of 10 or more detections = 0.7780490885758796
For 16 observations, chance of 10 or more detections = 0.8683469020520406
For 17 observations, chance of 10 or more detections = 0.9261375026767835
For 18 observations, chance of 10 or more detections = 0.9605229100485057
For 19 observations, chance of 10 or more detections = 0.9797787381766699
For 20 observations, chance of 10 or more detections = 0.9900228387408534
For 21 observations, chance of 10 or more detections = 0.9952380172098922
For 22 observations, chance of 10 or more detections = 0.9977934546597212
For 23 observations, chance of 10 or more detections = 0.9990043388667172
For 24 observations, chance of 10 or more detections = 0.9995613456019353
For 25 observations, chance of 10 or more detections = 0.999810884619313

So we conclude that we need 18 observations of different binary neutron star mergers, to get a better than 95% chance of obtaining 10 radio detections.
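Rather than reading the answer off the printed list, the minimum number of observations can also be picked out programmatically. This is one possible sketch, which passes an array of trial numbers to `sps.binom.sf` and selects the first value that exceeds the threshold; the names `n_values`, `p_success` and `n_min` are our own.

```python
import numpy as np
import scipy.stats as sps

theta = 0.72
n_values = np.arange(10, 26)

# P(X >= 10) for each candidate number of observations
p_success = sps.binom.sf(9, n_values, p=theta)

# np.argmax returns the index of the first True value in a boolean array
n_min = n_values[np.argmax(p_success > 0.95)]
print("Minimum number of observations needed:", n_min)
```

Note that `np.argmax` returns 0 if no element satisfies the condition, so the search range should be checked to make sure it is wide enough to contain the answer.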

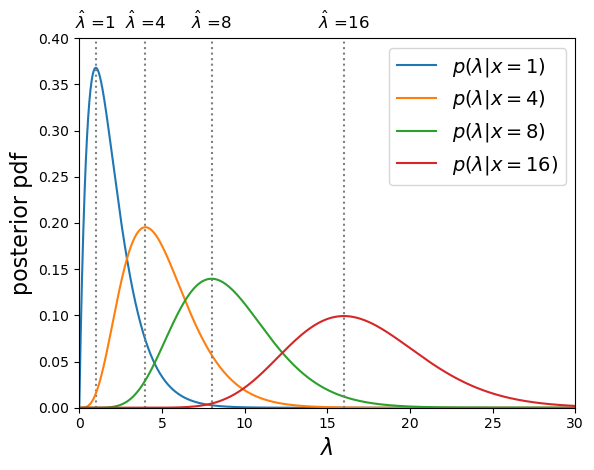

Probability distributions: Poisson

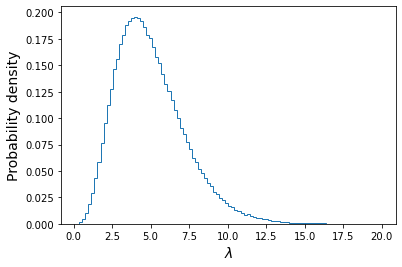

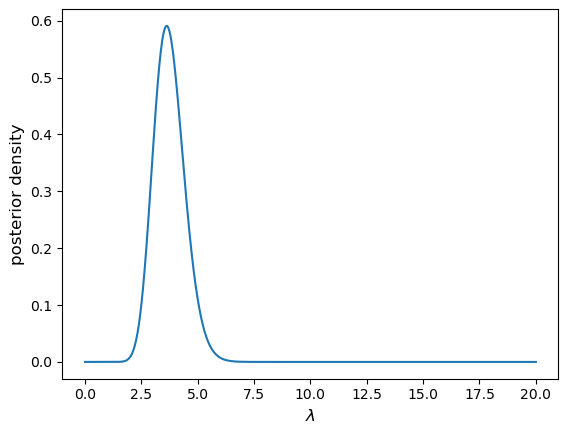

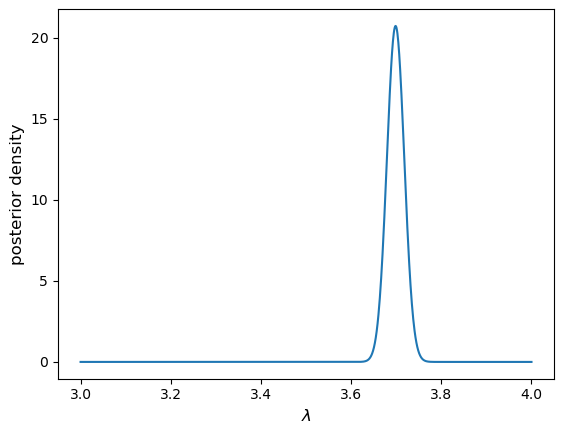

Imagine that we are running a particle detection experiment, e.g. to detect radioactive decays. The particles are detected at random intervals such that the expected mean rate per time interval (i.e. defined over an infinite number of intervals) is \(\lambda\). To work out the distribution of the number of particles \(x\) detected in the time interval, we can imagine splitting the interval into \(n\) equal sub-intervals. Then, if the expected rate \(\lambda\) is constant and the detections are independent of one another, the probability of a detection in any given sub-interval is the same: \(\lambda/n\) (provided that \(n\) is large enough that the chance of more than one detection in a sub-interval is negligible). We can think of the sub-intervals as a set of \(n\) repeated Bernoulli trials, so that the number of particles detected in the overall time-interval follows a binomial distribution with \(\theta = \lambda/n\):

\[p(x \vert \, n,\lambda/n) = \frac{n!}{(n-x)!x!} \frac{\lambda^{x}}{n^{x}} \left(1-\frac{\lambda}{n}\right)^{n-x}.\]

In reality the distribution of possible arrival times in an interval is continuous and so we should make the sub-intervals infinitesimally small, otherwise the number of possible detections would be artificially limited to the finite and arbitrary number of sub-intervals. If we take the limit \(n\rightarrow \infty\) we obtain the following useful results:

\(\frac{n!}{(n-x)!} = \prod\limits_{i=0}^{x-1} (n-i) \rightarrow n^{x}\) and \(\lim\limits_{n\rightarrow \infty} (1-\lambda/n)^{n-x} = e^{-\lambda}\)

where the second limit arises from the result that \(e^{x} = \lim\limits_{n\rightarrow \infty} (1+x/n)^{n}\). Substituting these terms into the expression from the binomial distribution we obtain:

\[p(x \vert \lambda) = \frac{\lambda^{x}e^{-\lambda}}{x!}\]

This is the Poisson distribution, one of the most important distributions in observational science, because it describes counting statistics, i.e. the distribution of the numbers of counts in bins. For example, although we formally derived it here as being the distribution of the number of counts in a fixed interval with mean rate \(\lambda\) (known as a rate parameter), the interval can refer to any kind of binning of counts where individual counts are independent and \(\lambda\) gives the expected number of counts in the bin.
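The limiting argument used in the derivation can be illustrated numerically. The sketch below compares the binomial pmf with \(\theta=\lambda/n\) to the Poisson pmf for increasing numbers of sub-intervals \(n\); the rate \(\lambda=2.8\) and the values of \(n\) are arbitrary choices for illustration.

```python
import numpy as np
import scipy.stats as sps

lam = 2.8              # arbitrary example rate
x = np.arange(0, 15)
pois_pmf = sps.poisson.pmf(x, mu=lam)

max_diffs = []
for n in [10, 100, 10000]:
    # Binomial pmf for n sub-intervals, each with detection probability lam/n
    binom_pmf = sps.binom.pmf(x, n, p=lam/n)
    max_diffs.append(np.max(np.abs(binom_pmf - pois_pmf)))
    print("n =", n, ": largest pmf difference =", max_diffs[-1])
```

The largest difference between the two pmfs should shrink as \(n\) increases, as expected if the Poisson distribution is the \(n\rightarrow \infty\) limit.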

For a random variate distributed as a Poisson distribution, \(X\sim \mathrm{Pois}(\lambda)\), \(E[X] = \lambda\) and \(V[X] = \lambda\). The expected variance leads to the expression that the standard deviation of the counts in a bin is equal to \(\sqrt{\lambda}\), i.e. the square root of the expected value. We will see later on that for a Poisson distributed likelihood, the observed number of counts is an estimator for the expected value. From this, we obtain the famous \(\sqrt{counts}\) error due to counting statistics.
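These properties can be read off directly from scipy, as in the short sketch below, which uses the `stats` and `std` methods of `sps.poisson`. The value \(\lambda=9\) is an arbitrary example.

```python
import numpy as np
import scipy.stats as sps

lam = 9.0   # arbitrary example rate
mean, var = sps.poisson.stats(mu=lam, moments='mv')
std = sps.poisson.std(mu=lam)

print("Mean:", mean, "Variance:", var)
print("Standard deviation:", std, "sqrt(lambda):", np.sqrt(lam))
```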

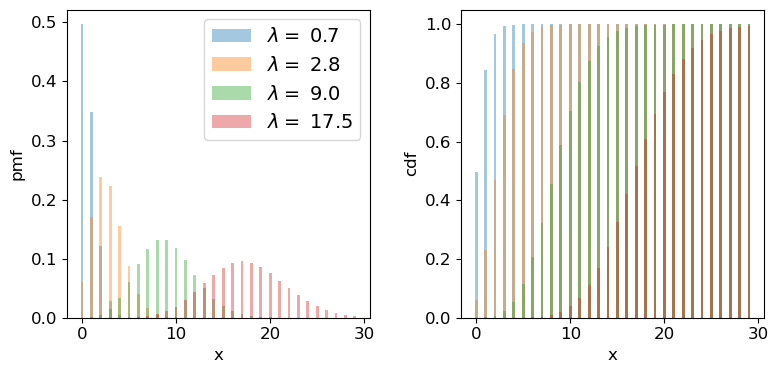

We can plot the Poisson pmf and cdf in a similar way to how we plotted the binomial distribution functions. An important point to bear in mind is that the rate parameter \(\lambda\) does not itself have to be an integer: the underlying rate is likely to be real-valued, but the Poisson distribution produces integer variates drawn from the distribution that is unique to \(\lambda\).

## Plot as a bar plot
fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))
fig.subplots_adjust(wspace=0.3)
## Step through lambda, note that to avoid Python confusion with lambda functions, we make sure we choose
## a different variable name!
for lam in [0.7,2.8,9.0,17.5]:
    x = np.arange(0,30)
    ## Plot the pmf, note that perhaps confusingly, the rate parameter is defined as mu
    ## (raw strings are used for the labels so that the backslash in \lambda is not treated as an escape)
    ax1.bar(x, sps.poisson.pmf(x,mu=lam), width=0.3, alpha=0.4, label=r'$\lambda =$ '+str(lam))
    ## and the cumulative distribution function:
    ax2.bar(x, sps.poisson.cdf(x,mu=lam), width=0.3, alpha=0.4, label=r'$\lambda =$ '+str(lam))
for ax in (ax1,ax2):
    ax.tick_params(labelsize=12)
    ax.set_xlabel("x", fontsize=12)
    ax.tick_params(axis='x', labelsize=12)
    ax.tick_params(axis='y', labelsize=12)
ax1.set_ylabel("pmf", fontsize=12)
ax2.set_ylabel("cdf", fontsize=12)
ax1.legend(fontsize=14)
plt.show()

Programming example: how long until the data are complete?

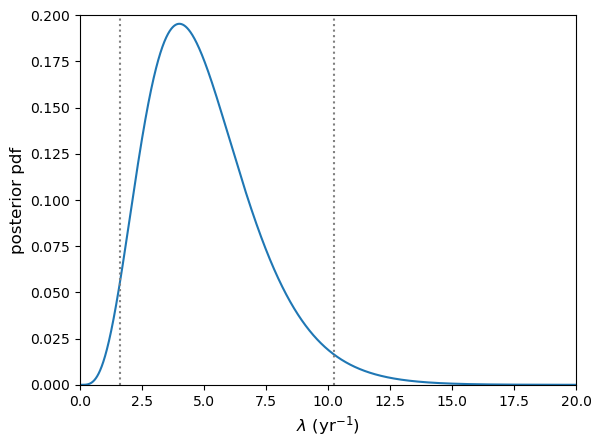

Following your successful proposal for observing time, you’ve been awarded 18 radio observations of neutron star binary mergers (detected via gravitational wave emission) in order to search for radio emitting counterparts. The expected detection rate for gravitational wave events from binary neutron star mergers is 9.5 per year. Assume that you require all 18 observations to complete your proposed research. According to the time award rules for so-called ‘Target of opportunity (ToO)’ observations like this, you will need to resubmit your proposal if it isn’t completed within 3 years. What is the probability that you will need to resubmit your proposal?

Solution

Since binary mergers are independent random events and their mean detection rate (at least for a fixed detector sensitivity and on the time-scale of the experiment!) should be constant in time, the number of merger events in a fixed time interval should follow a Poisson distribution.

Given that we require the full 18 observations, the proposal will need to be resubmitted if there are fewer than 18 gravitational wave events (from binary neutron stars) in 3 years. For this we can use the cdf, remember again that for the cdf \(F(x) = P(X\leq x)\), so we need the cdf for 17 events. The interval we are considering is 3 years not 1 year, so we should multiply the annual detection rate by 3 to get the correct \(\lambda\):

lam = 9.5*3
print("Probability that < 18 observations have been carried out in 3 years =",sps.poisson.cdf(17,lam))

Probability that < 18 observations have been carried out in 3 years = 0.014388006538141204

So there is only a 1.4% chance that we will need to resubmit our proposal.

Test yourself: is this a surprising detection?

Meanwhile, one of your colleagues is part of a team using a sensitive neutrino detector to search for bursts of neutrinos associated with neutron star mergers detected from gravitational wave events. The detector has a constant background rate of 0.03 count/s. In a 1 s interval following a gravitational wave event, the detector detects two neutrinos. Without using Python (i.e. by hand, with a calculator), calculate the probability that you would detect fewer than two neutrinos in that 1 s interval. Assuming that the neutrinos are background detections, should you be surprised about your detection of two neutrinos?

Solution

The probability that you would detect less than two neutrinos is equal to the summed probability that you would detect 0 or 1 neutrino (note that we can sum their probabilities because they are mutually exclusive outcomes). Assuming these are background detections, the rate parameter is given by the background rate \(\lambda=0.03\). Applying the Poisson distribution formula (assuming the random Poisson variable \(x\) is the number of background detections \(N_{\nu}\)) we obtain:

\(P(N_{\nu}<2)=P(N_{\nu}=0)+P(N_{\nu}=1)=\frac{0.03^{0}e^{-0.03}}{0!}+\frac{0.03^{1}e^{-0.03}}{1!}=(1+0.03)e^{-0.03}=0.99956\) to 5 significant figures.

We should be surprised if the detections are just due to the background, because the chance we would see 2 (or more) background neutrinos in the 1-second interval after the GW event is less than one in two thousand! Having answered the question ‘the slow way’, you can also check your result for the probability using the scipy.stats Poisson cdf for \(x=1\).

Note that it is crucial here that the neutrinos arrive within 1 s of the GW event. This is because GW events are rare and the background rate is low enough that it would be unusual to see two background neutrinos appear within 1 s of the rare event which triggers our search. If we had seen two neutrinos only within 100 s of the GW event, we would be much less surprised (the rate expected is 3 count/100 s). We will consider these points in more detail in a later episode, after we have discussed Bayes’ theorem.
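The check against scipy suggested in the solution might look like the following sketch, which also evaluates the 100 s case for comparison. The variable names are our own.

```python
import numpy as np
import scipy.stats as sps

rate = 0.03   # background rate in count/s

# Hand calculation: P(N < 2) = (1 + lambda) * exp(-lambda) for a 1 s interval
p_hand = (1 + rate) * np.exp(-rate)
# The same probability from the Poisson cdf at x = 1
p_cdf = sps.poisson.cdf(1, mu=rate * 1.0)

# Probability of 2 or more background counts in 1 s and in 100 s
p_2plus_1s = sps.poisson.sf(1, mu=rate * 1.0)
p_2plus_100s = sps.poisson.sf(1, mu=rate * 100.0)

print("P(N < 2) by hand:", p_hand, "and from the cdf:", p_cdf)
print("P(N >= 2) in 1 s:", p_2plus_1s)
print("P(N >= 2) in 100 s:", p_2plus_100s)
```

For the 100 s interval the expected number of background counts is 3, so seeing two or more becomes the likely outcome rather than a rare one.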

Generating random variates drawn from discrete probability distributions

So far we have discussed random variables in an abstract sense, in terms of the population, i.e. the underlying probability distribution. But real data is in the form of samples: individual measurements or collections of measurements, so we can get a lot more insight if we can generate ‘fake’ samples from a given distribution.

The scipy.stats distribution functions have a method rvs for generating random variates that are drawn from the distribution (with the given parameter values). You can either freeze the distribution or specify the parameters when you call it. The number of variates generated is set by the size argument and the results are returned to a numpy array. The default seed is the system seed, but if you initialise your own generator (e.g. using rng = np.random.default_rng()) you can pass this to the rvs method using the random_state parameter.

# Generate 10 variates from Binom(4,0.4)

bn_vars = sps.binom.rvs(n=4,p=0.4,size=10)

print("Binomial variates generated:",bn_vars)

# Generate 10 variates from Pois(2.7)

pois_vars = sps.poisson.rvs(mu=2.7,size=10)

print("Poisson variates generated:",pois_vars)

Binomial variates generated: [1 2 0 2 3 2 2 1 2 3]

Poisson variates generated: [6 4 2 2 5 2 2 0 3 5]

Remember that these numbers depend on the starting seed which is almost certainly unique to your computer (unless you pre-select it by passing a bit generator initialised by a specific seed as the argument for the random_state parameter). They will also change each time you run the code cell.
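To make the variates reproducible, a seeded generator can be passed via the `random_state` parameter. In this sketch the seed value 38 is an arbitrary choice, and two generators created from the same seed are used to show that they produce identical sequences.

```python
import numpy as np
import scipy.stats as sps

# Two generators initialised with the same (arbitrary) seed
rng1 = np.random.default_rng(38)
rng2 = np.random.default_rng(38)

a = sps.binom.rvs(n=4, p=0.4, size=10, random_state=rng1)
b = sps.binom.rvs(n=4, p=0.4, size=10, random_state=rng2)
# A second call with rng1 continues the sequence rather than restarting it
c = sps.binom.rvs(n=4, p=0.4, size=10, random_state=rng1)

print("First generator: ", a)
print("Second generator:", b)
print("First generator, second call:", c)
```

The first two arrays should be identical, while the third continues from where the first generator left off.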

How random number generation works

Random number generators use algorithms which are strictly pseudo-random since (at least until quantum-computers become mainstream) no algorithm can produce genuinely random numbers. However, for well-designed modern algorithms the non-randomness is, for practical purposes, undetectable, even in very large samples.

For any distribution, the starting point is to generate uniform random variates in the interval \([0,1]\) (often the interval is half-open \([0,1)\), i.e. exactly 1 is excluded). \(U(0,1)\) is the same distribution as the distribution of percentiles - a fixed range quantile has the same probability of occurring wherever it is in the distribution, i.e. the range 0.9-0.91 has the same probability of occurring as 0.14-0.15. This means that by drawing a \(U(0,1)\) random variate to generate a quantile and putting that in the ppf (the percent point function, i.e. the inverse of the cdf) of the distribution of choice, the generator can produce random variates from that distribution. All this work is done ‘under the hood’ within the scipy.stats distribution function.

It’s important to bear in mind that random number generators work by starting from a ‘seed’ (usually an integer). Each call to the function then generates a new (pseudo-)independent variate, but the sequence of variates is replicated if you start with the same seed. By default, however, seeds are generated by starting from a system seed set using random and continuously updated data in a special file in your computer, so they will differ each time you run the code.
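The quantile-based approach described above can be sketched by hand: draw uniform variates and pass them through the ppf. This is an illustration of the principle only, and scipy may use more efficient specialised algorithms for particular distributions. The seed, sample size and \(\lambda=2.7\) are arbitrary choices.

```python
import numpy as np
import scipy.stats as sps

rng = np.random.default_rng(1234)   # arbitrary seed for reproducibility
lam = 2.7

# Step 1: uniform variates on [0,1), interpreted as random quantiles
u = rng.uniform(0, 1, size=100000)
# Step 2: map the quantiles to Poisson variates with the ppf (inverse cdf)
x = sps.poisson.ppf(u, mu=lam)

# Compare the simulated frequencies with the Poisson pmf
freq = np.bincount(x.astype(int)) / x.size
pmf = sps.poisson.pmf(np.arange(freq.size), mu=lam)
print("Sample mean:", x.mean(), "expected:", lam)
print("Largest difference between frequencies and pmf:", np.max(np.abs(freq - pmf)))
```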

Programming challenge: distributions of discrete measurements

It often happens that we want to take measurements for a sample of objects in order to classify them. E.g. we might observe photons from a particle detector and use them to identify the particle that caused the photon trigger. Or we measure spectra for a sample of astronomical sources, and use them to classify and count the number of each type of object in the sample. In these situations it is useful to know how the number of objects with a given classification - which is a random variate - is distributed.

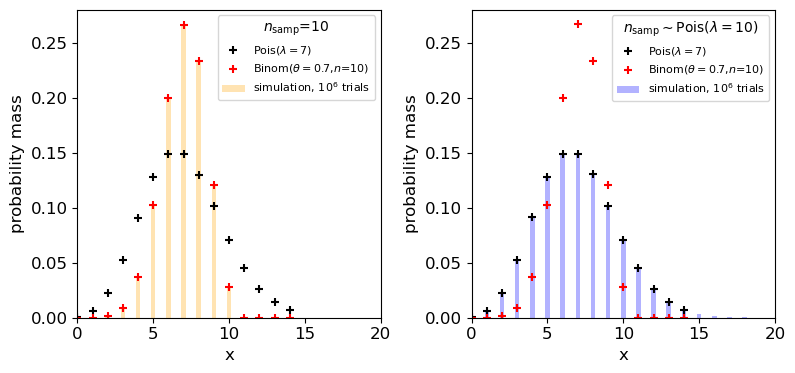

For this challenge, consider the following simple situation. We use X-ray data to classify a sample of \(n_{\rm samp}\) X-ray sources in an old star cluster as either black holes or neutron stars. Assume that the probability of an X-ray source being classified as a black hole is \(\theta=0.7\) and the classification for any source is independent of any other. Then, for a given sample size the number of X-ray sources classified as black holes is a random variable \(X\), with \(X\sim \mathrm{Binom}(n_{\rm samp},\theta)\).

Now imagine two situations for constructing your sample of X-ray sources:

- a. You consider only a fixed, pre-selected sample size \(n_{\rm samp}=10\). E.g. perhaps you consider only the 10 brightest X-ray sources, or the 10 that are closest to the cluster centre (for simplicity, you can assume that the sample selection criterion does not affect the probability that a source is classified as a black hole).

- b. You consider a random sample size of X-ray sources such that \(n_{\rm samp}\) is Poisson distributed with \(n_{\rm samp}\sim \mathrm{Pois}(\lambda=10)\). I.e. the expectation of the sample size is the same as for the fixed sample size, but the sample itself can randomly vary in size, following a Poisson distribution.

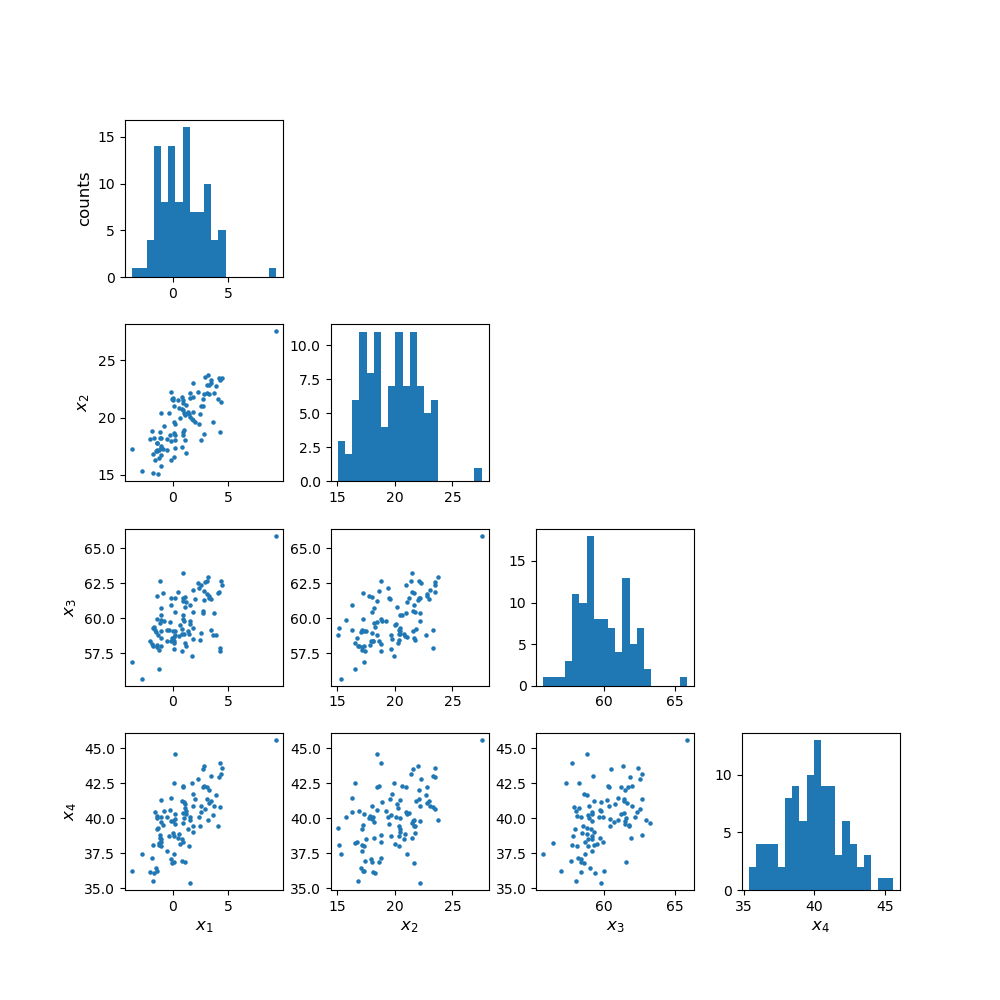

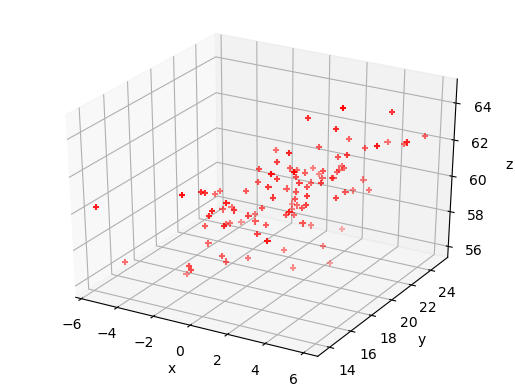

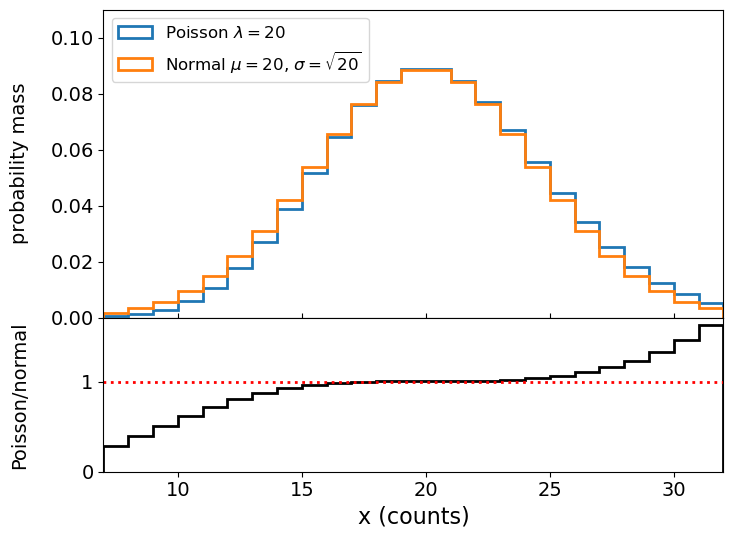

Now use Scipy to simulate a set of \(10^{6}\) samples of measurements of \(X\) for each of these two situations. I.e. first keeping the sample size fixed (then randomly generated) for a million trials, generate binomially distributed variates. Plot the probability mass function of your simulated measurements of \(X\) (this is just a histogram of the number of trials producing each value of \(X\), divided by the number of trials to give a probability for each value of \(X\)) and compare your simulated distributions with the pmfs for binomial and Poisson distributions to see what matches best for each of the two cases of sample size (fixed or Poisson-distributed) considered.

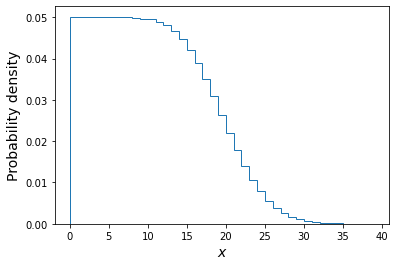

To help you, you should make a plot which looks like this:

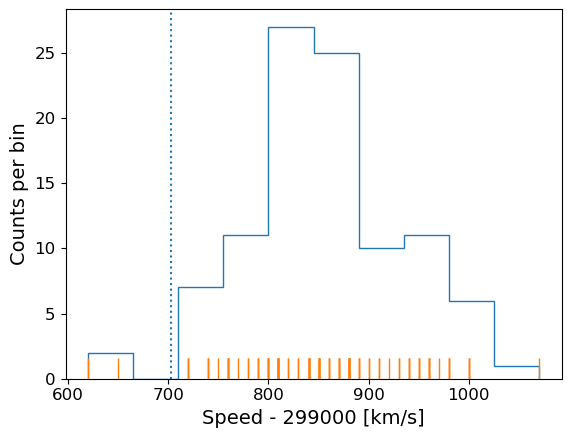

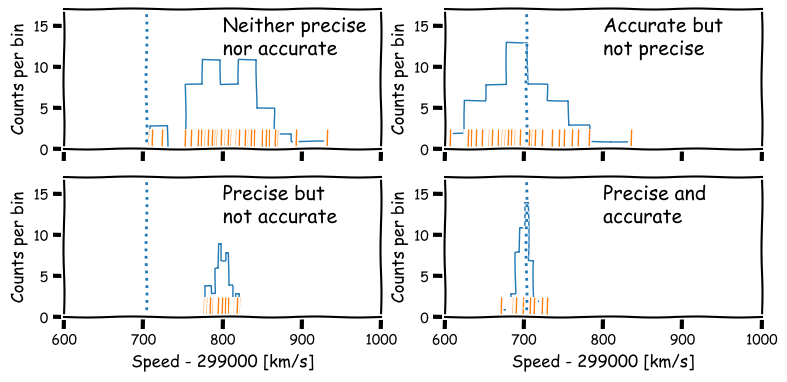

The plot shows that the distribution of the measured number of black holes depends on whether the sample size was fixed or not!

Finally, explain why the distribution of \(X\) for the random Poisson-distributed sample size follows the Poisson distribution for \(\lambda=7\).

Hints

- Instead of using a for loop to generate a million random variates, you can use the size argument with the .rvs method to generate a single array of a million variates.

- For counting the frequency of each value of a random variate, you can use e.g. np.histogram or plt.hist (the latter will plot the histogram directly) but when the values are integers it is simplest to count the numbers of each value using np.bincount. Look it up to see how it is used.

- Use plt.scatter to plot pmf values using markers.
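As a pointer for the second hint, here is a minimal sketch of how `np.bincount` turns an array of non-negative integers into an estimated pmf. The small array of values is made up for illustration and is not related to the challenge data.

```python
import numpy as np

# Made-up integer measurements (for illustration only)
values = np.array([0, 2, 1, 2, 2, 0, 3, 2])

# counts[k] is the number of times the integer k appears in values
counts = np.bincount(values)
# Dividing by the number of measurements gives the estimated pmf
pmf_est = counts / len(values)

print("Counts for x = 0, 1, 2, 3:", counts)
print("Estimated pmf:", pmf_est)
```

The corresponding \(x\) values are simply `np.arange(len(counts))`, which can be used for plotting.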

Key Points

Discrete probability distributions map a sample space of discrete outcomes (categorical or numerical) on to their probabilities.

By assigning an outcome to an ordered sequence of integers corresponding to the discrete variates, functional forms for probability distributions (the pmf or probability mass function) can be defined.

Random variables are drawn from probability distributions. The expectation value (arithmetic mean for an infinite number of sampled variates) is equal to the mean of the distribution function (pmf or pdf).

The variance of a random variable is equal to the expectation of the squared variable minus the squared expectation of the variable.

Sums of scaled random variables have expectation values equal to the sum of scaled expectations of the individual variables and, if the variables are independent, variances equal to the sum of the individual variances multiplied by their squared scaling factors.

Bernoulli trials correspond to a single binary outcome (success/fail) while the number of successes in repeated Bernoulli trials is given by the binomial distribution.

The Poisson distribution can be derived as a limiting case of the binomial distribution and corresponds to the probability of obtaining a certain number of counts in a fixed interval, from a random process with a constant rate.

Random variates can be sampled from Scipy probability distributions using the .rvs method.

The probability distribution of numbers of objects for a given bin/classification depends on whether the original sample size was fixed at a pre-determined value or not.

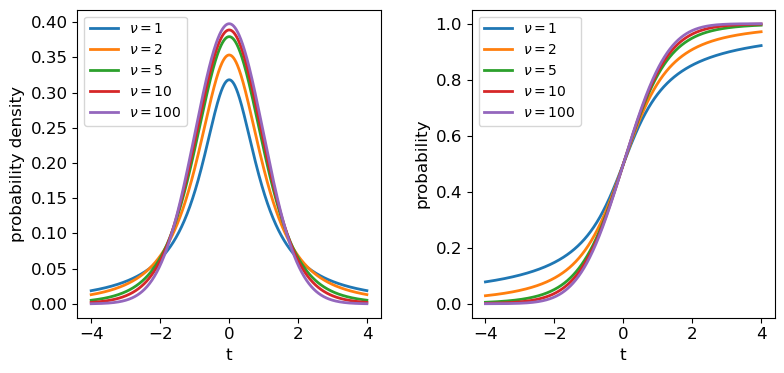

Continuous random variables and their probability distributions

Overview

Teaching: 60 min

Exercises: 60 min

Questions

How are continuous probability distributions defined and described?

What happens to the distributions of sums or means of random data?

Objectives

Learn how the pdf, cdf, quantiles, ppf are defined and how to plot them using scipy.stats distribution functions and methods.

Learn how the expected means and variances of continuous random variables (and functions of them) can be calculated from their probability distributions.

Understand how the shapes of distributions can be described parametrically and empirically.

Learn how to carry out Monte Carlo simulations to demonstrate key statistical results and theorems.

In this episode we will be using numpy, as well as matplotlib’s plotting library. Scipy contains an extensive range of distributions in its ‘scipy.stats’ module, so we will also need to import it. Remember: scipy submodules should be imported separately as required - they may not be accessible if only scipy is imported.

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as sps

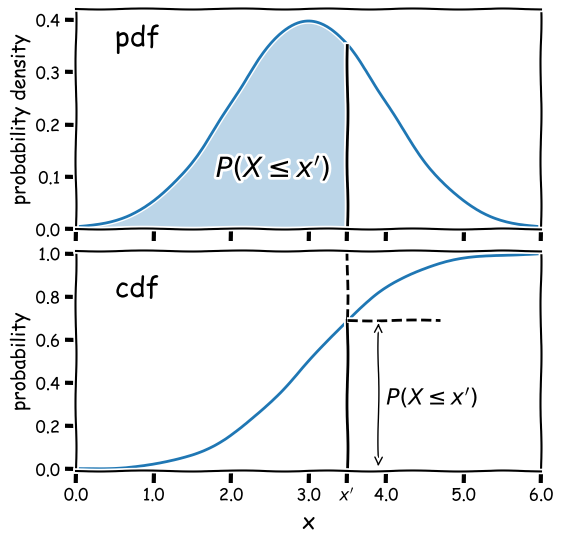

The cdf and pdf of a continuous probability distribution

Consider a continuous random variable \(x\), which follows a fixed, continuous probability distribution. A random variate \(X\) (e.g. a single experimental measurement) is drawn from the distribution. We can define the probability \(P\) that \(X\leq x\) as being the cumulative distribution function (or cdf), \(F(x)\):

\[F(x) = P(X\leq x)\]

We can choose the limiting values of our distribution, but since \(X\) must take on some value (i.e. the definition of an ‘event’ is that something must happen) the distribution must satisfy:

\(\lim\limits_{x\rightarrow -\infty} F(x) = 0\) and \(\lim\limits_{x\rightarrow +\infty} F(x) = 1\)

From these definitions we find that the probability that \(X\) lies in the closed interval \([a,b]\) (note: a closed interval, denoted by square brackets, means that we include the endpoints \(a\) and \(b\)) is:

\[P(a \leq X \leq b) = F(b) - F(a)\]

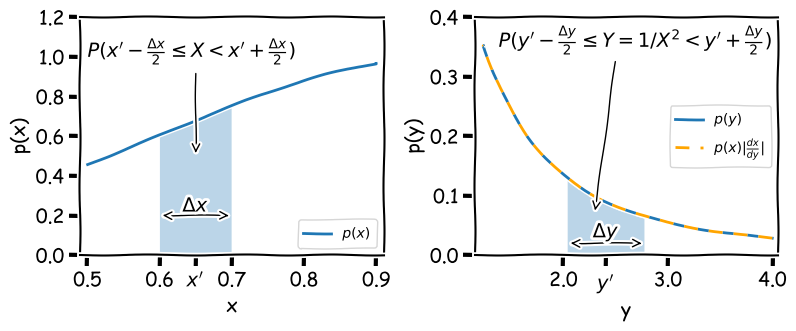

We can then take the limit of a very small interval \([x,x+\delta x]\) to define the probability density function (or pdf), \(p(x)\):

\[\frac{P(x\leq X \leq x+\delta x)}{\delta x} = \frac{F(x+\delta x)-F(x)}{\delta x}\]

\[p(x) = \lim\limits_{\delta x \rightarrow 0} \frac{P(x\leq X \leq x+\delta x)}{\delta x} = \frac{\mathrm{d}F(x)}{\mathrm{d}x}\]

This means that the cdf is the integral of the pdf, e.g.:

\[P(X \leq x) = F(x) = \int^{x}_{-\infty} p(x^{\prime})\mathrm{d}x^{\prime}\]

where \(x^{\prime}\) is a dummy variable. The probability that \(X\) lies in the interval \([a,b]\) is:

\[P(a \leq X \leq b) = F(b) - F(a) = \int_{a}^{b} p(x)\mathrm{d}x\]

and \(\int_{-\infty}^{\infty} p(x)\mathrm{d}x = 1\).
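These relations between the pdf and cdf can be checked numerically. The sketch below uses a standard normal distribution (`sps.norm`) purely as a convenient example, with `scipy.integrate.quad` for the integrals and a central finite difference for the derivative; the interval limits and evaluation point are arbitrary.

```python
import numpy as np
import scipy.stats as sps
from scipy.integrate import quad

# Example distribution (an arbitrary choice): standard normal
dist = sps.norm(loc=0, scale=1)
a, b = -1.0, 2.0

# P(a <= X <= b) from the cdf and from integrating the pdf
p_from_cdf = dist.cdf(b) - dist.cdf(a)
p_from_pdf, _ = quad(dist.pdf, a, b)

# The pdf should integrate to 1 over the whole real line
total, _ = quad(dist.pdf, -np.inf, np.inf)

# The pdf as the derivative of the cdf (central finite difference)
x0, dx = 0.5, 1e-5
slope = (dist.cdf(x0 + dx) - dist.cdf(x0 - dx)) / (2 * dx)

print("P(a <= X <= b):", p_from_cdf, "vs integral of pdf:", p_from_pdf)
print("Integral of pdf over all x:", total)
print("dF/dx at x0:", slope, "vs pdf at x0:", dist.pdf(x0))
```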

Note that the pdf is in some sense the continuous equivalent of the pmf of discrete distributions, but for continuous distributions the function must be expressed as a probability density for a given value of the continuous random variable, instead of the probability used by the pmf. A discrete distribution always shows a finite (or exactly zero) probability for a given value of the discrete random variable, hence the different definitions of the pdf and pmf.

Why use the pdf?

By definition, the cdf can be used to directly calculate probabilities (which is very useful in statistical assessments of data), while the pdf only gives us the probability density for a specific value of \(X\). So why use the pdf? One of the main reasons is that it is generally much easier to calculate the pdf for a particular probability distribution, than it is to calculate the cdf, which requires integration (which may be analytically impossible in some cases!).

Also, the pdf gives the relative probabilities (or likelihoods) for particular values of \(X\) and the model parameters, allowing us to compare the relative likelihood of hypotheses where the model parameters are different. This principle is a cornerstone of statistical inference which we will come to later on.

Properties of continuous random variates: mean and variance

As with variates drawn from discrete distributions, the expectation \(E[X]\) (also known as the mean) for continuous random variates is equal to their arithmetic mean as the number of sampled variates increases \(\rightarrow \infty\). For a continuous probability distribution it is given by the mean of the distribution function, i.e. the pdf:

\[E[X] = \mu = \int_{-\infty}^{+\infty} xp(x)\mathrm{d}x\]

And we can obtain the expectation of some function of \(X\), \(f(X)\):

\[E[f(X)] = \int_{-\infty}^{+\infty} f(x)p(x)\mathrm{d}x\]

while the variance is:

\[V[X] = \sigma^{2} = E[(X-\mu)^{2}] = \int_{-\infty}^{+\infty} (x-\mu)^{2} p(x)\mathrm{d}x\]

and the results for scaled linear combinations of continuous random variates are the same as for discrete random variates, i.e. for a scaled sum of independent random variates \(Y=\sum\limits_{i=1}^{n} a_{i}X_{i}\):

\[E[Y] = \sum\limits_{i=1}^{n} a_{i}E[X_{i}]\]

\[V[Y] = \sum\limits_{i=1}^{n} a_{i}^{2} \sigma_{i}^{2}\]

Taking averages: sample means vs. population means

As an example of summing scaled random variates, it is often necessary to calculate an average quantity rather than the summed value, i.e.:

\[\bar{X} = \frac{1}{n} \sum\limits_{i=1}^{n} X_{i}\]

where \(\bar{X}\) is also known as the sample mean. In this case the scaling factors \(a_{i}=\frac{1}{n}\) for all \(i\) and we obtain:

\[E[\bar{X}] = \frac{1}{n} \sum\limits_{i=1}^{n} \mu_{i}\]

\[V[\bar{X}] = \frac{1}{n^{2}} \sum\limits_{i=1}^{n} \sigma_{i}^{2}\]

and in the special case where the variates are all drawn from the same distribution with mean \(\mu\) and variance \(\sigma^{2}\):

\(E[\bar{X}] = \mu\) and \(V[\bar{X}] = \frac{\sigma^{2}}{n}\),

which leads to the so-called standard error on the sample mean (the standard deviation of the sample mean):

\[\sigma_{\bar{X}} = \sigma/\sqrt{n}\]

It is important to make a distinction between the sample mean for a sample of random variates (\(\bar{X}\)) and the expectation value, also known as the population mean of the distribution the variates are drawn from, in this case \(\mu\). In frequentist statistics, expectation values are the limiting average values for an infinitely sized sample (the ‘population’) drawn from a given distribution, while in Bayesian terms they simply represent the mean of the probability distribution.
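The standard error result can be demonstrated with a simple simulation. The sketch below draws many samples of size \(n\) from a normal distribution, calculates the mean of each sample, and compares the spread of those sample means with \(\sigma/\sqrt{n}\). The choice of distribution, the parameter values and the seed are all arbitrary assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(2024)   # arbitrary seed for reproducibility
mu, sigma = 5.0, 2.0                # population mean and standard deviation
n, n_trials = 25, 20000             # sample size and number of simulated samples

# Each row is one sample of n variates
samples = rng.normal(mu, sigma, size=(n_trials, n))
sample_means = samples.mean(axis=1)

se_sim = sample_means.std(ddof=1)   # spread of the simulated sample means
se_theory = sigma / np.sqrt(n)      # standard error from the formula

print("Mean of the sample means:", sample_means.mean(), "vs population mean:", mu)
print("Standard deviation of the sample means:", se_sim, "vs sigma/sqrt(n):", se_theory)
```

Each individual sample mean differs from \(\mu\), but their average converges on the population mean and their scatter follows the standard error.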

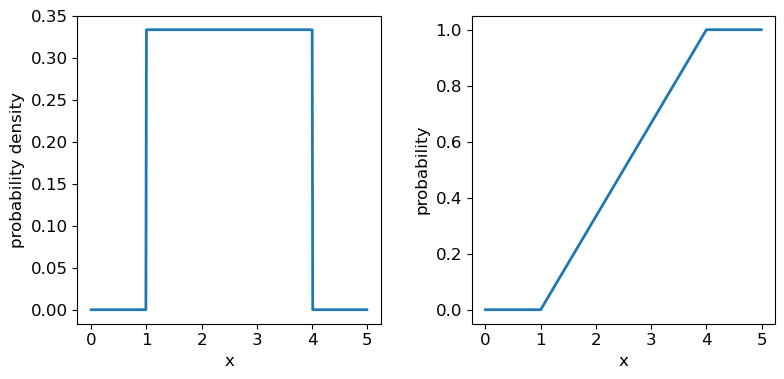

Probability distributions: Uniform

Now we’ll introduce two common probability distributions, and see how to use them in your Python data analysis. We start with the uniform distribution, which has equal probability values defined over some finite interval \([a,b]\) (and zero elsewhere). The pdf is given by:

\[p(x\vert a,b) = 1/(b-a) \quad \mathrm{for} \quad a \leq x \leq b\]

where the notation \(p(x\vert a,b)\) means ‘probability density at x, conditional on model parameters \(a\) and \(b\)‘. For \(X\) drawn from a uniform distribution over the interval \([a,b]\), we write \(X\sim \mathrm{U}(a,b)\). We can use the approach given above to calculate the mean \(E[X] = (b+a)/2\) and variance \(V[X] = (b-a)^{2}/12\).
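The mean and variance quoted for the uniform distribution can be confirmed by carrying out the expectation integrals numerically. This sketch uses `scipy.integrate.quad` with the example values \(a=1\) and \(b=4\); the helper function `uniform_pdf` is our own and is only valid inside \([a,b]\), which is the only range we integrate over.

```python
from scipy.integrate import quad

a, b = 1.0, 4.0

def uniform_pdf(x):
    # pdf of U(a,b), valid for a <= x <= b
    return 1.0 / (b - a)

# E[X] and E[X^2] from the expectation integrals over [a,b]
mean, _ = quad(lambda x: x * uniform_pdf(x), a, b)
mean_sq, _ = quad(lambda x: x**2 * uniform_pdf(x), a, b)
var = mean_sq - mean**2

print("E[X] =", mean, "expected (b+a)/2 =", (b + a) / 2)
print("V[X] =", var, "expected (b-a)^2/12 =", (b - a)**2 / 12)
```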

Distribution parameters: location, scale and shape

When working with probability distributions in Python, it is often useful to ‘freeze’ a distribution by fixing its parameters and defining the frozen distribution as a new function, which saves repeating the parameters each time. The common format for arguments of scipy statistical distributions which represent distribution parameters, corresponds to statistical terminology for the parameters:

- A location parameter (the loc argument in the scipy function) determines the location of the distribution on the \(x\)-axis. Changing the location parameter just shifts the distribution along the \(x\)-axis.

- A scale parameter (the scale argument in the scipy function) determines the width or (more formally) the statistical dispersion of the distribution. Changing the scale parameter just stretches or shrinks the distribution along the \(x\)-axis but does not otherwise alter its shape.

- There may be one or more shape parameters (scipy function arguments may have different names specific to the distribution). These are parameters which do something other than shifting, or stretching/shrinking the distribution, i.e. they change the shape in some way.

Distributions may have all or just one of these parameters, depending on their form. For example, normal distributions are completely described by their location (the mean) and scale (the standard deviation), while exponential distributions (and the related discrete Poisson distribution) may be defined by a single parameter which sets their location as well as width. Some distributions use a rate parameter which is the reciprocal of the scale parameter (exponential/Poisson distributions are an example of this).
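The effect of the location and scale parameters can be illustrated numerically. The sketch below uses arbitrary example values for loc, scale and the rate lam. It shows that a shifted and stretched normal pdf is the standard normal pdf evaluated at \((x-\mathrm{loc})/\mathrm{scale}\) and divided by the scale, and that an exponential distribution with a given rate is set up in scipy through scale = 1/rate.

```python
import numpy as np
import scipy.stats as sps

x = np.linspace(-3.0, 9.0, 7)
loc, scale = 2.0, 1.5  # illustrative location and scale values

# Shifting by loc and stretching by scale reproduces the loc/scale version
pdf_direct = sps.norm.pdf(x, loc=loc, scale=scale)
pdf_from_standard = sps.norm.pdf((x - loc) / scale) / scale
print("pdfs agree:", np.allclose(pdf_direct, pdf_from_standard))

# An exponential distribution with rate lam uses scale = 1/lam in scipy
lam = 0.5
ed = sps.expon(scale=1.0 / lam)
print("Exponential mean and standard deviation:", ed.mean(), ed.std())
```

For the exponential distribution the single parameter sets both the mean and the standard deviation, which are each equal to 1/lam.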

Now let’s freeze a uniform distribution with parameters \(a=1\) and \(b=4\):

## define parameters for our uniform distribution

a = 1

b = 4

print("Uniform distribution with limits",a,"and",b,":")

## freeze the distribution for a given a and b

ud = sps.uniform(loc=a, scale=b-a) # The 2nd parameter is added to a to obtain the upper limit = b

The uniform distribution has a scale parameter \(\lvert b-a \rvert\). This statistical distribution’s location parameter is formally the centre of the distribution, \((a+b)/2\), but for convenience the scipy uniform function uses \(a\) to place a bound on one side of the distribution. We can obtain and plot the pdf and cdf by applying those named methods to the scipy function. Note that we must also use a suitable function (e.g. numpy.arange) to create a sufficiently dense range of \(x\)-values to make the plots over.

## You can plot the probability density function

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

# change the separation between the sub-plots:

fig.subplots_adjust(wspace=0.3)

x = np.arange(0., 5.0, 0.01)

ax1.plot(x, ud.pdf(x), lw=2)

## or you can plot the cumulative distribution function:

ax2.plot(x, ud.cdf(x), lw=2)

for ax in (ax1,ax2):

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

ax1.set_ylabel("probability density", fontsize=12)

ax2.set_ylabel("probability", fontsize=12)

plt.show()

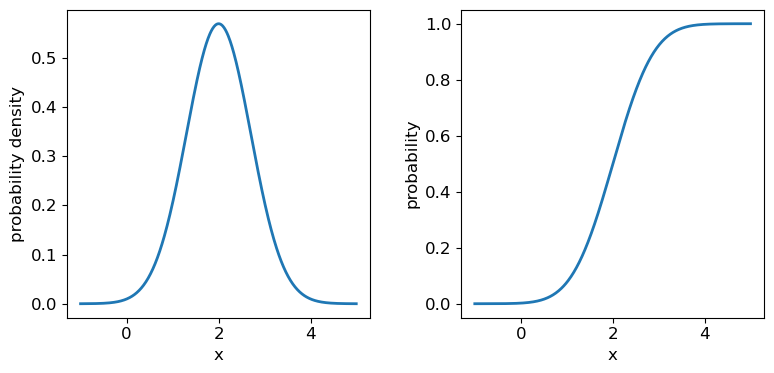

Probability distributions: Normal

The normal distribution is one of the most important in statistical data analysis (for reasons which will become clear) and is also known to physicists and engineers as the Gaussian distribution. The distribution is defined by location parameter \(\mu\) (often just called the mean, but not to be confused with the mean of a statistical sample) and scale parameter \(\sigma\) (also called the standard deviation, but again not to be confused with the sample standard deviation). The pdf is given by:

\[p(x\vert \mu,\sigma)=\frac{1}{\sigma \sqrt{2\pi}} e^{-(x-\mu)^{2}/(2\sigma^{2})}\]

For normally-distributed variates (\(X\sim \mathrm{N}(\mu,\sigma)\)) we obtain the simple results that \(E[X]=\mu\) and \(V[X]=\sigma^{2}\).

It is also common to refer to the standard normal distribution which is the normal distribution with \(\mu=0\) and \(\sigma=1\):

\[p(z\vert 0,1) = \frac{1}{\sqrt{2\pi}} e^{-z^{2}/2}\]

The standard normal is important for many statistical results, including the approach of defining statistical significance in terms of the number of ‘sigmas’ which refers to the probability contained within a range \(\pm z\) on the standard normal distribution (we will discuss this in more detail when we discuss statistical significance testing).
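To make the idea of ‘sigmas’ concrete, the probability contained within \(\pm z\) on the standard normal distribution can be obtained from its cdf. This is a short illustrative sketch; the dictionary name probs is our own choice.

```python
import scipy.stats as sps

# Probability contained within +/- z of the mean of a standard normal
probs = {}
for z in (1, 2, 3):
    probs[z] = sps.norm.cdf(z) - sps.norm.cdf(-z)
    print("Probability within +/-", z, "sigma:", round(probs[z], 4))
```

This gives approximately 0.6827, 0.9545 and 0.9973 for 1, 2 and 3 sigma respectively.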

Programming example: plotting the normal distribution

Now that you have seen the example of a uniform distribution, use the appropriate scipy.stats function to plot the pdf and cdf of the normal distribution, for a mean and standard deviation of your choice (you can freeze the distribution first if you wish, but it is not essential).

Solution

## Define mu and sigma:

mu = 2.0

sigma = 0.7

## Plot the probability density function

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

fig.subplots_adjust(wspace=0.3)

## we will plot a range that covers more than +/- 3 sigma on either side of the mean

x = np.arange(-1.0, 5.0, 0.01)

ax1.plot(x, sps.norm.pdf(x,loc=mu,scale=sigma), lw=2)

## and the cumulative distribution function:

ax2.plot(x, sps.norm.cdf(x,loc=mu,scale=sigma), lw=2)

for ax in (ax1,ax2):

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

ax1.set_ylabel("probability density", fontsize=12)

ax2.set_ylabel("probability", fontsize=12)

plt.show()

It’s useful to note that the pdf is much more distinctive for different functions than the cdf, which (because of how it is defined) always takes on a similar, slanted ‘S’-shape, hence there is some similarity in the form of cdf between the normal and uniform distributions, although their pdfs look radically different.

Probability distributions: Lognormal

Another important continuous distribution is the lognormal distribution. If random variates \(X\) are lognormally distributed, then the variates \(Y=\ln(X)\) are normally distributed.

\[p(x\vert \theta,m,s) = \frac{1}{(x-\theta)s\sqrt{2\pi}}\exp \left(\frac{-\left(\ln [(x-\theta)/m] \right)^{2}}{2s^{2}}\right) \quad x > \theta \mbox{ ; } m, s > 0\]

Here \(\theta\) is the location parameter, \(m\) the scale parameter and \(s\) is the shape parameter. The case for \(\theta=0\) is known as the 2-parameter lognormal distribution while the standard lognormal occurs when \(\theta=0\) and \(m=1\). For the 2-parameter lognormal (with location parameter \(\theta=0\)), \(X\sim \mathrm{Lognormal}(m,s)\) and we find \(E[X]=m\exp(s^{2}/2)\) and \(V[X]=m^{2}[\exp(s^{2})-1]\exp(s^{2})\).
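The mean and variance formulae for the 2-parameter lognormal can be compared with the values scipy returns, using loc for \(\theta\), scale for \(m\) and the shape argument s for \(s\). The sketch below uses arbitrary example values \(m=2\) and \(s=0.5\) and an arbitrary random seed. It also checks that the logarithms of lognormal variates have mean \(\ln(m)\) and standard deviation \(s\), as expected if they are normally distributed.

```python
import numpy as np
import scipy.stats as sps

m, s = 2.0, 0.5  # illustrative scale and shape parameters
lnd = sps.lognorm(s, loc=0, scale=m)  # 2-parameter lognormal (theta = 0)

# Compare scipy's mean and variance with the formulae in the text
mean_formula = m * np.exp(s**2 / 2)
var_formula = m**2 * (np.exp(s**2) - 1) * np.exp(s**2)
print("Mean:", lnd.mean(), "formula:", mean_formula)
print("Variance:", lnd.var(), "formula:", var_formula)

# The logarithm of lognormal variates should have mean ln(m) and std s
rng = np.random.default_rng(1)  # arbitrary seed
y = np.log(lnd.rvs(size=100000, random_state=rng))
print("Mean of ln(X):", y.mean(), "expected:", np.log(m))
print("Standard deviation of ln(X):", y.std(ddof=1), "expected:", s)
```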

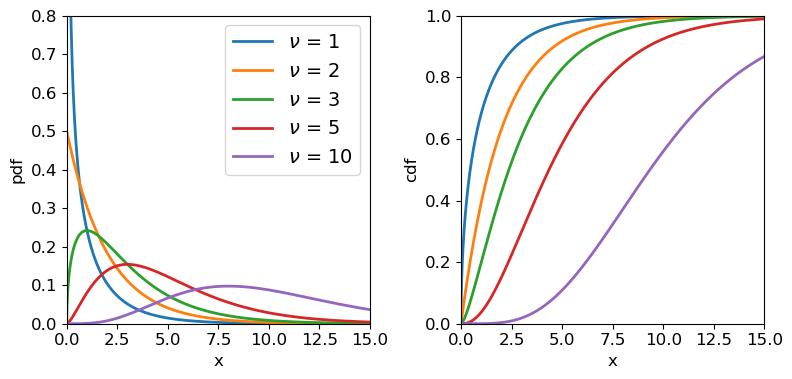

Moments: mean, variance, skew, kurtosis

The mean and variance of a distribution of random variates are examples of statistical moments. The first raw moment is the mean \(\mu=E[X]\). By subtracting the mean when calculating expectations and taking higher integer powers, we obtain the central moments:

\[\mu_{n} = E[(X-\mu)^{n}]\]

which for a continuous probability distribution may be calculated as:

\[\mu_{n} = \int_{-\infty}^{+\infty} (x-\mu)^{n} p(x)\mathrm{d}x\]

The central moments may sometimes be standardised by dividing by \(\sigma^{n}\), to obtain a dimensionless quantity. The first central moment is zero by definition. The second central moment is the variance (\(\sigma^{2}\)). Although the mean and variance are by far the most common, you will sometimes encounter the third and fourth standardised central moments, known respectively as the skewness and kurtosis.

Skewness measures how skewed (asymmetric) the distribution is around the mean. Positively skewed (or ‘right-skewed’) distributions are more extended to larger values of \(x\), while negatively skewed (‘left-skewed’) distributions are more extended to smaller (or more negative) values of \(x\). For a symmetric distribution such as the normal or uniform distributions, the skewness is zero. Kurtosis measures how ‘heavy-tailed’ the distribution is, i.e. how strong the tail is relative to the peak of the distribution. The excess kurtosis, equal to the kurtosis minus 3, is often used, so that the normal distribution has excess kurtosis equal to zero by definition.
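The definitions above can be applied directly by integrating \((x-\mu)^{n}p(x)\) numerically and dividing by \(\sigma^{n}\). The following sketch does this for a standard lognormal distribution with \(s=0.5\) (an arbitrary example) and compares the results with the values scipy reports. The helper standardised_moment is defined here for illustration and is not a scipy function.

```python
import numpy as np
import scipy.stats as sps
from scipy import integrate

dist = sps.lognorm(0.5, loc=0, scale=1)  # illustrative skewed distribution
mu = dist.mean()
sigma = dist.std()

def standardised_moment(n):
    """n-th central moment divided by sigma^n (hypothetical helper)."""
    integrand = lambda x: (x - mu)**n * dist.pdf(x)
    # The lognormal pdf is zero for x <= 0, so integrate from 0 to infinity
    val, _ = integrate.quad(integrand, 0, np.inf)
    return val / sigma**n

skew_num = standardised_moment(3)
exkurt_num = standardised_moment(4) - 3  # excess kurtosis
skew_sp, exkurt_sp = dist.stats(moments='sk')

print("Skewness: integral =", skew_num, ", scipy =", float(skew_sp))
print("Excess kurtosis: integral =", exkurt_num, ", scipy =", float(exkurt_sp))
```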

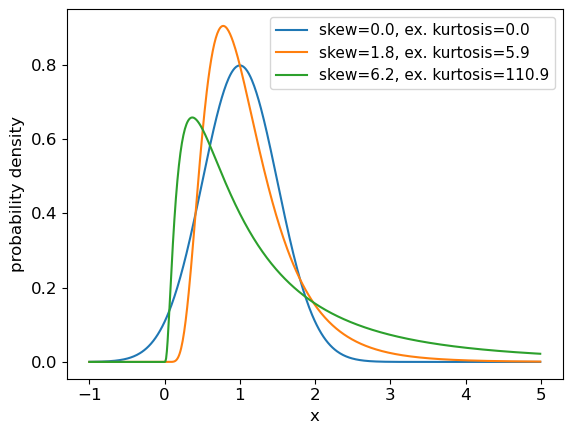

Programming example: skewness and kurtosis

We can return the moments of a scipy distribution using the stats method with the argument moments='sk' to return only the skew and excess kurtosis. In a single plot panel, plot the pdfs of the following distributions and give the skew and kurtosis of the distributions as labels in the legend.

- A normal distribution with \(\mu=1\) and \(\sigma=0.5\).

- A 2-parameter lognormal distribution with \(s=0.5\) and \(m=1\).

- A 2-parameter lognormal distribution with \(s=1\) and \(m=1\).

Solution

# First set up the frozen distributions:

ndist = sps.norm(loc=1,scale=0.5)

lndist1 = sps.lognorm(loc=0,scale=1,s=0.5)

lndist2 = sps.lognorm(loc=0,scale=1,s=1)

x = np.arange(-1.0, 5.0, 0.01) # x-values to plot the pdfs over

plt.figure()

for dist in [ndist,lndist1,lndist2]:

    skvals = dist.stats(moments='sk') # The stats method outputs an array with the corresponding moments

    label_txt = r"skew="+str(np.round(skvals[0],1))+", ex. kurtosis="+str(np.round(skvals[1],1))

    plt.plot(x,dist.pdf(x),label=label_txt)

plt.xlabel("x", fontsize=12)

plt.ylabel("probability density", fontsize=12)

plt.tick_params(axis='x', labelsize=12)

plt.tick_params(axis='y', labelsize=12)

plt.legend(fontsize=11)

plt.show()

Quantiles and median values

It is often useful to be able to calculate the quantiles (such as percentiles or quartiles) of a distribution, that is, what value of \(x\) corresponds to a fixed interval of integrated probability? We can obtain these from the inverse function of the cdf (\(F(x)\)). E.g. for the quantile \(\alpha\):

\[F(x_{\alpha}) = \int^{x_{\alpha}}_{-\infty} p(x)\mathrm{d}x = \alpha \Longleftrightarrow x_{\alpha} = F^{-1}(\alpha)\]

The value of \(x\) corresponding to \(\alpha=0.5\), i.e. the fiftieth percentile value, which contains half the total probability below it, is known as the median. Note that positively skewed distributions typically show mean values that exceed the median, while negatively skewed distributions typically show mean values which are less than the median (for symmetric distributions the mean and median are the same).

Note that \(F^{-1}\) denotes the inverse function of \(F\), not \(1/F\)! This is called the percent point function (or ppf). To obtain a given quantile for a distribution we can use the scipy.stats method ppf applied to the distribution function. For example:

## Print the 30th percentile of a normal distribution with mu = 3.5 and sigma=0.3

print("30th percentile:",sps.norm.ppf(0.3,loc=3.5,scale=0.3))

## Print the median (50th percentile) of the distribution

print("Median (via ppf):",sps.norm.ppf(0.5,loc=3.5,scale=0.3))

## There is also a median method to quickly return the median for a distribution:

print("Median (via median method):",sps.norm.median(loc=3.5,scale=0.3))

30th percentile: 3.342679846187588

Median (via ppf): 3.5

Median (via median method): 3.5
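Because the ppf is the inverse of the cdf, passing its output back through the cdf should recover the original quantile. The sketch below checks this for a few arbitrary quantiles of the same normal distribution, and also compares the mean and median of a positively skewed (lognormal) distribution. The variable names are our own.

```python
import numpy as np
import scipy.stats as sps

nd_q = sps.norm(loc=3.5, scale=0.3)
alphas = np.array([0.05, 0.3, 0.5, 0.95])  # illustrative quantiles

# ppf maps a quantile to x; cdf maps x back to the quantile
x_alpha = nd_q.ppf(alphas)
roundtrip = nd_q.cdf(x_alpha)
print("x values:", x_alpha)
print("cdf(ppf(alpha)) recovers alpha:", np.allclose(roundtrip, alphas))

# For a positively skewed distribution the mean exceeds the median
ld = sps.lognorm(1.0, loc=0, scale=1)
print("Lognormal mean:", ld.mean(), " median:", ld.median())
```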

Intervals

It is sometimes useful to be able to quote an interval, containing some fraction of the probability (and usually centred on the median) as a ‘typical’ range expected for the random variable \(X\). We will discuss intervals on probability distributions further when we discuss confidence intervals on parameters. For now, we note that the .interval method can be used to obtain a given interval centred on the median. For example, the Interquartile Range (IQR) is often quoted as it marks the interval containing half the probability, between the upper and lower quartiles (i.e. from 0.25 to 0.75):

## Print the IQR for a normal distribution with mu = 3.5 and sigma=0.3

print("IQR:",sps.norm.interval(0.5,loc=3.5,scale=0.3))

IQR: (3.2976530749411754, 3.7023469250588246)

So for the normal distribution, with \(\mu=3.5\) and \(\sigma=0.3\), half of the probability is contained in the range \(3.5\pm0.202\) (to 3 decimal places).
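An interval centred on the median and containing a fraction frac of the probability runs between the quantiles \(0.5-\mathrm{frac}/2\) and \(0.5+\mathrm{frac}/2\), so the same result can be obtained from the ppf. A short sketch, using our own variable names:

```python
import scipy.stats as sps

nd_i = sps.norm(loc=3.5, scale=0.3)
frac = 0.5  # fraction of probability contained in the interval (the IQR)

# The interval method, with the fraction passed as the first argument
lower, upper = nd_i.interval(frac)

# The equivalent quantiles from the ppf
lower_ppf = nd_i.ppf(0.5 - frac / 2)
upper_ppf = nd_i.ppf(0.5 + frac / 2)

print("interval method:", (lower, upper))
print("via ppf:", (lower_ppf, upper_ppf))
print("Probability contained:", nd_i.cdf(upper) - nd_i.cdf(lower))
```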

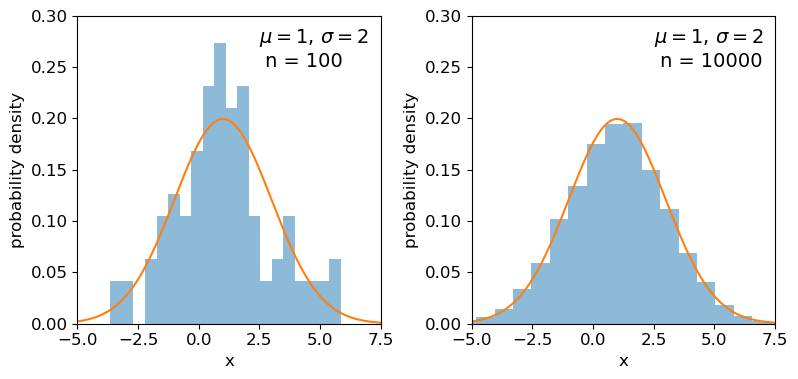

The distributions of random numbers

In the previous episode we saw how to use Python to generate random numbers and calculate statistics or do simple statistical experiments with them (e.g. looking at the covariance as a function of sample size). We can also generate a larger number of random variates and compare the resulting sample distribution with the pdf of the distribution which generated them. We show this for the uniform and normal distributions below:

mu = 1

sigma = 2

## freeze the distribution for the given mean and standard deviation

nd = sps.norm(mu, sigma)

## Generate a large and a small sample

sizes=[100,10000]

x = np.arange(-5.0, 8.0, 0.01)

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

fig.subplots_adjust(wspace=0.3)

for i, ax in enumerate([ax1,ax2]):

    nd_rand = nd.rvs(size=sizes[i])

    # Make the histogram semi-transparent

    ax.hist(nd_rand, bins=20, density=True, alpha=0.5)

    ax.plot(x,nd.pdf(x))

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

    ax.set_ylabel("probability density", fontsize=12)

    ax.set_xlim(-5,7.5)

    ax.set_ylim(0,0.3)

    # Raw strings avoid invalid escape sequences in the LaTeX labels

    ax.text(2.5,0.25,

            r"$\mu=$"+str(mu)+r", $\sigma=$"+str(sigma)+"\n n = "+str(sizes[i]),fontsize=14)

plt.show()

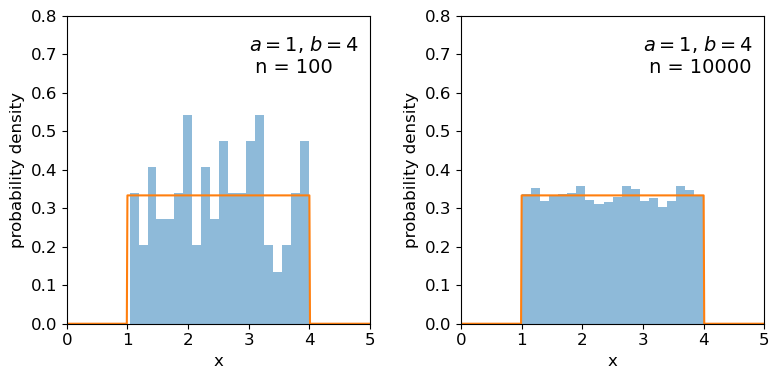

## Repeat for the uniform distribution

a = 1

b = 4

## freeze the distribution for given a and b

ud = sps.uniform(loc=a, scale=b-a)

sizes=[100,10000]

x = np.arange(0.0, 5.0, 0.01)

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

fig.subplots_adjust(wspace=0.3)

for i, ax in enumerate([ax1,ax2]):

    ud_rand = ud.rvs(size=sizes[i])

    ax.hist(ud_rand, bins=20, density=True, alpha=0.5)

    ax.plot(x,ud.pdf(x))

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

    ax.set_ylabel("probability density", fontsize=12)

    ax.set_xlim(0,5)

    ax.set_ylim(0,0.8)

    ax.text(3.0,0.65,

            "$a=$"+str(a)+", $b=$"+str(b)+"\n n = "+str(sizes[i]),fontsize=14)

plt.show()

Clearly the sample distributions for 100 random variates are much more scattered compared to the 10000 random variates case (and the ‘true’ distribution).

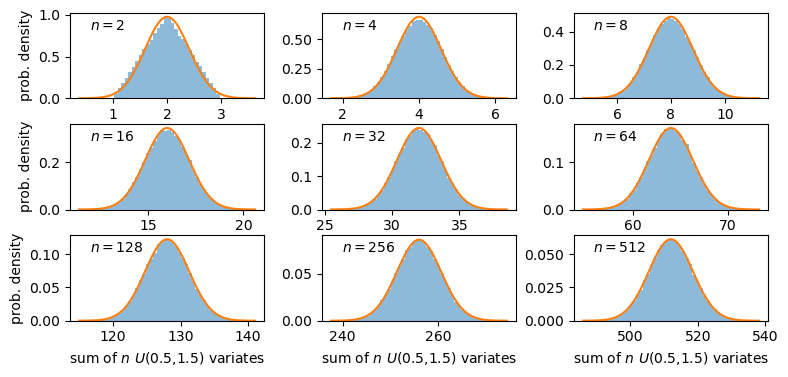

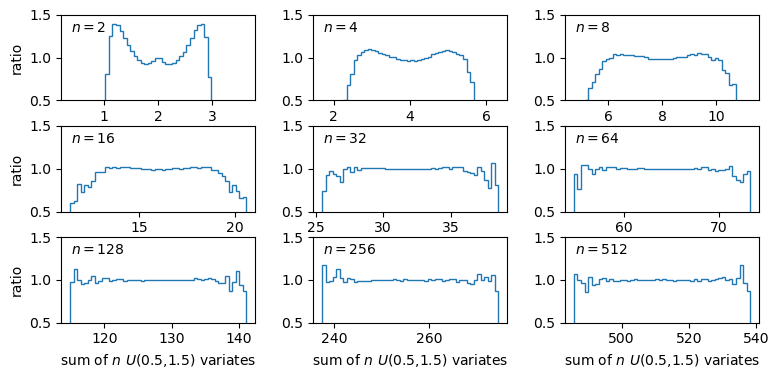

The distributions of sums of uniform random numbers

Now we will go a step further and run a similar experiment but plot the histograms of sums of random numbers instead of the random numbers themselves. We will start with sums of uniform random numbers which are all drawn from the same, uniform distribution. To plot the histogram we need to generate a large number (ntrials) of samples of size given by nsamp, and step through a range of nsamp to make a histogram of the distribution of summed sample variates. Since we know the mean and variance of the distribution our variates are drawn from, we can calculate the expected variance and mean of our sum using the approach for sums of random variables described in the previous episode.
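Before plotting any histograms, the expected mean and variance of the sum can be checked numerically. For a sum of nsamp independent variates drawn from the same distribution, the means add and the variances add, so the sum has mean \(n(a+b)/2\) and variance \(n(b-a)^{2}/12\) for \(n\) uniform variates. The sketch below assumes illustrative values of nsamp and ntrials and an arbitrary random seed.

```python
import numpy as np
import scipy.stats as sps

a, b = 1.0, 4.0
ud_sum = sps.uniform(loc=a, scale=b - a)

nsamp = 5        # number of variates added together in each sum (illustrative)
ntrials = 50000  # number of sums to generate (illustrative)
rng = np.random.default_rng(7)  # arbitrary seed

# Each row is one sample of nsamp variates; sum along the row
sums = ud_sum.rvs(size=(ntrials, nsamp), random_state=rng).sum(axis=1)

# Means and variances of independent variates add
expected_mean = nsamp * ud_sum.mean()
expected_var = nsamp * ud_sum.var()

print("Mean of sums:", sums.mean(), "expected:", expected_mean)
print("Variance of sums:", sums.var(ddof=1), "expected:", expected_var)
```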