Programming Style

Overview

Teaching: 15 min

Exercises: 15 minQuestions

How can I make my programs more readable?

How do most programmers format their code?

Objectives

Provide sound justifications for basic rules of coding style.

Refactor one-page programs to make them more readable and justify the changes.

Use Python community coding standards (PEP-8).

Coding style

Coding style helps us to understand the code better. It helps to maintain and change the code. Python relies strongly on coding style, as we may notice by the indentation we apply to lines to define different blocks of code. Python proposes a standard style through one of its first Python Enhancement Proposals (PEP), PEP8, and highlight the importance of readability in the Zen of Python.

We highlight some points:

- Document your code

- Use clear, meaningful variable names

- Indents should be with 4 whitespaces, not a tab - note that IDEs and Jupyter notebooks will automatically convert tabs to whitespaces, but check that this is the case!

- Python lines should be shorter than 79 characters

- No deeply indented code

- Variables in small case (

mass = 45) - Global variables in uppercase if your are using them (e.g.

OUTPUT = False) - Avoid builtin names

- Use underscores for readability (

def cal_density():) - Classes (see later) in camel case (

RingedPlanet) - Always avoid commented out code (at least in the final stages of development)

- Use descriptive names for variables (e.g. not

l2 = [])

Follow standard Python style in your code.

- PEP8:

a style guide for Python that discusses topics such as how you should name variables,

how you should use indentation in your code,

how you should structure your

importstatements, etc. Adhering to PEP8 makes it easier for other Python developers to read and understand your code, and to understand what their contributions should look like. The PEP8 application and Python library can check your code for compliance with PEP8. - Google style guide on Python supports the use of PEP8 and extend the coding style to more specific structure of a Python code, which may be interesting also to follow. Google’s formatting application is called “yapf”.

Reminder: use docstrings to provide builtin help.

- If the first thing in a function is a character string that is not assigned directly to a variable, Python attaches it to the function as the builtin help variable.

- Called a docstring (short for “documentation string”).

def average(values):

"Return average of values, or None if no values are supplied."

if len(values) == 0:

return None

return sum(values) / len(values)

help(average)

Help on function average in module __main__:

average(values)

Return average of values, or None if no values are supplied.

Multiline Strings

Often use multiline strings for documentation. These start and end with three quote characters (either single or double) and end with three matching characters.

"""This string spans multiple lines. Blank lines are allowed."""

What Will Be Shown?

Highlight the lines in the code below that will be available as help. Are there lines that should be made available, but won’t be? Will any lines produce a syntax error or a runtime error?

"Find maximum edit distance between multiple sequences." # This finds the maximum distance between all sequences. def overall_max(sequences): '''Determine overall maximum edit distance.''' highest = 0 for left in sequences: for right in sequences: '''Avoid checking sequence against itself.''' if left != right: this = edit_distance(left, right) highest = max(highest, this) # Report. return highest

Document This

Turn the comment on the following function into a docstring and check that

helpdisplays it properly.def middle(a, b, c): # Return the middle value of three. # Assumes the values can actually be compared. values = [a, b, c] values.sort() return values[1]Solution

def middle(a, b, c): '''Return the middle value of three. Assumes the values can actually be compared.''' values = [a, b, c] values.sort() return values[1]

Clean Up This Code

- Read this short program and try to predict what it does.

- Run it: how accurate was your prediction?

- Refactor the program to make it more readable. Remember to run it after each change to ensure its behavior hasn’t changed.

- Compare your rewrite with your neighbor’s. What did you do the same? What did you do differently, and why?

n = 10 s = 'et cetera' print(s) i = 0 while i < n: # print('at', j) new = '' for j in range(len(s)): left = j-1 right = (j+1)%len(s) if s[left]==s[right]: new += '-' else: new += '*' s=''.join(new) print(s) i += 1Solution

Here’s one solution.

def string_machine(input_string, iterations): """ Generates iteratively marked strings for the same adjacent characters Takes input_string and generates a new string with -'s and *'s corresponding to characters that have identical adjacent characters or not, respectively. Iterates through this procedure with the resultant strings for the supplied number of iterations. """ print(input_string) input_string_length = len(input_string) old = input_string for i in range(iterations): new = '' # iterate through characters in previous string for j in range(input_string_length): left = j-1 right = (j+1) % input_string_length # ensure right index wraps around if old[left] == old[right]: new += '-' else: new += '*' print(new) # store new string as old old = new string_machine('et cetera', 10)et cetera *****-*** ----*-*-- ---*---*- --*-*-*-* **------- ***-----* --**---** *****-*** ----*-*-- ---*---*-

Key Points

Follow standard Python style in your code.

Use docstrings to provide builtin help.

Errors and Exceptions

Overview

Teaching: 30 min

Exercises: 0 minQuestions

How does Python report errors?

How can I handle errors in Python programs?

Objectives

To be able to read a traceback, and determine where the error took place and what type it is.

To be able to describe the types of situations in which syntax errors, indentation errors, name errors, index errors, and missing file errors occur.

Every programmer encounters errors, both those who are just beginning, and those who have been programming for years. Encountering errors and exceptions can be very frustrating at times, and can make coding feel like a hopeless endeavour. However, understanding what the different types of errors are and when you are likely to encounter them can help a lot. Once you know why you get certain types of errors, they become much easier to fix.

Errors in Python have a very specific form, called a traceback. Let’s examine one:

# This code has an intentional error. You can type it directly or

# use it for reference to understand the error message below.

def favorite_ice_cream():

ice_creams = [

'chocolate',

'vanilla',

'strawberry'

]

print(ice_creams[3])

favorite_ice_cream()

IndexError Traceback (most recent call last)

<ipython-input-7-e5b074b4d20d> in <module>

9 print(ice_creams[3])

10

---> 11 favorite_ice_cream()

<ipython-input-7-e5b074b4d20d> in favorite_ice_cream()

7 'strawberry'

8 ]

----> 9 print(ice_creams[3])

10

11 favorite_ice_cream()

IndexError: list index out of range

This particular traceback has two levels. You can determine the number of levels by looking for the number of arrows on the left hand side. In this case:

-

The first shows code from the cell above, with an arrow pointing to Line 8 (which is

favorite_ice_cream()). -

The second shows some code in the function

favorite_ice_cream, with an arrow pointing to Line 6 (which isprint(ice_creams[3])).

The last level is the actual place where the error occurred.

The other level(s) show what function the program executed to get to the next level down.

So, in this case, the program first performed a

function call to the function favorite_ice_cream.

Inside this function,

the program encountered an error on Line 6, when it tried to run the code print(ice_creams[3]).

Long Tracebacks

Sometimes, you might see a traceback that is very long – sometimes they might even be 20 levels deep! This can make it seem like something horrible happened, but the length of the error message does not reflect severity, rather, it indicates that your program called many functions before it encountered the error. Most of the time, the actual place where the error occurred is at the bottom-most level, so you can skip down the traceback to the bottom.

So what error did the program actually encounter?

In the last line of the traceback,

Python helpfully tells us the category or type of error (in this case, it is an IndexError)

and a more detailed error message (in this case, it says “list index out of range”).

If you encounter an error and don’t know what it means, it is still important to read the traceback closely. That way, if you fix the error, but encounter a new one, you can tell that the error changed. Additionally, sometimes knowing where the error occurred is enough to fix it, even if you don’t entirely understand the message.

If you do encounter an error you don’t recognize, try looking at the official documentation on errors. However, note that you may not always be able to find the error there, as it is possible to create custom errors. In that case, hopefully the custom error message is informative enough to help you figure out what went wrong.

Syntax Errors

When you forget a colon at the end of a line,

accidentally add one space too many when indenting under an if statement,

or forget a parenthesis,

you will encounter a syntax error.

This means that Python couldn’t figure out how to read your program.

This is similar to forgetting punctuation in English:

for example,

this text is difficult to read there is no punctuation there is also no capitalization

why is this hard because you have to figure out where each sentence ends

you also have to figure out where each sentence begins

to some extent it might be ambiguous if there should be a sentence break or not

People can typically figure out what is meant by text with no punctuation, but people are much smarter than computers. If Python doesn’t know how to read the program, it will give up and inform you with an error. For example:

def some_function()

msg = 'hello, world!'

print(msg)

return msg

File "<ipython-input-3-6bb841ea1423>", line 1

def some_function()

^

SyntaxError: invalid syntax

Here, Python tells us that there is a SyntaxError on line 1,

and even puts a little arrow in the place where there is an issue.

In this case the problem is that the function definition is missing a colon at the end.

Actually, the function above has two issues with syntax.

If we fix the problem with the colon,

we see that there is also an IndentationError,

which means that the lines in the function definition do not all have the same indentation:

def some_function():

msg = 'hello, world!'

print(msg)

return msg

File "<ipython-input-4-ae290e7659cb>", line 4

return msg

^

IndentationError: unexpected indent

Both SyntaxError and IndentationError indicate a problem with the syntax of your program,

but an IndentationError is more specific:

it always means that there is a problem with how your code is indented.

Tabs and Spaces

Some indentation errors are harder to spot than others. In particular, mixing spaces and tabs can be difficult to spot because they are both whitespace. In the example below, the first two lines in the body of the function

some_functionare indented with tabs, while the third line — with spaces. If you’re working in a Jupyter notebook, be sure to copy and paste this example rather than trying to type it in manually because Jupyter automatically replaces tabs with spaces.def some_function(): msg = 'hello, world!' print(msg) return msgVisually it may be difficult to spot the error (although Jupyter notebooks will now highlight the problem for you). Fortunately, Python does not allow you to mix tabs and spaces.

File "<ipython-input-5-653b36fbcd41>", line 4 return msg ^ TabError: inconsistent use of tabs and spaces in indentation

Variable Name Errors

Another very common type of error is called a NameError,

and occurs when you try to use a variable that does not exist.

For example:

print(a)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-7-9d7b17ad5387> in <module>()

----> 1 print(a)

NameError: name 'a' is not defined

Variable name errors come with some of the most informative error messages, which are usually of the form “name ‘the_variable_name’ is not defined”.

Why does this error message occur? That’s a harder question to answer, because it depends on what your code is supposed to do. However, there are a few very common reasons why you might have an undefined variable. The first is that you meant to use a string, but forgot to put quotes around it:

print(hello)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-8-9553ee03b645> in <module>()

----> 1 print(hello)

NameError: name 'hello' is not defined

The second reason is that you might be trying to use a variable that does not yet exist.

In the following example,

count should have been defined (e.g., with count = 0) before the for loop:

for number in range(10):

count = count + number

print('The count is:', count)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-9-dd6a12d7ca5c> in <module>()

1 for number in range(10):

----> 2 count = count + number

3 print('The count is:', count)

NameError: name 'count' is not defined

Finally, the third possibility is that you made a typo when you were writing your code.

Let’s say we fixed the error above by adding the line Count = 0 before the for loop.

Frustratingly, this actually does not fix the error.

Remember that variables are case-sensitive,

so the variable count is different from Count. We still get the same error,

because we still have not defined count:

Count = 0

for number in range(10):

count = count + number

print('The count is:', count)

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-10-d77d40059aea> in <module>()

1 Count = 0

2 for number in range(10):

----> 3 count = count + number

4 print('The count is:', count)

NameError: name 'count' is not defined

Index Errors

Next up are errors having to do with containers (like lists and strings) and the items within them. If you try to access an item in a list or a string that does not exist, then you will get an error. This makes sense: if you asked someone what day they would like to get coffee, and they answered “caturday”, you might be a bit annoyed. Python gets similarly annoyed if you try to ask it for an item that doesn’t exist:

letters = ['a', 'b', 'c']

print('Letter #1 is', letters[0])

print('Letter #2 is', letters[1])

print('Letter #3 is', letters[2])

print('Letter #4 is', letters[3])

Letter #1 is a

Letter #2 is b

Letter #3 is c

---------------------------------------------------------------------------

IndexError Traceback (most recent call last)

<ipython-input-11-d817f55b7d6c> in <module>()

3 print('Letter #2 is', letters[1])

4 print('Letter #3 is', letters[2])

----> 5 print('Letter #4 is', letters[3])

IndexError: list index out of range

Here,

Python is telling us that there is an IndexError in our code,

meaning we tried to access a list index that did not exist.

File Errors

The last type of error we’ll cover today

are those associated with reading and writing files: FileNotFoundError.

If you try to read a file that does not exist,

you will receive a FileNotFoundError telling you so.

If you attempt to write to a file that was opened read-only, Python 3

returns an UnsupportedOperationError.

More generally, problems with input and output manifest as

IOErrors or OSErrors, depending on the version of Python you use.

file_handle = open('myfile.txt', 'r')

---------------------------------------------------------------------------

FileNotFoundError Traceback (most recent call last)

<ipython-input-14-f6e1ac4aee96> in <module>()

----> 1 file_handle = open('myfile.txt', 'r')

FileNotFoundError: [Errno 2] No such file or directory: 'myfile.txt'

One reason for receiving this error is that you specified an incorrect path to the file.

For example,

if I am currently in a folder called myproject,

and I have a file in myproject/writing/myfile.txt,

but I try to open myfile.txt,

this will fail.

The correct path would be writing/myfile.txt.

It is also possible that the file name or its path contains a typo.

A related issue can occur if you use the “read” flag instead of the “write” flag.

Python will not give you an error if you try to open a file for writing

when the file does not exist.

However,

if you meant to open a file for reading,

but accidentally opened it for writing,

and then try to read from it,

you will get an UnsupportedOperation error

telling you that the file was not opened for reading:

file_handle = open('myfile.txt', 'w')

file_handle.read()

---------------------------------------------------------------------------

UnsupportedOperation Traceback (most recent call last)

<ipython-input-15-b846479bc61f> in <module>()

1 file_handle = open('myfile.txt', 'w')

----> 2 file_handle.read()

UnsupportedOperation: not readable

These are the most common errors with files, though many others exist. If you get an error that you’ve never seen before, searching the Internet for that error type often reveals common reasons why you might get that error.

Reading Error Messages

Read the Python code and the resulting traceback below, and answer the following questions:

- How many levels does the traceback have?

- What is the function name where the error occurred?

- On which line number in this function did the error occur?

- What is the type of error?

- What is the error message?

# This code has an intentional error. Do not type it directly; # use it for reference to understand the error message below. def print_message(day): messages = { 'monday': 'Hello, world!', 'tuesday': 'Today is Tuesday!', 'wednesday': 'It is the middle of the week.', 'thursday': 'Today is Donnerstag in German!', 'friday': 'Last day of the week!', 'saturday': 'Hooray for the weekend!', 'sunday': 'Aw, the weekend is almost over.' } print(messages[day]) def print_friday_message(): print_message('Friday') print_friday_message()--------------------------------------------------------------------------- KeyError Traceback (most recent call last) <ipython-input-133-fd935ca3ca2c> in <module> 16 print_message('Friday') 17 ---> 18 print_friday_message() <ipython-input-133-fd935ca3ca2c> in print_friday_message() 14 15 def print_friday_message(): ---> 16 print_message('Friday') 17 18 print_friday_message() <ipython-input-133-fd935ca3ca2c> in print_message(day) 11 'sunday': 'Aw, the weekend is almost over.' 12 } ---> 13 print(messages[day]) 14 15 def print_friday_message(): KeyError: 'Friday'Solution

- 3 levels

print_message- 13

KeyError- There isn’t really a message; you’re supposed to infer that

Fridayis not a key inmessages.

Identifying Syntax Errors

- Read the code below, and (without running it) try to identify what the errors are.

- Run the code, and read the error message. Is it a

SyntaxErroror anIndentationError?- Fix the error.

- Repeat steps 2 and 3, until you have fixed all the errors.

def another_function print('Syntax errors are annoying.') print('But at least Python tells us about them!') print('So they are usually not too hard to fix.')Solution

SyntaxErrorfor missing():at end of first line,IndentationErrorfor mismatch between second and third lines. A fixed version is:def another_function(): print('Syntax errors are annoying.') print('But at least Python tells us about them!') print('So they are usually not too hard to fix.')

Identifying Variable Name Errors

- Read the code below, and (without running it) try to identify what the errors are.

- Run the code, and read the error message. What type of

NameErrordo you think this is? In other words, is it a string with no quotes, a misspelled variable, or a variable that should have been defined but was not?- Fix the error.

- Repeat steps 2 and 3, until you have fixed all the errors.

for number in range(10): # use a if the number is a multiple of 3, otherwise use b if (Number % 3) == 0: message = message + a else: message = message + 'b' print(message)Solution

3

NameErrors fornumberbeing misspelled, formessagenot defined, and foranot being in quotes.Fixed version:

message = '' for number in range(10): # use a if the number is a multiple of 3, otherwise use b if (number % 3) == 0: message = message + 'a' else: message = message + 'b' print(message)

Identifying Index Errors

- Read the code below, and (without running it) try to identify what the errors are.

- Run the code, and read the error message. What type of error is it?

- Fix the error.

seasons = ['Spring', 'Summer', 'Fall', 'Winter'] print('My favorite season is ', seasons[4])Solution

IndexError; the last entry isseasons[3], soseasons[4]doesn’t make sense. A fixed version is:seasons = ['Spring', 'Summer', 'Fall', 'Winter'] print('My favorite season is ', seasons[-1])

Key Points

Tracebacks can look intimidating, but they give us a lot of useful information about what went wrong in our program, including where the error occurred and what type of error it was.

An error having to do with the ‘grammar’ or syntax of the program is called a

SyntaxError. If the issue has to do with how the code is indented, then it will be called anIndentationError.A

NameErrorwill occur when trying to use a variable that does not exist. Possible causes are that a variable definition is missing, a variable reference differs from its definition in spelling or capitalization, or the code contains a string that is missing quotes around it.Containers like lists and strings will generate errors if you try to access items in them that do not exist. This type of error is called an

IndexError.Trying to read a file that does not exist will give you an

FileNotFoundError. Trying to read a file that is open for writing, or writing to a file that is open for reading, will give you anIOError.

Defensive Programming

Overview

Teaching: 30 min

Exercises: 10 minQuestions

How can I make my programs more reliable?

Objectives

Explain what an assertion is.

Add assertions that check the program’s state is correct.

Correctly add precondition and postcondition assertions to functions.

Explain what test-driven development is, and use it when creating new functions.

Explain why variables should be initialized using actual data values rather than arbitrary constants.

Our previous lessons have introduced the basic tools of programming: variables and lists, file I/O, loops, conditionals, and functions. What they haven’t done is show us how to tell whether a program is getting the right answer, and how to tell if it’s still getting the right answer as we make changes to it.

To achieve that, we need to:

- Write programs that check their own operation.

- Write and run tests for widely-used functions.

- Make sure we know what “correct” actually means.

The good news is, doing these things will speed up our programming, not slow it down. As in real carpentry — the kind done with lumber — the time saved by measuring carefully before cutting a piece of wood is much greater than the time that measuring takes.

Assertions

The first step toward getting the right answers from our programs is to assume that mistakes will happen and to guard against them. This is called defensive programming, and the most common way to do it is to add assertions to our code so that it checks itself as it runs. An assertion is simply a statement that something must be true at a certain point in a program. When Python sees one, it evaluates the assertion’s condition. If it’s true, Python does nothing, but if it’s false, Python halts the program immediately and prints the error message if one is provided. For example, this piece of code halts as soon as the loop encounters a value that isn’t positive:

numbers = [1.5, 2.3, 0.7, -0.001, 4.4]

total = 0.0

for num in numbers:

assert num > 0.0, 'Data should only contain positive values'

total += num

print('total is:', total)

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-19-33d87ea29ae4> in <module>()

2 total = 0.0

3 for num in numbers:

----> 4 assert num > 0.0, 'Data should only contain positive values'

5 total += num

6 print('total is:', total)

AssertionError: Data should only contain positive values

Programs like the Firefox browser are full of assertions: 10-20% of the code they contain are there to check that the other 80–90% are working correctly. Broadly speaking, assertions fall into three categories:

-

A precondition is something that must be true at the start of a function in order for it to work correctly.

-

A postcondition is something that the function guarantees is true when it finishes.

-

An invariant is something that is always true at a particular point inside a piece of code.

For example,

suppose we are representing rectangles using a tuple

of four coordinates (x0, y0, x1, y1),

representing the lower left and upper right corners of the rectangle.

In order to do some calculations,

we need to normalize the rectangle so that the lower left corner is at the origin

and the longest side is 1.0 units long.

This function does that,

but checks that its input is correctly formatted and that its result makes sense:

def normalize_rectangle(rect):

"""Normalizes a rectangle so that it is at the origin and 1.0 units

long on its longest axis.

Input should be of the format (x0, y0, x1, y1).

(x0, y0) and (x1, y1) define the lower left and upper right corners

of the rectangle, respectively."""

assert len(rect) == 4, 'Rectangles must contain 4 coordinates'

x0, y0, x1, y1 = rect

assert x0 < x1, 'Invalid X coordinates'

assert y0 < y1, 'Invalid Y coordinates'

dx = x1 - x0

dy = y1 - y0

if dx > dy:

scaled = float(dx) / dy

upper_x, upper_y = 1.0, scaled

else:

scaled = float(dx) / dy

upper_x, upper_y = scaled, 1.0

assert 0 < upper_x <= 1.0, 'Calculated upper X coordinate invalid'

assert 0 < upper_y <= 1.0, 'Calculated upper Y coordinate invalid'

return (0, 0, upper_x, upper_y)

The preconditions on lines 6, 8, and 9 catch invalid inputs:

print(normalize_rectangle( (0.0, 1.0, 2.0) )) # missing the fourth coordinate

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-2-1b9cd8e18a1f> in <module>

----> 1 print(normalize_rectangle( (0.0, 1.0, 2.0) )) # missing the fourth coordinate

<ipython-input-1-b2455ef6a457> in normalize_rectangle(rect)

7 of the rectangle, respectively."""

8

----> 9 assert len(rect) == 4, 'Rectangles must contain 4 coordinates'

10 x0, y0, x1, y1 = rect

11 assert x0 < x1, 'Invalid X coordinates'

AssertionError: Rectangles must contain 4 coordinates

print(normalize_rectangle( (4.0, 2.0, 1.0, 5.0) )) # X axis inverted

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-3-325036405532> in <module>

----> 1 print(normalize_rectangle( (4.0, 2.0, 1.0, 5.0) )) # X axis inverted

<ipython-input-1-b2455ef6a457> in normalize_rectangle(rect)

9 assert len(rect) == 4, 'Rectangles must contain 4 coordinates'

10 x0, y0, x1, y1 = rect

---> 11 assert x0 < x1, 'Invalid X coordinates'

12 assert y0 < y1, 'Invalid Y coordinates'

13

AssertionError: Invalid X coordinates

The post-conditions on lines 20 and 21 help us catch bugs by telling us when our calculations might have been incorrect. For example, if we normalize a rectangle that is taller than it is wide everything seems OK:

print(normalize_rectangle( (0.0, 0.0, 1.0, 5.0) ))

(0, 0, 0.2, 1.0)

but if we normalize one that’s wider than it is tall, the assertion is triggered:

print(normalize_rectangle( (0.0, 0.0, 5.0, 1.0) ))

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-4-8d4a48f1d068> in <module>

----> 1 print(normalize_rectangle( (0.0, 0.0, 5.0, 1.0) ))

<ipython-input-1-b2455ef6a457> in normalize_rectangle(rect)

22

23 assert 0 < upper_x <= 1.0, 'Calculated upper X coordinate invalid'

---> 24 assert 0 < upper_y <= 1.0, 'Calculated upper Y coordinate invalid'

25

26 return (0, 0, upper_x, upper_y)

AssertionError: Calculated upper Y coordinate invalid

Re-reading our function,

we realize that line 14 should divide dy by dx rather than dx by dy.

In a Jupyter notebook, you can display line numbers by typing Ctrl+M

followed by L.

If we had left out the assertion at the end of the function,

we would have created and returned something that had the right shape as a valid answer,

but wasn’t.

Detecting and debugging that would almost certainly have taken more time in the long run

than writing the assertion.

But assertions aren’t just about catching errors: they also help people understand programs. Each assertion gives the person reading the program a chance to check (consciously or otherwise) that their understanding matches what the code is doing.

Most good programmers follow two rules when adding assertions to their code. The first is, fail early, fail often. The greater the distance between when and where an error occurs and when it’s noticed, the harder the error will be to debug, so good code catches mistakes as early as possible.

The second rule is, turn bugs into assertions or tests. Whenever you fix a bug, write an assertion that catches the mistake should you make it again. If you made a mistake in a piece of code, the odds are good that you have made other mistakes nearby, or will make the same mistake (or a related one) the next time you change it. Writing assertions to check that you haven’t regressed (i.e., haven’t re-introduced an old problem) can save a lot of time in the long run, and helps to warn people who are reading the code (including your future self) that this bit is tricky.

Test-Driven Development

An assertion checks that something is true at a particular point in the program. The next step is to check the overall behavior of a piece of code, i.e., to make sure that it produces the right output when it’s given a particular input. For example, suppose we need to find where two or more time series overlap. The range of each time series is represented as a pair of numbers, which are the time the interval started and ended. The output is the largest range that they all include:

Most novice programmers would solve this problem like this:

- Write a function

range_overlap. - Call it interactively on two or three different inputs.

- If it produces the wrong answer, fix the function and re-run that test.

This clearly works — after all, thousands of scientists are doing it right now — but there’s a better way:

- Write a short function for each test.

- Write a

range_overlapfunction that should pass those tests. - If

range_overlapproduces any wrong answers, fix it and re-run the test functions.

Writing the tests before writing the function they exercise is called test-driven development (TDD). Its advocates believe it produces better code faster because:

- If people write tests after writing the thing to be tested, they are subject to confirmation bias, i.e., they subconsciously write tests to show that their code is correct, rather than to find errors.

- Writing tests helps programmers figure out what the function is actually supposed to do.

Here are three test functions for range_overlap:

assert range_overlap([ (0.0, 1.0) ]) == (0.0, 1.0)

assert range_overlap([ (2.0, 3.0), (2.0, 4.0) ]) == (2.0, 3.0)

assert range_overlap([ (0.0, 1.0), (0.0, 2.0), (-1.0, 1.0) ]) == (0.0, 1.0)

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-25-d8be150fbef6> in <module>()

----> 1 assert range_overlap([ (0.0, 1.0) ]) == (0.0, 1.0)

2 assert range_overlap([ (2.0, 3.0), (2.0, 4.0) ]) == (2.0, 3.0)

3 assert range_overlap([ (0.0, 1.0), (0.0, 2.0), (-1.0, 1.0) ]) == (0.0, 1.0)

AssertionError:

The error is actually reassuring:

we haven’t written range_overlap yet,

so if the tests passed,

it would be a sign that someone else had

and that we were accidentally using their function.

And as a bonus of writing these tests, we’ve implicitly defined what our input and output look like: we expect a list of pairs as input, and produce a single pair as output.

Something important is missing, though. We don’t have any tests for the case where the ranges don’t overlap at all:

assert range_overlap([ (0.0, 1.0), (5.0, 6.0) ]) == ???

What should range_overlap do in this case:

fail with an error message,

produce a special value like (0.0, 0.0) to signal that there’s no overlap,

or something else?

Any actual implementation of the function will do one of these things;

writing the tests first helps us figure out which is best

before we’re emotionally invested in whatever we happened to write

before we realized there was an issue.

And what about this case?

assert range_overlap([ (0.0, 1.0), (1.0, 2.0) ]) == ???

Do two segments that touch at their endpoints overlap or not?

Mathematicians usually say “yes”,

but engineers usually say “no”.

The best answer is “whatever is most useful in the rest of our program”,

but again,

any actual implementation of range_overlap is going to do something,

and whatever it is ought to be consistent with what it does when there’s no overlap at all.

Since we’re planning to use the range this function returns as the X axis in a time series chart, we decide that:

- every overlap has to have non-zero width, and

- we will return the special value

Nonewhen there’s no overlap.

None is built into Python,

and means “nothing here”.

(Other languages often call the equivalent value null or nil).

With that decision made,

we can finish writing our last two tests:

assert range_overlap([ (0.0, 1.0), (5.0, 6.0) ]) == None

assert range_overlap([ (0.0, 1.0), (1.0, 2.0) ]) == None

---------------------------------------------------------------------------

NameError Traceback (most recent call last)

<ipython-input-148-42de7ddfb428> in <module>

----> 1 assert range_overlap([ (0.0, 1.0), (5.0, 6.0) ]) == None

2 assert range_overlap([ (0.0, 1.0), (1.0, 2.0) ]) == None

NameError: name 'range_overlap' is not defined

Again, we get an error because we haven’t written our function, but we’re now ready to do so:

def range_overlap(ranges):

"""Return common overlap among a set of [left, right] ranges."""

max_left = 0.0

min_right = 1.0

for (left, right) in ranges:

max_left = max(max_left, left)

min_right = min(min_right, right)

return (max_left, min_right)

Take a moment to think about why we calculate the left endpoint of the overlap as the maximum of the input left endpoints, and the overlap right endpoint as the minimum of the input right endpoints. We’d now like to re-run our tests, but they’re scattered across three different cells. To make running them easier, let’s put them all in a function:

def test_range_overlap():

assert range_overlap([ (0.0, 1.0), (5.0, 6.0) ]) == None

assert range_overlap([ (0.0, 1.0), (1.0, 2.0) ]) == None

assert range_overlap([ (0.0, 1.0) ]) == (0.0, 1.0)

assert range_overlap([ (2.0, 3.0), (2.0, 4.0) ]) == (2.0, 3.0)

assert range_overlap([ (0.0, 1.0), (0.0, 2.0), (-1.0, 1.0) ]) == (0.0, 1.0)

assert range_overlap([]) == None

We can now test range_overlap with a single function call:

test_range_overlap()

---------------------------------------------------------------------------

AssertionError Traceback (most recent call last)

<ipython-input-29-cf9215c96457> in <module>()

----> 1 test_range_overlap()

<ipython-input-28-5d4cd6fd41d9> in test_range_overlap()

1 def test_range_overlap():

----> 2 assert range_overlap([ (0.0, 1.0), (5.0, 6.0) ]) == None

3 assert range_overlap([ (0.0, 1.0), (1.0, 2.0) ]) == None

4 assert range_overlap([ (0.0, 1.0) ]) == (0.0, 1.0)

5 assert range_overlap([ (2.0, 3.0), (2.0, 4.0) ]) == (2.0, 3.0)

AssertionError:

The first test that was supposed to produce None fails,

so we know something is wrong with our function.

We don’t know whether the other tests passed or failed

because Python halted the program as soon as it spotted the first error.

Still,

some information is better than none,

and if we trace the behavior of the function with that input,

we realize that we’re initializing max_left and min_right to 0.0 and 1.0 respectively,

regardless of the input values.

This violates another important rule of programming:

always initialize from data.

Pre- and Post-Conditions

Suppose you are writing a function called

averagethat calculates the average of the numbers in a list. What pre-conditions and post-conditions would you write for it? Compare your answer to your neighbor’s: can you think of a function that will pass your tests but not his/hers or vice versa?Solution

# a possible pre-condition: assert len(input_list) > 0, 'List length must be non-zero' # a possible post-condition: assert numpy.min(input_list) <= average <= numpy.max(input_list), 'Average should be between min and max of input values (inclusive)'

Testing Assertions

Given a sequence of a number of cars, the function

get_total_carsreturns the total number of cars.get_total_cars([1, 2, 3, 4])10get_total_cars(['a', 'b', 'c'])ValueError: invalid literal for int() with base 10: 'a'Explain in words what the assertions in this function check, and for each one, give an example of input that will make that assertion fail.

def get_total(values): assert len(values) > 0 for element in values: assert int(element) values = [int(element) for element in values] total = sum(values) assert total > 0 return totalSolution

- The first assertion checks that the input sequence

valuesis not empty. An empty sequence such as[]will make it fail.- The second assertion checks that each value in the list can be turned into an integer. Input such as

[1, 2,'c', 3]will make it fail.- The third assertion checks that the total of the list is greater than 0. Input such as

[-10, 2, 3]will make it fail.

Key Points

Program defensively, i.e., assume that errors are going to arise, and write code to detect them when they do.

Put assertions in programs to check their state as they run, and to help readers understand how those programs are supposed to work.

Use preconditions to check that the inputs to a function are safe to use.

Use postconditions to check that the output from a function is safe to use.

Write tests before writing code in order to help determine exactly what that code is supposed to do.

Debugging

Overview

Teaching: 30 min

Exercises: 20 minQuestions

How can I debug my programs?

Objectives

Debug code containing an error systematically.

Identify ways of making code less error-prone and more easily tested.

Once testing has uncovered problems, the next step is to fix them. Many novices do this by making more-or-less random changes to their code until it seems to produce the right answer, but that’s very inefficient (and the result is usually only correct for the one case they’re testing). The more experienced a programmer is, the more systematically they debug, and most follow some variation on the rules explained below.

Know What It’s Supposed to Do

The first step in debugging something is to know what it’s supposed to do. “My program doesn’t work” isn’t good enough: in order to diagnose and fix problems, we need to be able to tell correct output from incorrect. If we can write a test case for the failing case — i.e., if we can assert that with these inputs, the function should produce that result — then we’re ready to start debugging. If we can’t, then we need to figure out how we’re going to know when we’ve fixed things.

But writing test cases for scientific software is frequently harder than writing test cases for commercial applications, because if we knew what the output of the scientific code was supposed to be, we wouldn’t be running the software: we’d be writing up our results and moving on to the next program. In practice, scientists tend to do the following:

-

Test with simplified data. Before doing statistics on a real data set, we should try calculating statistics for a single record, for two identical records, for two records whose values are one step apart, or for some other case where we can calculate the right answer by hand.

-

Test a simplified case. If our program is supposed to simulate magnetic eddies in rapidly-rotating blobs of supercooled helium, our first test should be a blob of helium that isn’t rotating, and isn’t being subjected to any external electromagnetic fields. Similarly, if we’re looking at the effects of climate change on speciation, our first test should hold temperature, precipitation, and other factors constant.

-

Compare to an oracle. A test oracle is something whose results are trusted, such as experimental data, an older program, or a human expert. We use test oracles to determine if our new program produces the correct results. If we have a test oracle, we should store its output for particular cases so that we can compare it with our new results as often as we like without re-running that program.

-

Check conservation laws. Mass, energy, and other quantities are conserved in physical systems, so they should be in programs as well. Similarly, if we are analyzing patient data, the number of records should either stay the same or decrease as we move from one analysis to the next (since we might throw away outliers or records with missing values). If “new” patients start appearing out of nowhere as we move through our pipeline, it’s probably a sign that something is wrong.

-

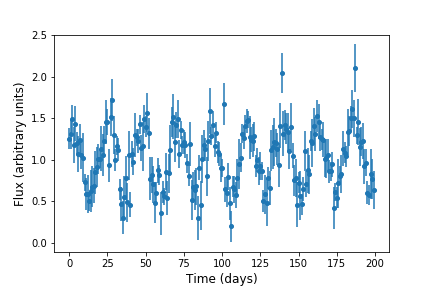

Visualize. Data analysts frequently use simple visualizations to check both the science they’re doing and the correctness of their code (just as we did in the opening lesson of this tutorial). This should only be used for debugging as a last resort, though, since it’s very hard to compare two visualizations automatically.

Make It Fail Every Time

We can only debug something when it fails, so the second step is always to find a test case that makes it fail every time. The “every time” part is important because few things are more frustrating than debugging an intermittent problem: if we have to call a function a dozen times to get a single failure, the odds are good that we’ll scroll past the failure when it actually occurs.

As part of this, it’s always important to check that our code is “plugged in”, i.e., that we’re actually exercising the problem that we think we are. Every programmer has spent hours chasing a bug, only to realize that they were actually calling their code on the wrong data set or with the wrong configuration parameters, or are using the wrong version of the software entirely. Mistakes like these are particularly likely to happen when we’re tired, frustrated, and up against a deadline, which is one of the reasons late-night (or overnight) coding sessions are almost never worthwhile.

Make It Fail Fast

If it takes 20 minutes for the bug to surface, we can only do three experiments an hour. This means that we’ll get less data in more time and that we’re more likely to be distracted by other things as we wait for our program to fail, which means the time we are spending on the problem is less focused. It’s therefore critical to make it fail fast.

As well as making the program fail fast in time, we want to make it fail fast in space, i.e., we want to localize the failure to the smallest possible region of code:

-

The smaller the gap between cause and effect, the easier the connection is to find. Many programmers therefore use a divide and conquer strategy to find bugs, i.e., if the output of a function is wrong, they check whether things are OK in the middle, then concentrate on either the first or second half, and so on.

-

N things can interact in N! different ways, so every line of code that isn’t run as part of a test means more than one thing we don’t need to worry about.

Change One Thing at a Time, For a Reason

Replacing random chunks of code is unlikely to do much good. (After all, if you got it wrong the first time, you’ll probably get it wrong the second and third as well.) Good programmers therefore change one thing at a time, for a reason. They are either trying to gather more information (“is the bug still there if we change the order of the loops?”) or test a fix (“can we make the bug go away by sorting our data before processing it?”).

Every time we make a change, however small, we should re-run our tests immediately, because the more things we change at once, the harder it is to know what’s responsible for what (those N! interactions again). And we should re-run all of our tests: more than half of fixes made to code introduce (or re-introduce) bugs, so re-running all of our tests tells us whether we have regressed.

Keep Track of What You’ve Done

Good scientists keep track of what they’ve done so that they can reproduce their work, and so that they don’t waste time repeating the same experiments or running ones whose results won’t be interesting. Similarly, debugging works best when we keep track of what we’ve done and how well it worked. If we find ourselves asking, “Did left followed by right with an odd number of lines cause the crash? Or was it right followed by left? Or was I using an even number of lines?” then it’s time to step away from the computer, take a deep breath, and start working more systematically.

Records are particularly useful when the time comes to ask for help. People are more likely to listen to us when we can explain clearly what we did, and we’re better able to give them the information they need to be useful.

Version Control Revisited

Version control is often used to reset software to a known state during debugging, and to explore recent changes to code that might be responsible for bugs. In particular, most version control systems (e.g. git, Mercurial) have:

- a

blamecommand that shows who last changed each line of a file;- a

bisectcommand that helps with finding the commit that introduced an issue.

Be Humble

And speaking of help: if we can’t find a bug in 10 minutes, we should be humble and ask for help. Explaining the problem to someone else is often useful, since hearing what we’re thinking helps us spot inconsistencies and hidden assumptions. If you don’t have someone nearby to share your problem description with, get a rubber duck!

Asking for help also helps alleviate confirmation bias. If we have just spent an hour writing a complicated program, we want it to work, so we’re likely to keep telling ourselves why it should, rather than searching for the reason it doesn’t. People who aren’t emotionally invested in the code can be more objective, which is why they’re often able to spot the simple mistakes we have overlooked.

Part of being humble is learning from our mistakes. Programmers tend to get the same things wrong over and over: either they don’t understand the language and libraries they’re working with, or their model of how things work is wrong. In either case, taking note of why the error occurred and checking for it next time quickly turns into not making the mistake at all.

And that is what makes us most productive in the long run. As the saying goes, A week of hard work can sometimes save you an hour of thought. If we train ourselves to avoid making some kinds of mistakes, to break our code into modular, testable chunks, and to turn every assumption (or mistake) into an assertion, it will actually take us less time to produce working programs, not more.

Debug With a Neighbor

Take a function that you have written today, and introduce a tricky bug. Your function should still run, but will give the wrong output. Switch seats with your neighbor and attempt to debug the bug that they introduced into their function. Which of the principles discussed above did you find helpful?

Not Supposed to be the Same

You are assisting a researcher with Python code that computes the Body Mass Index (BMI) of patients. The researcher is concerned because all patients seemingly have unusual and identical BMIs, despite having different physiques. BMI is calculated as weight in kilograms divided by the square of height in metres.

Use the debugging principles in this exercise and locate problems with the code. What suggestions would you give the researcher for ensuring any later changes they make work correctly?

patients = [[70, 1.8], [80, 1.9], [150, 1.7]] def calculate_bmi(weight, height): return weight / (height ** 2) for patient in patients: weight, height = patients[0] bmi = calculate_bmi(height, weight) print("Patient's BMI is: %f" % bmi)Patient's BMI is: 0.000367 Patient's BMI is: 0.000367 Patient's BMI is: 0.000367Solution

The loop is not being utilised correctly.

heightandweightare always set as the first patient’s data during each iteration of the loop.The height/weight variables are reversed in the function call to

calculate_bmi(...), the correct BMIs are 21.604938, 22.160665 and 51.903114.

Key Points

Know what code is supposed to do before trying to debug it.

Make it fail every time.

Make it fail fast.

Change one thing at a time, and for a reason.

Keep track of what you’ve done.

Be humble.

Timing and Speeding Up Your Programs

Overview

Teaching: 20 min

Exercises: 10 minQuestions

How can I speed-test my programs, and if necessary, make it faster?

Objectives

Analyse the speed of your code and where the bottle-necks are.

Use numpy arrays and ufuncs where possible to speed up calculations and operations on large data sets.

Because of Python’s flexibility and large number of libraries, there are often many ways that a program can do the same task. Also, the fact that it is an interpreter language can lead to large differences in speed according to whether a function is carrying out a task sequentially, i.e. line by line via the interpreter, or using some pre-compiled function to roll the different parts of the task into a single function call. For these reasons, it is easy for your code to be sub-optimal in terms of its speed. When writing Python programs you should spend some time thinking about whether your code could be made more efficient and/or to run faster.

Speed-Testing in a Notebook

Within a Jupyter Notebook or from the iPython command line, you can time sections

of code using the built-in magic commands %time and %timeit. For example:

import math

def cos_list(ang_list):

'''Takes input of a list of angles (in radians) and converts to

a list of cosines of those angles'''

cos_vals = [math.cos(ang) for ang in ang_list]

return cos_vals

angles = [i*2*math.pi/1000 for i in range(1000)] # Quick way to create a list

%time cosines = cos_list(angles)

# These examples were tested on a 2.9 GHz Intel Core i5 processor - YMMV

CPU times: user 174 µs, sys: 1 µs, total: 175 µs

Wall time: 178 µs

Here, the user CPU time is the amount of time the CPU is busy executing the user’s code, while the system CPU time is the amount of time the CPU spends executing other code, e.g. related to the operating system, such as a system call. The wall time refers to the time taken as measured by ‘a clock on the wall’, i.e. the actual time taken from the start of the process to the end, accounting for any time waiting for external processes to be run on the CPU.

Since the CPU time can vary from execution to execution (and is dependent on other processes running at the same time), it can be more informative to see an average time

(and its standard deviation) measured over many iterations of the same executed

code, which uses %timeit:

%timeit cosines = cos_list(angles)

126 µs ± 11.7 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

The default number of loops is calculated to give a required precision. The number of runs and loops can also be specified by command line options -r and -n. If you want to time the code in an entire cell, you should prefix the %time/%timeit command with an extra %, e.g. %%timeit.

You can now use %time and %timeit to time different parts of your code and identify which

are the slowest parts, so you can analyse and possibly speed them up significantly.

IPython Magic Commands

Magic commands are enhancements to the normal Python code which are intended to solve commons problems and provide shortcuts - they are provided within the iPython kernel and so can be used only within notebooks or the iPython command line itself. They are usually prefaced by a

%character, which means that they operate over the single line of code that follows the command (on the same line). If you want them to operate over the whole cell, you can preface the command with%%. Besides%timeand%timeit, there are many magic commands (see here). A few particularly useful ones are:

%whoWill display the names that have been used in the notebook (provided that the cells defining them have been executed). Adding a data-type, e.g.

%who intwill display only variables of that type. The related command%whoswill give extra information, such as the length of a collection; shape, size, typecode and size in memory of a numpy array.

%%htmlRenders the cell as a block of HTML code - useful if you want to use fancy HTML formatting. Note that this is a cell magic command that by definition is run on the whole cell. Similar commands can be used to run javascript, perl or ruby code in cells.

%%writefileA cell magic command to write the content of the cell to a file. E.g.:

%%writefile myprog.py def func(): print("This is my program!") func()Writing myprog.pyNote that if you the command will overwrite any existing file with that name. If you instead want to append the code to an existing file, use the

-aflag, e.g.%%writefile -a myprog.py. If you want to save a specific set of lines from the cell, instead of the whole cell, you can use the%savecommand instead.

%runAllows you to run any external python file (including another notebook) from a Jupyter notebook. E.g.:

print("Running external program") %run myprog.pyRunning external program This is my program!You can also use relative or absolute paths with the filename of the program to be run (or written).

Accelerating Your Code: Lists and Loops vs. Numpy Arrays and Ufuncs

One of the easiest ways to improve the speed of your code is to replace operations which loop repeatedly over a list of values, with the equivalent pre-compiled numpy functions operating on a numpy array or ‘ndarray’ (the equivalent of a list or nested list). We will describe how numpy arrays work, along with a number of useful functions in the next two Episodes, but for now we will see how replacing a loop with a numpy function can make a huge difference to the speed of your code.

Universal functions (ufuncs) is the general term for a large number of numpy functions designed to work directly with arrays, many of which are written in compiled C. It is this aspect, combined with their often very efficient design for fast calculation of arrays, which makes ufuncs a much faster option than working with your own equivalent interpreted python, especially when the arrays that must be looped over are very large.

Let’s look again at the example calculation of the cosines of a large list of angles, described above. Numpy also has a cosine function. We need to remember to convert the input list into a numpy array before we pass it to the numpy function:

import numpy # unless you have already done so

for n in [1000, 10000, 100000]:

angles = [i*2*math.pi/n for i in range(n)]

angles2 = numpy.array(angles) # convert list to numpy array

%timeit cosines = cos_list(angles)

%timeit cosines2 = numpy.cos(angles2) # numpy cos ufunc

print("for",len(angles2),"values in the list/array.\n")

126 µs ± 7.45 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

9.85 µs ± 51.9 ns per loop (mean ± std. dev. of 7 runs, 100000 loops each)

for 1000 items in the list/array.

1.2 ms ± 84.6 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

25.6 µs ± 802 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each)

for 10000 items in the list/array.

13.8 ms ± 1.65 ms per loop (mean ± std. dev. of 7 runs, 100 loops each)

134 µs ± 6.71 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)

for 100000 items in the list/array.

The run-time of our original list/loop-based function scales close to linearly with the number of items in the list. However, the numpy function and array runs much more quickly and the run time scales more closely with the square root of the number of items in this case. Therefore, much larger gains in speed can be obtained when the lists/arrays used are large. Note that the exact improvement will depend on the function as well as the size (and dimensionality) of the arrays used.

The following function reads in a list of values (which must themselves be single numbers) and outputs a new list with those values which equal or exceed some lower-limit:

def select_above(input_list,lower_lim):

for i, cosine_val in enumerate(input_list):

if i == 0 and cosine_val >= lower_lim:

limit_list = [cosine_val]

elif i > 0 and cosine_val >= lower_lim:

limit_list.append(cosine_val)

return limit_list

In numpy we can use a conditional directly on the array, to select the array elements which satisfy the condition and create a new array from them. We can compare the speed of both:

n = 10000

angles = [i*2*math.pi/n for i in range(n)]

angles2 = numpy.array(angles)

cosines = cos_list(angles)

cosines2 = numpy.cos(angles2)

print("Using a list and loop over a conditional:")

%timeit limit_cosines = select_above(cosines,0.5)

print("Use a conditional to select from a numpy array:")

%timeit limit_cosines2 = cosines2[cosines2 >= 0.5]

Using list and loop over a conditional:

1.13 ms ± 81.2 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

Use a conditional to select from a numpy array:

7.73 µs ± 61.9 ns per loop (mean ± std. dev. of 7 runs, 100000 loops each)

Again, the numpy solution is much faster than the basic python equivalent!

Vectorization

Numpy includes a useful function called

vectorize()which takes as its main argument the name of a python function (e.g. one that you have defined). It returns a callable function that is vectorized. This means that it can be given arguments that are either nested objects (e.g. lists) or numpy arrays, and then loops over their contents to run the original function on each corresponding set of arguments and return a numpy array or tuple of arrays (if the original function contains multiple outputs). In this way, you can easily run a function for many values of its arguments without setting up a loop yourself, or convert your function into something that works like a numpy function, although it is not pre-compiled.Note however, that because they are not pre-compiled, vectorized functions are not a replacement for e.g. the use of a combination numpy functions or JIT compilation (see below) which can speed up your code significantly.

vectorize()is more of a convenience for you to produce vectorized outputs from your own functions and/or avoid excessive use of loops. The looping is done within the vectorized function however, so it remains as slow as other functions with use the interpreter line-by-line.Challenge

Vectorize the

math.cosfunction and compare its speed (for 10000 input angles) with thecos_listfunction defined earlier, andnumpy.cos:Solution

cos_vec = numpy.vectorize(math.cos) n = 10000 angles = [i*2*math.pi/n for i in range(n)] angles2 = numpy.array(angles) print("Using a list and loop over math.cos:") %timeit cosines = cos_list(angles) print("Using an array and numpy:") %timeit cosines2 = numpy.cos(angles2) print("Using an array and vectorized math.cos:") %timeit cosines_vec = cos_vec(angles2)Using a list and loop over math.cos: 1.2 ms ± 7.29 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each) Using an array and numpy: 25 µs ± 143 ns per loop (mean ± std. dev. of 7 runs, 10000 loops each) Using an array and vectorized math.cos: 1.08 ms ± 72.9 µs per loop (mean ± std. dev. of 7 runs, 1000 loops each)

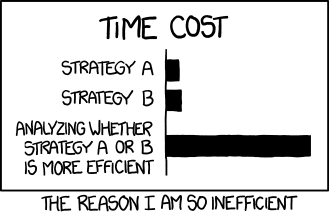

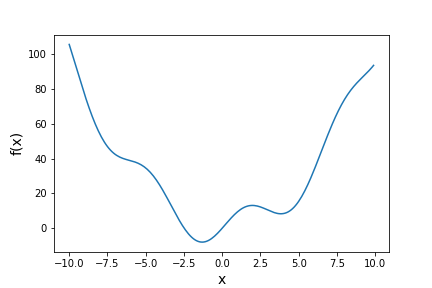

Of course, when trying to optimise your code, you should bear in mind the aphorism ‘the best is the enemy of the good’ (as neatly summarised in this xkcd cartoon below). Try to assess whether your code is good enough for the job you want to do with it and whether it’s actually worthwhile to spend time on speeding it up.

Key Points

Use magic commands

%timeand%timeitto speed-test your code in NotebooksNumpy arrays and functions (ufuncs) are much faster than using lists and loops.

You can make code more efficient for handling arrays (if not faster) using vectorization.

Working with Numpy Arrays

Overview

Teaching: 50 min

Exercises: 30 minQuestions

How do I create, modify and select from numpy arrays?

Objectives

Learn how to create, edit, copy and reshape numpy arrays.

Read data from files into structured arrays and select subsets from arrays using conditional statements.

Array Basics

Numpy arrays, are objects of class ndarray, corresponding to homogeneous and potentially multidimensional ‘tables’, typically containing numbers but which may contain other variable types. The items in the array are indexed by a tuple of integers, with each dimension corresponding to an axis. They can have as many axes (and thus dimensions) as needed.

We can convert a list into a numpy array. Arrays are printed using square brackets, like lists, but with spaces instead of commas between items. You cannot define an array using this format however, you need to use a numpy function to do that:

import numpy as np # We will import numpy and create a shorter 'alias' for it

# This is a 1d array:

a = np.array([1,2,3])

print("1d array: ",a)

# This is a 2d array:

b = np.array([[1,2,3],[3,4,5]])

print("2d array:")

print(b)

# This is a 3d array:

c = np.array([[[1,2,3],[4,5,6],[7,8,9],[10,11,12]],[[21,22,23],[24,25,26],

[27,28,29],[30,31,32]]])

print("3d array:")

print(c)

1d array: [1 2 3]

2d array:

[[1 2 3]

[3 4 5]]

3d array:

[[[ 1 2 3]

[ 4 5 6]

[ 7 8 9]

[10 11 12]]

[[21 22 23]

[24 25 26]

[27 28 29]

[30 31 32]]]

Note that for printing purposes, the last axis is printed from left to right and the second-to-last is printed from top to bottom on consecutive lines. The rest are also printed from top to bottom, with each slice separated by an empty line.

You can find the morphology of the array using various numpy methods:

print("1d array a:")

print("Number of axes: ",a.ndim," and length of each axis: ",a.shape)

print("2d array b:")

print("Number of axes: ",b.ndim," and length of each axis: ",b.shape)

print("3d array c:")

print("Number of axes: ",b.ndim," and length of each axis: ",c.shape)

1d array a:

Number of axes: 1 and length of each axis: (3,)

2d array b:

Number of axes: 2 and length of each axis: (2, 3)

3d array c:

Number of axes: 2 and length of each axis: (2, 4, 3)

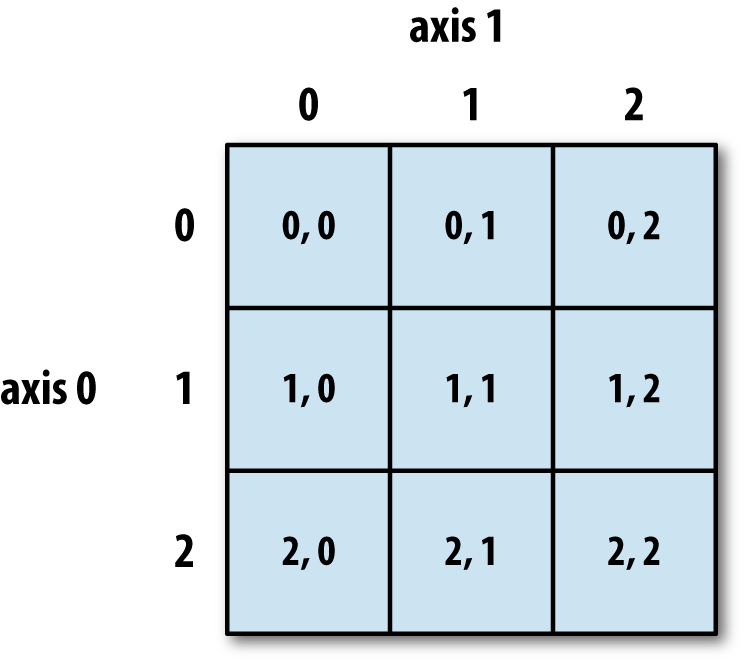

Array Indexing

Numpy arrays are indexed using row-major order, that is in a 2-dimensional array, values are stored consecutively in memory along the rows of the array, and the first index corresponds to the row, the second index the columns (the same as in matrix indexing, but opposite to Cartesian coordinates):

More generally (e.g. for arrays with additional dimensions), the last index in the sequence is the one which is stepped through the fastest in memory, i.e. we read along the columns before we get to the next row.

The size method gives the total number of elements in the array. We can also output the data type using the dtype method:

print("Array c:")

print("total number of elements: ",c.size)

print("data type of elements: ", c.dtype)

Array c:

total number of elements: 24

data type of elements: int64

Array elements can consist of all the different data types. Unless otherwise specified, the type will be chosen that best fits the values you use to create the array.

Just like lists, arrays can be iterated through using loops, starting with the first axis:

print("For array a:")

for val in a:

print(val,val**(1/3))

print("For array c:")

for j, arr in enumerate(c):

print("Sub-array",j,"=",arr)

for k, vec in enumerate(arr):

print("Vector",k,"of sub-array",j,"=",vec)

For array a:

1 1.0

2 1.2599210498948732

3 1.4422495703074083

For array c:

Sub-array 0 = [[ 1 2 3]

[ 4 5 6]

[ 7 8 9]

[10 11 12]]

Vector 0 of sub-array 0 = [1 2 3]

Vector 1 of sub-array 0 = [4 5 6]

Vector 2 of sub-array 0 = [7 8 9]

Vector 3 of sub-array 0 = [10 11 12]

Sub-array 1 = [[21 22 23]

[24 25 26]

[27 28 29]

[30 31 32]]

Vector 0 of sub-array 1 = [21 22 23]

Vector 1 of sub-array 1 = [24 25 26]

Vector 2 of sub-array 1 = [27 28 29]

Vector 3 of sub-array 1 = [30 31 32]

However, numpy allows much faster access to the component parts of an array through slicing, and much faster operations on arrays using the numpy ufuncs.

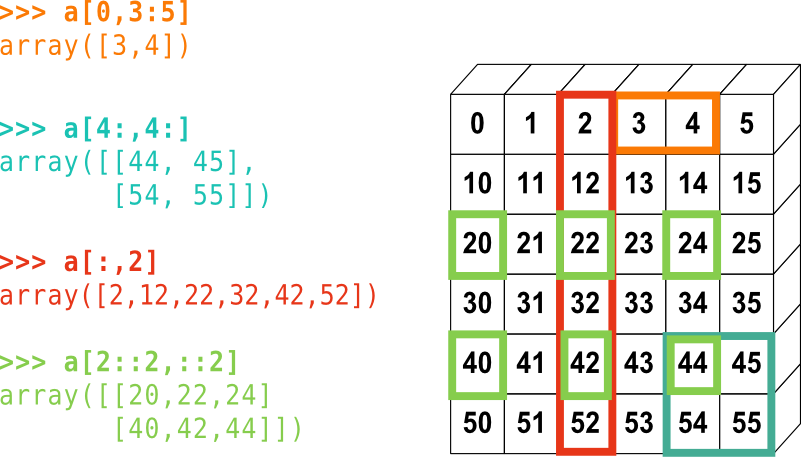

Array Slicing

Numpy arrays use the same rules for slicing as other Python iterables such as lists and strings.

Challenge

Without running the code first, what will the following print statements show?

d = np.array([0,1,2,3,4,5,6]) print(d[1:]) print(d[2:4]) print(d[-1]) print(d[::2]) print(d[-1:1:-1])Solution

[1 2 3 4 5 6] [2 3] 6 [0 2 4 6] [6 5 4 3 2]Slicing in two dimensions:

Challenge

Without running the code first, for the 3D matrix

cdefined earlier, what wouldprint(c[-1,1:3,::2])show?Solution

[[24 26] [27 29]]

Making Simple Starting Arrays

It’s often useful to create a simple starting array of elements that can be modified or written to later on. Some simple ways to do this are shown here - the shape of the new array is specified using a tuple (or single integer if 1-D).

a = np.zeros((2,3)) # Fill the array with 0.

print("a =",a)

b = np.ones((4,4)) # Fill with 1.

print("b =",b)

c = np.full(10,3.0) # Fill with the value given

print("c =",c)

a = [[0. 0. 0.]

[0. 0. 0.]]

b = [[1. 1. 1. 1.]

[1. 1. 1. 1.]

[1. 1. 1. 1.]

[1. 1. 1. 1.]]

c = [3. 3. 3. 3. 3. 3. 3. 3. 3. 3.]

Making Evenly Spaced and Meshgrid Arrays

Besides building an array by hand, we can generate arrays automatically in a variety of ways.

Firstly, there are a variety of numpy functions to generate arrays of evenly spaced numbers.

arange generates numbers with a fixed interval (or step) between them:

a = np.arange(8) # Generates linearly spaced numbers. Default step size = 1.0 and start = 0.0

print("a =",a)

b = np.arange(start=3, stop=12, step=0.8) # The stop value is excluded

print("b =",b)

a = [0 1 2 3 4 5 6 7]

b = [ 3. 3.8 4.6 5.4 6.2 7. 7.8 8.6 9.4 10.2 11. 11.8]

The linspace function produces num numbers over a fixed range inclusive of the start and stop

value. geomspace and logspace work in a similar way to produce geometrically spaced values

(i.e. equivalent to linear spacing of the logarithm of the values). Note that we don’t need to specify

the argument names if they are written in the correct order for the function. There are also a number

of hidden default variables that may be specified if we wish - you should always check the

documentation for a function before you use it, either via an online search or using the help

functionality in the Notebook or python command-line.

c = np.geomspace(10.0,1e6,6)

print("c =",c)

d = np.logspace(1,6,6)

print("d =",d)

c = [1.e+01 1.e+02 1.e+03 1.e+04 1.e+05 1.e+06]

d = [1.e+01 1.e+02 1.e+03 1.e+04 1.e+05 1.e+06]

linspace and geomspace also accept arrays of stop, start and num to produce multidimensional arrays of numbers.

meshgrid is a particularly useful function that accepts N 1-D arrays to produce N N-D grids of coordinates. Each point in a grid shows the coordinate value of the corresponding axis. These can be used to, e.g. evaluate functions across a grid of parameter values or make 3-D plots or contour plots of surfaces.

x = np.linspace(21,30,10)

y = np.linspace(100,800,8)

xgrid1, ygrid1 = np.meshgrid(x,y,indexing='xy') # Use Cartesian (column-major order) indexing

xgrid2, ygrid2 = np.meshgrid(x,y,indexing='ij') # Use matrix (row-major order) indexing

print("Using Cartesian (column-major order) indexing:")

print("Grid of x-values:")

print(xgrid1,"\n") # Add a newline after printing the grid

print("Grid of y-values:")

print(ygrid1,"\n")

print("Using matrix (row-major order) indexing:")

print("Grid of x-values:")

print(xgrid2,"\n")

print("Grid of y-values:")

print(ygrid2,"\n")

Note that the printed grids begin in the top-left corner with the [0,0] position, but the column and row values are then reversed for xy vs ij indexing.

Using Cartesian (column-major order) indexing:

Grid of x-values:

[[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]

[21. 22. 23. 24. 25. 26. 27. 28. 29. 30.]]

Grid of y-values:

[[100. 100. 100. 100. 100. 100. 100. 100. 100. 100.]

[200. 200. 200. 200. 200. 200. 200. 200. 200. 200.]

[300. 300. 300. 300. 300. 300. 300. 300. 300. 300.]

[400. 400. 400. 400. 400. 400. 400. 400. 400. 400.]

[500. 500. 500. 500. 500. 500. 500. 500. 500. 500.]

[600. 600. 600. 600. 600. 600. 600. 600. 600. 600.]

[700. 700. 700. 700. 700. 700. 700. 700. 700. 700.]

[800. 800. 800. 800. 800. 800. 800. 800. 800. 800.]]

Using matrix (row-major order) indexing:

Grid of x-values:

[[21. 21. 21. 21. 21. 21. 21. 21.]

[22. 22. 22. 22. 22. 22. 22. 22.]

[23. 23. 23. 23. 23. 23. 23. 23.]

[24. 24. 24. 24. 24. 24. 24. 24.]

[25. 25. 25. 25. 25. 25. 25. 25.]

[26. 26. 26. 26. 26. 26. 26. 26.]

[27. 27. 27. 27. 27. 27. 27. 27.]

[28. 28. 28. 28. 28. 28. 28. 28.]

[29. 29. 29. 29. 29. 29. 29. 29.]

[30. 30. 30. 30. 30. 30. 30. 30.]]

Grid of y-values:

[[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]

[100. 200. 300. 400. 500. 600. 700. 800.]]

Editing and Appending

To edit specific values of an array, you can simply replace the values using slicing, e.g.:

z = np.zeros((8,6))

z[2::2,2:-1] = 1

print(z)

[[0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0.]

[0. 0. 1. 1. 1. 0.]

[0. 0. 0. 0. 0. 0.]

[0. 0. 1. 1. 1. 0.]

[0. 0. 0. 0. 0. 0.]

[0. 0. 1. 1. 1. 0.]

[0. 0. 0. 0. 0. 0.]]

Additional elements can be added to the end of the array using append, or inserted before a specified index/indices using insert. Elements may be removed using delete.

a = np.arange(2,8)

print(a)

b = np.append(a,[8,9]) # Appends [8,9] to end of array

print(b)

c = np.insert(b,5,[21,22,23]) # Inserts [21,22,23] before element with index 5

print(c)

d = np.delete(c,[0,3,6]) # Deletes elements with index 0, 3, 6

print(d)

[2 3 4 5 6 7]

[2 3 4 5 6 7 8 9]

[ 2 3 4 5 6 21 22 23 7 8 9]

[ 3 4 6 21 23 7 8 9]

If we want to append to a multi-dimensional array, but do not specify an axis, the arrays will

be flattened (see ravel below) before appending, to produce a 1-D array. If we specify an axis, the array we append must have the same number of dimensions and the same shape along the other axes. E.g.:

a = np.zeros((3,8))

print(a,"\n")

b = np.append(a,np.ones((3,1)),axis=1)

print(b,"\n")

c = np.append(b,np.full((2,9),2.),axis=0)

print(c,"\n")

d = np.append(c,np.full((3,1),3.),axis=1)

print(d)

[[0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0. 0.]

[0. 0. 0. 0. 0. 0. 0. 0.]]

[[0. 0. 0. 0. 0. 0. 0. 0. 1.]

[0. 0. 0. 0. 0. 0. 0. 0. 1.]

[0. 0. 0. 0. 0. 0. 0. 0. 1.]]

[[0. 0. 0. 0. 0. 0. 0. 0. 1.]

[0. 0. 0. 0. 0. 0. 0. 0. 1.]

[0. 0. 0. 0. 0. 0. 0. 0. 1.]

[2. 2. 2. 2. 2. 2. 2. 2. 2.]

[2. 2. 2. 2. 2. 2. 2. 2. 2.]]

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-65-6a09bd69590d> in <module>

8 print(c,"\n")

9

---> 10 d = np.append(c,np.full((3,1),3.),axis=1)

11 print(d)

<__array_function__ internals> in append(*args, **kwargs)

~/anaconda3/lib/python3.7/site-packages/numpy/lib/function_base.py in append(arr, values, axis)

4698 values = ravel(values)

4699 axis = arr.ndim-1

-> 4700 return concatenate((arr, values), axis=axis)

4701

4702

<__array_function__ internals> in concatenate(*args, **kwargs)

ValueError: all the input array dimensions for the concatenation axis must match exactly, but along dimension 0, the array at index 0 has size 5 and the array at index 1 has size 3

Copying Arrays

You might think that we can make a direct copy

bof a Numpy arrayausinga = b. But look what happens if we change a value ina:a = [5.,4.,3.,9.] b = a print("b =",b) a[2] = 100. print("b =",b)b = [5. 4. 3. 9.] b = [ 5. 4. 100. 9.]The new array variable b is just another label for the array

a, so any changes toaare also mirrored inb, usually with undesirable results! If we want to make an independent copy of an array, we can use numpy’scopyfunction. Alternatively, we can carry out an operation on the original array which doesn’t change it (most operations write a new array by default). For example, both this:a = np.array([5.,4.,3.,9.]) b = np.copy(a) print("b =",b) a[2] = 100. print("b =",b)and this:

a = np.array([5.,4.,3.,9.]) b = a + 0 print("b =",b) a[2] = 100. print("b =",b)will make

ba completely new array which starts out identical toabut is independent of any changes toa:b = [5. 4. 3. 9.] b = [5. 4. 3. 9.]

Reshaping and Stacking

Sometimes it can be useful to change the shape of an array. For example, this can make some data analysis easier (e.g. to make distinct rows or columns in the data) or allow you to apply certain functions which may otherwise be impossible due to the array not having the correct shape (e.g. see broadcasting in the next episode).

Numpy’s reshape function allows an array to be reshaped to a different array of the same size

(so the product of row and column lengths should be the same as in the original array). The