Looking at some univariate data: summary statistics and histograms

Overview

Teaching: 50 min

Exercises: 20 min

Questions

How do we visually present univariate data (relating to a single variable)?

How can we quantify the data distribution in a simple way?

Objectives

Learn to use numpy and matplotlib to load in and plot univariate data as histograms and rug plots.

Learn about simple sample statistics and how to calculate them with numpy.

We are going to start by using Numpy and Matplotlib’s plotting library.

import numpy as np

import matplotlib.pyplot as plt

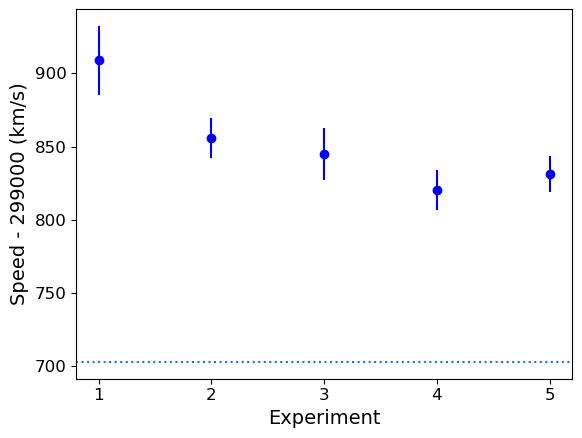

First, let’s load some data. We’ll start with the Michelson speed-of-light measurements (called michelson.txt).

It’s important to understand the nature of the data before you read it in, so be sure to check this by looking at the data file itself (e.g. via a text editor or other file viewer) before loading it. For the speed-of-light data we see 4 columns. The 1st column is just an identifier (‘row number’) for the measurement. The 2nd column is the ‘run’ - the measurement within a particular experiment (an experiment consists of 20 measurements). The 4th column identifies the experiment number. The crucial measurement itself is the 3rd column - to save on digits this just lists the speed (in km/s) minus 299000, rounded to the nearest 10 km/s.

If the data is in a fairly clean array, this is easily achieved with numpy.genfromtxt (google it!). By setting the argument names=True we can read in the column names, which are assigned as field names to the resulting numpy structured data array.

We will use the field names of the resulting structured array to assign the data columns Run, Speed and Expt to separate 1-D arrays, and print the three arrays to check them:

michelson = np.genfromtxt("michelson.txt",names=True)

print(michelson.shape) ## Prints shape of the array as a tuple

run = michelson['Run']

speed = michelson['Speed']

experiment = michelson['Expt']

print(run,speed,experiment)

(100,)

[ 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. 14. 15. 16. 17. 18.

19. 20. 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. 14. 15. 16.

17. 18. 19. 20. 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12. 13. 14.

15. 16. 17. 18. 19. 20. 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 11. 12.

13. 14. 15. 16. 17. 18. 19. 20. 1. 2. 3. 4. 5. 6. 7. 8. 9. 10.

11. 12. 13. 14. 15. 16. 17. 18. 19. 20.] [ 850. 740. 900. 1070. 930. 850. 950. 980. 980. 880. 1000. 980.

930. 650. 760. 810. 1000. 1000. 960. 960. 960. 940. 960. 940.

880. 800. 850. 880. 900. 840. 830. 790. 810. 880. 880. 830.

800. 790. 760. 800. 880. 880. 880. 860. 720. 720. 620. 860.

970. 950. 880. 910. 850. 870. 840. 840. 850. 840. 840. 840.

890. 810. 810. 820. 800. 770. 760. 740. 750. 760. 910. 920.

890. 860. 880. 720. 840. 850. 850. 780. 890. 840. 780. 810.

760. 810. 790. 810. 820. 850. 870. 870. 810. 740. 810. 940.

950. 800. 810. 870.] [1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 1. 2. 2. 2. 2.

2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 2. 3. 3. 3. 3. 3. 3. 3. 3.

3. 3. 3. 3. 3. 3. 3. 3. 3. 3. 3. 3. 4. 4. 4. 4. 4. 4. 4. 4. 4. 4. 4. 4.

4. 4. 4. 4. 4. 4. 4. 4. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5. 5.

5. 5. 5. 5.]

The speed-of-light data given here are primarily univariate and continuous (although values are rounded to the nearest 10 km/s). Additional information is provided in the form of the run and experiment number, which may be used to screen and compare the data.
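To see what names=True does without needing the data file, here is a minimal sketch that reads a small in-memory table. The three rows and the header names (including the Row identifier) are made up for illustration and only mimic the layout described above; the real file may differ in detail.

```python
import io
import numpy as np

# Hypothetical three-row table in the layout described above:
# a row identifier followed by the Run, Speed and Expt columns.
text = """Row Run Speed Expt
1 1 850 1
2 2 740 1
3 3 900 1
"""

# names=True takes the field names from the header line
table = np.genfromtxt(io.StringIO(text), names=True)

print(table.shape)        # one entry per row: the structured array is 1-D
print(table.dtype.names)  # field names read from the header
speed = table["Speed"]    # select a column by its field name
print(speed)
```

Each field is read as a float by default, which is why the run and experiment numbers in the lesson output are printed with a trailing decimal point.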

Data types and dimensions

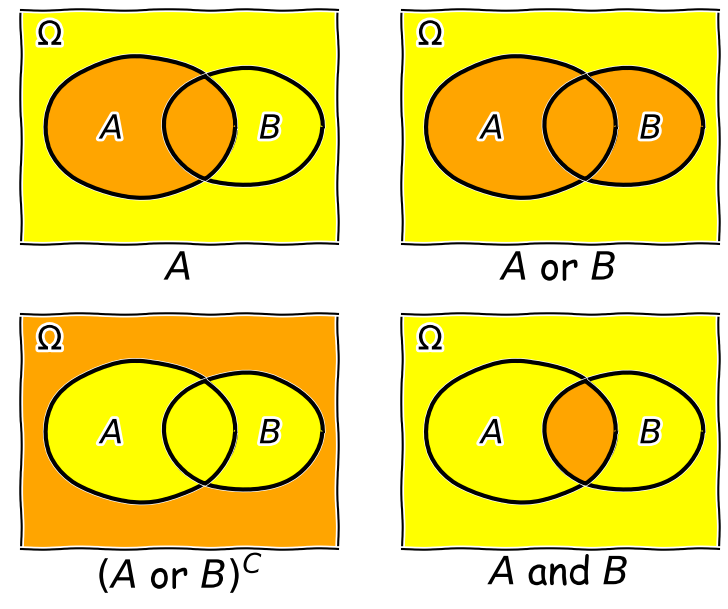

Data can be categorised according to the type of data and also the number of dimensions (number of variables describing the data).

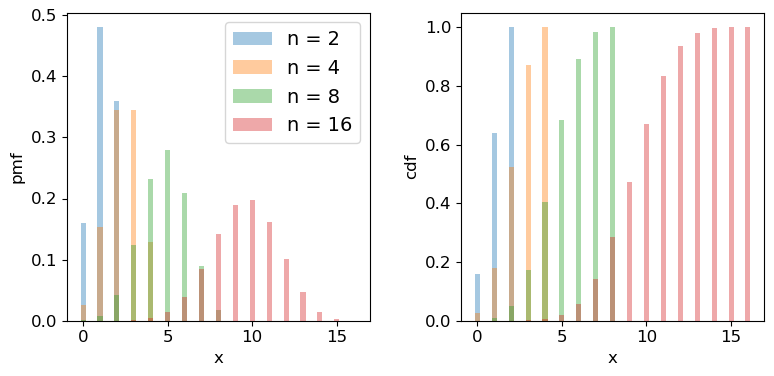

- Continuous data may take any value within a finite or infinite interval; e.g. the energy of a photon produced by a continuum emission process, the strength of a magnetic field or the luminosity of a star.

- Discrete data has numerical values which are distinct, most commonly integer values for counting something; e.g. the number of particles detected in a specified energy range and/or time interval, or the number of objects with a particular property (e.g. stars with luminosity in a certain range, or planets in a stellar system).

- Categorical data takes on non-numerical values, e.g. particle type (electron, pion, muon…).

- Ordinal data is a type of categorical data which can be given a relative ordering or ranking but where the actual differences between ranks are not known or explicitly given by the categories; e.g. questionnaires with numerical grading schemes such as strongly agree, agree…. strongly disagree. Astronomical classification schemes (e.g. types of star, A0, G2 etc.) are a form of ordinal data, although the categories may map on to some form of continuous data (e.g. stellar temperature).

In this course we will consider the analysis of continuous and discrete data types which are most common in the physical sciences, although categorical/ordinal data types may be used to select and compare different sub-sets of data.

Besides the data type, data may have different numbers of dimensions.

- Univariate data is described by only one variable (e.g. stellar temperature). Visually, it is often represented as a one-dimensional histogram.

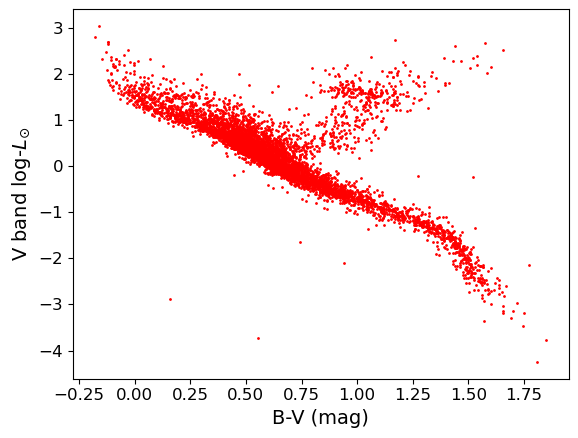

- Bivariate data is described by two variables (e.g. stellar temperature and luminosity). Visually it is often represented as a set of co-ordinates on a 2-dimensional plane, i.e. a scatter plot.

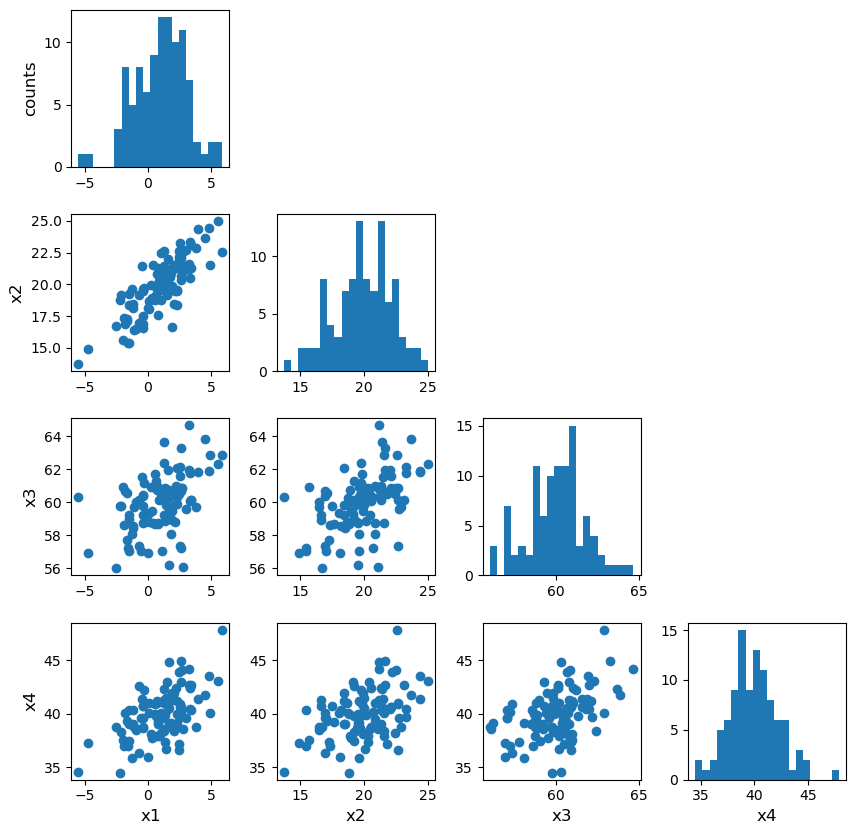

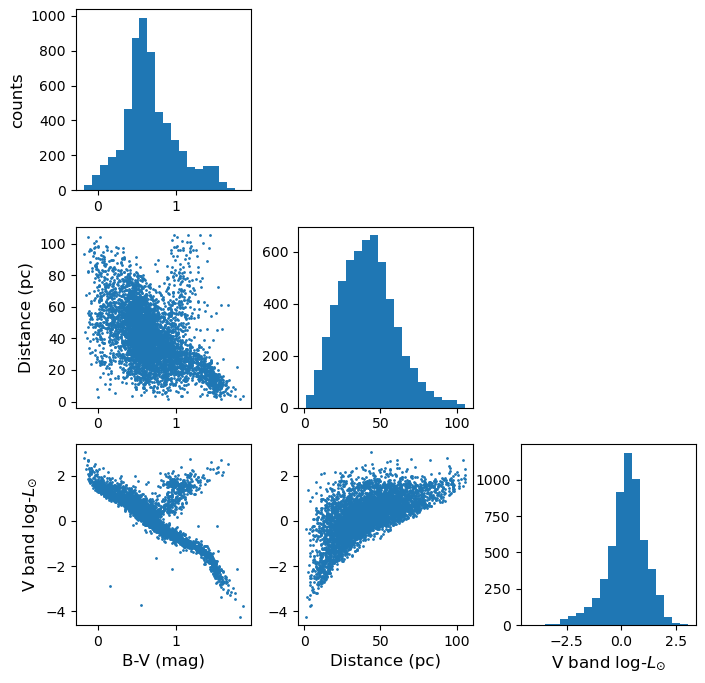

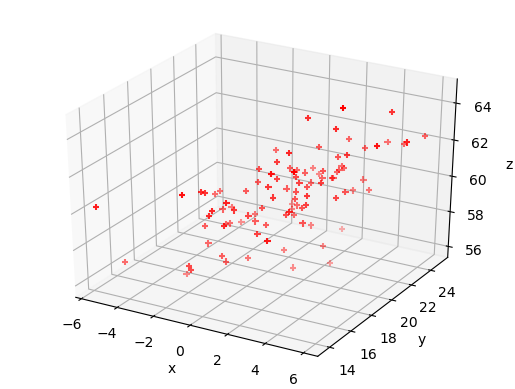

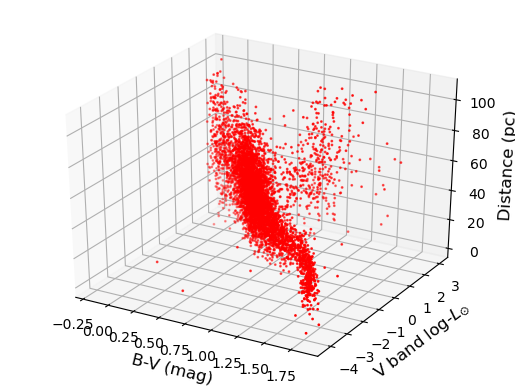

- Multivariate data includes three or more variables (e.g. stellar temperature, luminosity, mass, distance etc.). Visually it can be difficult to represent, but colour or tone may be used to describe a 3rd dimension on a plot and it is possible to show interactive 3-D scatter plots which can be rotated to see all the dimensions clearly. Multivariate data can also be represented 2-dimensionally using a scatter plot matrix.

We will first consider univariate data, and explore statistical methods for bivariate and multivariate data later on.

Making and plotting histograms

Now the most important step: always plot your data!!!

It is possible to show univariate data as a series of points or lines along a single axis using a rug plot, but it is more common to plot a histogram, where the data values are assigned to and counted in fixed width bins. The bins are usually defined to be contiguous (i.e. touching, with no gaps between them), although bins may have values of zero if no data points fall in them.

The matplotlib.pyplot.hist function automatically produces a histogram plot and returns the counts per bin and the bin edges (together with the matplotlib objects used to draw the bars). The bins argument specifies the number of histogram bins (10 by default), while range allows the user to predefine a range over which the histogram will be made. If the density argument is set to True, the histogram counts will be normalised such that the integral over all bins is 1 (this turns your histogram units into those of a probability density function). For more information on the commands and their arguments, google them or type in the cell plt.hist?.

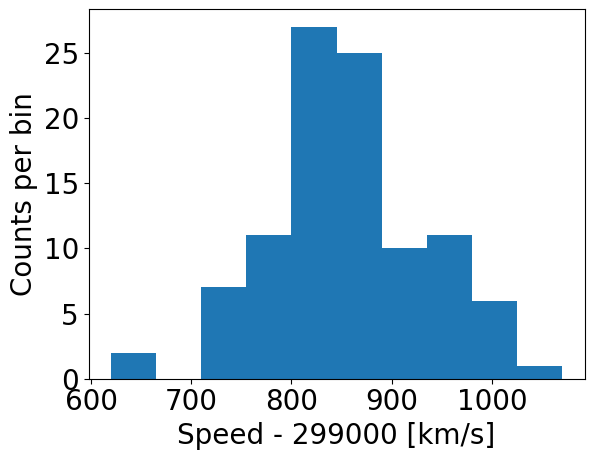

Now let’s try plotting the histogram of the data. When plotting, we should be sure to include clearly and appropriately labelled axes for this and any other figures we make, including correct units as necessary!

Note that it is important to make sure that your labels are easily readable by using the fontsize/labelsize arguments in the commands below. It is worth spending some time playing with the settings for making the histogram plots, to understand how you can change the plots to suit your preferences.

### First we use the matplotlib hist command, setting density=False:

## Set up plot window

plt.figure()

## Make and plot histogram (note that patches are matplotlib drawing objects)

counts, edges, patches = plt.hist(speed, bins=10, density=False)

plt.xlabel("Speed - 299000 [km/s]", fontsize=14)

plt.ylabel("Counts per bin", fontsize=14)

plt.tick_params(axis='x', labelsize=12)

plt.tick_params(axis='y', labelsize=12)

plt.show()

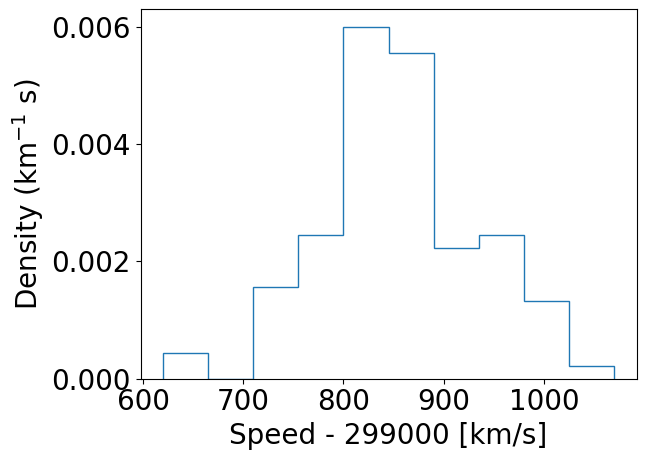

### And with density=True

plt.figure()

densities, edges, patches = plt.hist(speed, bins=10, density=True, histtype='step')

plt.xlabel("Speed - 299000 [km/s]", fontsize=14)

plt.ylabel("Density (km$^{-1}$ s)", fontsize=14)

plt.tick_params(axis='x', labelsize=12)

plt.tick_params(axis='y', labelsize=12)

plt.show()

### For demonstration purposes, check that the integral over densities add up to 1 (you don't normally need to do this!)

### We need to multiply the densities by the bin widths (the differences between adjacent edges) and sum the result.

print("Integrated densities =",np.sum(densities*(np.diff(edges))))

Integrated densities = 1.0

By using histtype='step' we can plot the histogram using lines and no colour fill (the default, histtype='bar', draws filled bars).

Note that there are 11 edges and 10 values for counts when 10 bins are chosen, because the edges define the upper and lower limits of the contiguous bins used to make the histogram. The bins argument of the histogram function is used to define the edges of the bins: if it is an integer, that is the number of equal-width bins which is used over range. The binning can also be chosen using various algorithms to optimise different aspects of the data, or specified in advance using a sequence of bin edge values, which can be used to allow non-uniform bin widths. For example, try remaking the plot using some custom binning below.

## First define the bin edges:

newbins=[600.,700.,750.,800.,850.,900.,950.,1000.,1100.]

## Now plot

plt.figure()

counts, edges, patches = plt.hist(speed, bins=newbins, density=False)

plt.xlabel("Speed - 299000 [km/s]", fontsize=20)

plt.ylabel("Counts per bin", fontsize=20)

plt.tick_params(axis='x', labelsize=20)

plt.tick_params(axis='y', labelsize=20)

plt.show()

Numpy also has a simple function, np.histogram, which bins data into a histogram using similar arguments to the matplotlib version and returns the counts per bin and the bin edges. It is especially useful when you only need the binned values, but for plotting purposes Matplotlib’s plt.hist command is more convenient.
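As a minimal sketch of np.histogram on its own, the example below bins a small made-up data set (not the Michelson measurements) with custom, non-uniform bin edges, and checks the two properties discussed above: there is one more edge than there are bins, and the densities integrate to 1.

```python
import numpy as np

# Hypothetical toy data set, chosen only for illustration
data = np.array([620., 650., 720., 740., 760., 800.,
                 810., 810., 850., 880., 930., 1070.])

# Non-uniform bin edges, given explicitly as a sequence
edges_in = [600., 700., 800., 900., 1100.]

counts, edges = np.histogram(data, bins=edges_in)
densities, _ = np.histogram(data, bins=edges_in, density=True)

print(counts)                    # number of values falling in each bin
print(len(edges), len(counts))   # one more edge than bins
# Multiply densities by bin widths and sum to integrate over all bins
print(np.sum(densities * np.diff(edges)))
```

Note that a value lying exactly on an interior edge (800 here) is counted in the bin to its right; only the last bin includes its upper edge.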

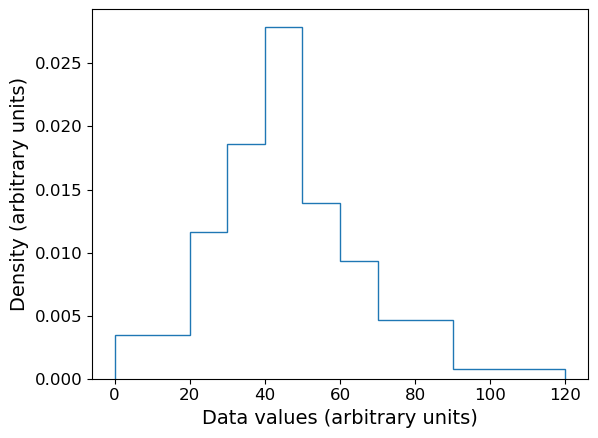

Plotting histograms with pre-assigned values

The matplotlib hist function is useful when we want to bin and plot data in one action, but sometimes we want to plot a histogram where the values are pre-assigned, e.g. from data already in the form of a histogram or where we have already binned our data using a separate function. There isn’t a separate function to do this in matplotlib, but it is easy to use the weights argument of hist to plot pre-assigned values, by using the following trick.

First, let’s define some data we have been given, in the form of bin edges and counts per bin:

counts = np.array([3,5,8,12,6,4,4,1])

bin_edges = np.array([0,20,30,40,50,60,70,90,120])

# We want to plot the histogram in units of density:

dens = counts/(np.diff(bin_edges)*np.sum(counts))

Now we trick hist by giving as input data a list of the bin centres, and as weights the input values we want to plot. This works because the weights are multiplied by the number of data values assigned to each bin, in this case one per bin (since we gave the bin centres as ‘dummy’ data), so we essentially just plot the weights we have given instead of the counts.

# Define the 'dummy' data values to be the bin centres, so each bin contains one value

dum_vals = (bin_edges[1:] + bin_edges[:-1])/2

plt.figure()

# Our histogram is already given as a density, so set density=False to switch calculation off

densities, edges, patches = plt.hist(dum_vals, bins=bin_edges, weights=dens, density=False, histtype='step')

plt.xlabel("Data values (arbitrary units)", fontsize=14)

plt.ylabel("Density (arbitrary units)", fontsize=14)

plt.tick_params(axis='x', labelsize=12)

plt.tick_params(axis='y', labelsize=12)

plt.show()
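If you want to convince yourself that the trick returns exactly the values you supplied, you can repeat it without plotting. The sketch below assumes that np.histogram treats the weights argument in the same way as plt.hist, and reuses the counts and bin edges defined above.

```python
import numpy as np

counts = np.array([3, 5, 8, 12, 6, 4, 4, 1])
bin_edges = np.array([0, 20, 30, 40, 50, 60, 70, 90, 120])
dens = counts / (np.diff(bin_edges) * np.sum(counts))

# One 'dummy' value per bin, placed at the bin centre
dum_vals = (bin_edges[1:] + bin_edges[:-1]) / 2

# Each bin holds one dummy value, so the binned result is just its weight
binned, _ = np.histogram(dum_vals, bins=bin_edges, weights=dens)

print(np.allclose(binned, dens))            # weights come back unchanged
print(np.sum(dens * np.diff(bin_edges)))    # densities integrate to 1
```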

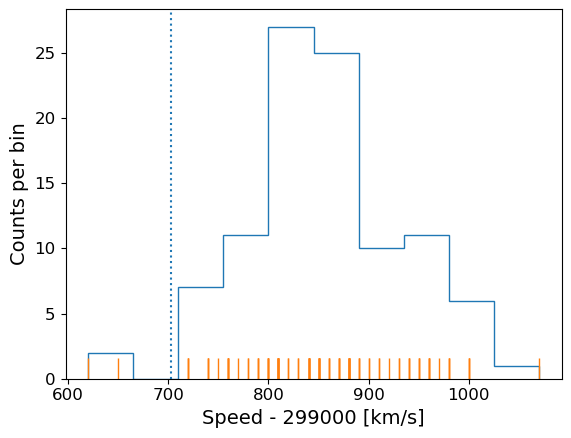

Combined rug plot and histogram

Going back to the speed of light data, the histogram covers quite a wide range of values, with a central peak and broad ‘wings’. This spread could indicate statistical error, i.e. experimental measurement error due to the intrinsic precision of the experiment, e.g. from random fluctuations in the equipment, the experimenter’s eyesight etc.

The actual speed of light in air is 299703 km/s. We can plot this on our histogram, and also add a ‘rug’ of vertical lines along the base of the plot to highlight the individual measurements and see if we can see any pattern in the scatter.

plt.figure()

# Make and plot histogram (note that patches are matplotlib drawing objects)

counts, edges, patches = plt.hist(speed, bins=10, density=False, histtype='step')

# We can plot the 'rug' using the x values and setting y to zero, with vertical lines for the

# markers and connecting lines switched off using linestyle='None'

plt.plot(speed, np.zeros(len(speed)), marker='|', ms=30, linestyle='None')

# Add a vertical dotted line at 703 km/s

plt.axvline(703,linestyle='dotted')

plt.xlabel("Speed - 299000 [km/s]", fontsize=14)

plt.ylabel("Counts per bin", fontsize=14)

plt.tick_params(axis='x', labelsize=12)

plt.tick_params(axis='y', labelsize=12)

plt.show()

The rug plot shows no clear pattern other than the equal 10 km/s spacing between many data points that is imposed by the rounding of speed values. However, the data are quite far from the correct value. This could indicate a systematic error, e.g. due to a mistake in the experimental setup or a flaw in the apparatus. A final, more interesting possibility is that the speed of light itself has changed!

Statistical and systematic error

When experimental results or observations are presented you will often hear discussion of two kinds of error:

- statistical error is a random error, due for example to measurement errors (i.e. related to the precision of the measuring device) or intrinsic randomness in the quantities being measured (so that, by chance, the data sample may be more or less representative of the true distribution or properties). Statistical errors do not have a preferred direction (they may be positive or negative deviations from the ‘true’ values) although their distribution may not be symmetric (e.g. positive fluctuations may on average be larger than negative, or vice versa).

- systematic error is a non-random error in a particular direction. For example, a mistake or design flaw in the experimental setup may lead to the measurement being systematically too large or too small. Or, when data are collected via observations, a bias in the way a sample is selected may lead to the distribution of the measured quantity being distorted (e.g. selecting a sample of stellar colours on bright stars will preferentially select luminous ones which are hotter and therefore bluer, causing blue stars to be over-represented). Systematic errors have a preferred direction and since they are non-random, dealing with them requires good knowledge of the experiment or sample selection approach (and underlying population properties), rather than simple application of statistical methods (although statistical methods can help in the simulation and calibration of systematic errors, and to determine if they are present in your data or not).
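The difference between the two kinds of error can be illustrated with a small simulation. This is only a sketch: the size of the systematic offset, the scatter, and the choice of normally distributed random errors are all assumptions made for illustration, not properties of the Michelson experiment.

```python
import numpy as np

rng = np.random.default_rng(seed=1)  # fixed seed so the sketch is repeatable

true_value = 703.0  # 'true' value, in the same offset units as the speed data
bias = 150.0        # hypothetical systematic offset
sigma = 80.0        # hypothetical statistical scatter of one measurement

offsets = {}
scatters = {}
for n in (100, 10000):
    # Every measurement shares the same bias but has its own random error
    sample = true_value + bias + rng.normal(0.0, sigma, size=n)
    offsets[n] = np.mean(sample) - true_value
    scatters[n] = np.std(sample, ddof=1)
    print(n, offsets[n], scatters[n])
```

Taking more measurements pins down the average more tightly, but the average settles on the biased value rather than the true one: collecting more data reduces the effect of statistical error, not systematic error.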

Asking questions about the data: hypotheses

At this point we might be curious about our data and what it is really telling us, and we can start asking questions, for example:

- Is the deviation from the known speed of light in air real, e.g. due to systematic error in the experiment or a real change in the speed of light(!), or can it just be down to bad luck with measurement errors pushing us to one side of the curve (i.e. the deviation is within expectations for statistical errors)?

This kind of question about data can be answered using statistical methods. The challenge is to frame the question into a form called a hypothesis to which a statistical test can be applied, which will either rule the hypothesis out or not. We can never say that a hypothesis is really true, only that it isn’t true, to some degree of confidence.

To see how this works, we first need to think how we can frame our questions in a more quantifiable way, using summary statistics for our data.

Summary statistics: quantifying data distributions

So far we have seen what the data looks like and that most of the data are distributed on one side of the true value of the speed of light in air. To make further progress we need to quantify the difference and then figure out how to say whether that difference is expected by chance (statistical error) or is due to some other underlying effect (in this case, systematic error since we don’t expect a real change in the speed of light!).

However, the data take on a range of values - they follow a distribution which we can approximate using the histogram. We call this the sample distribution, since our data can be thought of as a sample of measurements from a much larger set of potential measurements (known in statistical terms as the population). To compare with the expected value of the speed of the light it is useful to turn our data into a single number, reflecting the ‘typical’ value of the sample distribution.

We can start with the sample mean, often just called the mean:

\[\bar{x} = \frac{1}{n} \sum\limits_{i=1}^{n} x_{i}\]

where our sample consists of \(n\) measurements \(x = x_{1}, x_{2}, ...x_{n}\).

It is also common to use a quantity called the median which corresponds to the ‘central’ value of \(x\) and is obtained by arranging \(x\) in numerical order and taking the middle value if \(n\) is an odd number. If \(n\) is even we take the average of the \((n/2)\)th and \((n/2+1)\)th ordered values.

Challenge: obtain the mean and median

Numpy has fast (C-based and Python-wrapped) implementations of most basic functions, among them the mean and median. Find and use these to calculate the mean and median for the speed values in the complete Michelson data set.

Solution

michelson_mn = np.mean(speed) + 299000 ## Mean speed with offset added back in

michelson_md = np.median(speed) + 299000 ## Median speed with offset added back in

print("Mean =",michelson_mn,"and median =",michelson_md)

Mean = 299852.4 and median = 299850.0
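To connect the numpy functions back to the definitions, here is a minimal sketch that computes the median by hand for an odd-sized and an even-sized sample. The helper function name is made up for this illustration, and the samples are just the first few speed values.

```python
import numpy as np

def median_from_definition(values):
    """Median computed from the definition (illustrative helper)."""
    ordered = np.sort(np.asarray(values, dtype=float))
    n = len(ordered)
    if n % 2 == 1:
        # Odd n: take the middle ordered value (0-based index n//2)
        return ordered[n // 2]
    # Even n: average the (n/2)th and (n/2+1)th ordered values
    return 0.5 * (ordered[n // 2 - 1] + ordered[n // 2])

odd_sample = [850., 740., 900., 1070., 930.]
even_sample = [850., 740., 900., 1070., 930., 850.]

print(median_from_definition(odd_sample), np.median(odd_sample))
print(median_from_definition(even_sample), np.median(even_sample))
print(np.sum(odd_sample) / len(odd_sample), np.mean(odd_sample))
```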

We can also define the mode, which is the most frequent value in the data distribution. This does not really make sense for continuous data (then it makes more sense to define the mode of the population rather than the sample), but one can do this for discrete data.

Challenge: find the mode

As shown by our rug plot, the speed-of-light data here is discrete in the sense that it is rounded to the nearest ten km/s. Numpy has a useful function histogram for finding the histogram of the data without plotting anything. Apart from the plotting arguments, it has the same arguments for calculating the histogram as matplotlib’s hist. Use this (and other numpy functions) to find the mode of the speed of light measurements.

Hint

You will find the numpy functions amin, amax and argmax useful for this challenge.

Solution

# Calculate number of bins needed for each value to lie in the centre of

# a bin of 10 km/s width

nbins = ((np.amax(speed)+5)-(np.amin(speed)-5))/10

# To calculate the histogram we should specify the range argument to the min and max bin edges used above

counts, edges = np.histogram(speed,bins=int(nbins),range=(np.amin(speed)-5,np.amax(speed)+5))

# Now use argmax to find the index of the bin with the maximum counts and print the bin centre

i_max = np.argmax(counts)

print("Maximum counts for speed[",i_max,"] =",edges[i_max]+5.0)

Maximum counts for speed[ 19 ] = 810.0

However, if we print out the counts, we see that there is another bin with the same number of counts!

[ 1 0 0 1 0 0 0 0 0 0 3 0 3 1 5 1 2 3 5 10 2 2 8 8 3 4 10 3 2 2 1 2 3 3 4 1 3 0 3 0 0 0 0 0 0 1]

If there is more than one maximum, argmax outputs the index of the first occurrence.

Scipy provides a simpler way to obtain the mode of a data array:

## Remember that for scipy you need to import modules individually, you cannot

## simply use `import scipy`

import scipy.stats

print(scipy.stats.mode(speed))

ModeResult(mode=array([810.]), count=array([10]))

I.e. the mode corresponds to value 810 with a total of 10 counts. Note that scipy.stats.mode also returns only the first occurrence of multiple maxima.
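If you need every tied mode rather than only the first, one possible approach (a sketch, not part of the original solution) is to count the occurrences of each distinct value with np.unique and keep all values that reach the maximum count. The toy array below is made up for illustration.

```python
import numpy as np

# Hypothetical toy data with two equally frequent values
toy = np.array([810., 880., 850., 810., 880., 760.])

# Distinct values (sorted) and the number of times each occurs
values, counts = np.unique(toy, return_counts=True)

# Keep every value whose count equals the maximum count
modes = values[counts == counts.max()]
print(modes)
```

This only makes sense for discrete (or rounded) data such as these, where exact repeats of a value are expected.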

The mean, median and mode(s) of our data are all larger than 299703 km/s by more than 100 km/s, but is this difference really significant? The answer will depend on how precise our measurements are, that is, how tightly clustered are they around the same values? If the precision of the measurements is low, we can have less confidence that the data are really measuring a different value to the true value.

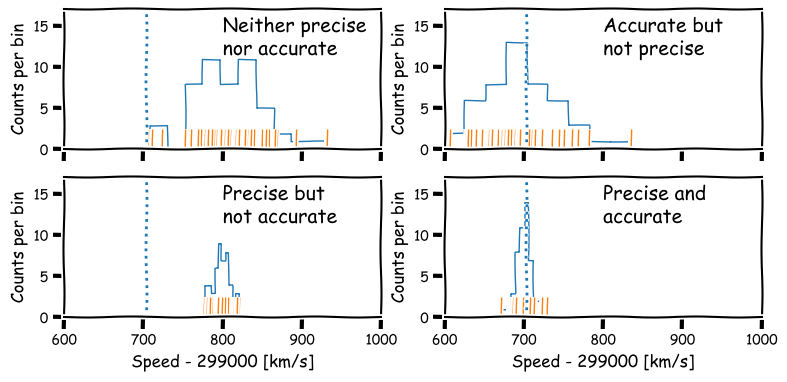

Precision and accuracy

In daily speech we usually take the words precision and accuracy to mean the same thing, but in statistics they have distinct meanings and you should be careful when you use them in a scientific context:

- Precision refers to the degree of random deviation, e.g. how broad a measured data distribution is.

- Accuracy refers to how much non-random deviation there is from the true value, i.e. how close the measured data are on average to the ‘true’ value of the quantity being measured.

In terms of errors, high precision corresponds to low statistical error (and vice versa) while high accuracy refers to low systematic error (or equivalently, low bias).

We will be able to make statements comparing data with an expected value more quantitatively after a few more episodes, but for now it is useful to quantify the precision of our distribution in terms of its width. For this, we calculate a quantity known as the variance.

\[s_{x}^{2} = \frac{1}{n-1} \sum\limits_{i=1}^{n} (x_{i}-\bar{x})^{2}\]

Variance is a squared quantity but we can convert to the standard deviation \(s_{x}\) by taking the square root:

\[s_{x} = \sqrt{\frac{1}{n-1} \sum\limits_{i=1}^{n} (x_{i}-\bar{x})^{2}}\]

You can think of this as a measure of the ‘width’ of the distribution and thus an indication of the precision of the measurements.

Note that the sum to calculate variance (and standard deviation) is normalised by \(n-1\), not \(n\), unlike the mean. This is called Bessel’s correction and is a correction for bias in the calculation of sample variance compared to the ‘true’ population variance - we will see the origin of this in a couple of episodes’ time. The number subtracted from \(n\) (in this case, 1) accounts for the degree of freedom used up by estimating the mean from the same data, so that \(n-1\) degrees of freedom remain.

Challenge: calculate variance and standard deviations

Numpy contains functions for both variance and standard deviation - find and use these to calculate these quantities for the speed data in the Michelson data set. Be sure to check and use the correct number of degrees of freedom (Bessel’s correction), as the default may not be what you want! Always check the documentation of any functions you use, to understand what the function does and what the default settings or assumptions are.

Solution

## The argument ddof sets the number subtracted from n (the 'delta degrees of freedom'),

## which should be 1 here (for Bessel's correction)

michelson_var = np.var(speed, ddof=1)

michelson_std = np.std(speed, ddof=1)

print("Variance:",michelson_var,"and s.d.:",michelson_std)

Variance: 6242.666666666667 and s.d.: 79.01054781905178
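To see the effect of the ddof argument explicitly, the sketch below computes the variance directly from the formula above for a small made-up sample and compares it with numpy, with and without Bessel’s correction.

```python
import numpy as np

# Hypothetical toy sample, chosen so the arithmetic is easy to follow
x = np.array([2., 4., 4., 4., 5., 5., 7., 9.])
n = len(x)

xbar = np.sum(x) / n                          # sample mean (5.0 here)
var_def = np.sum((x - xbar)**2) / (n - 1)     # variance normalised by n-1

print(var_def, np.var(x, ddof=1))             # these two should agree
print(np.var(x))                              # numpy default ddof=0 divides by n
print(np.sqrt(var_def), np.std(x, ddof=1))    # standard deviation
```

For this sample the squared deviations sum to 32, so the corrected variance is 32/7 while the numpy default gives 32/8. The difference shrinks as \(n\) grows but is not negligible for small samples.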

Population versus sample

In frequentist approaches to statistics it is common to imagine that samples of data (that is, the actual measurements) are drawn from a much (even infinitely) larger population which follows a well-defined (if unknown) distribution. In some cases, the underlying population may be a real one, for example a sample of stellar masses will be drawn from the masses of an actual population of stars. In other cases, the population may be purely notional, e.g. as if the data are taken from an infinite pool of possible measurements from the same experiment (and associated errors). In either case the statistical term population refers to something for which a probability distribution can be defined.

Therefore, it is important to make a distinction between the sample mean and variance, which are calculated from the data (and therefore not fixed, since the sample statistics are themselves random variates), and the population mean and variance (which are fixed and well-defined for a given probability distribution). Over the next few episodes, we will see how population statistics are derived from probability distributions, and how to relate them to the sample statistics.

Hypothesis testing with statistics

The sample mean, median, mode and variance that we have defined are all statistics.

A statistic is a single number calculated by applying a statistical algorithm/function to the values of the items in the sample (the data). We will discuss other statistics later on, but they all share the property that they are single numbers that are calculated from data.

Statistics are drawn from a distribution determined by the population distribution (note that the distribution of a statistic is not necessarily the same as that of the population which the data used to calculate the statistic is drawn from!).

A statistic can be used to test a hypothesis about the data, often by comparing it with the expected distribution of the statistic, given some assumptions about the population. When used in this way, it is called a test statistic.

So, let’s now reframe our earlier question about the data into a hypothesis that we can test:

- Is the deviation real or due to statistical error?

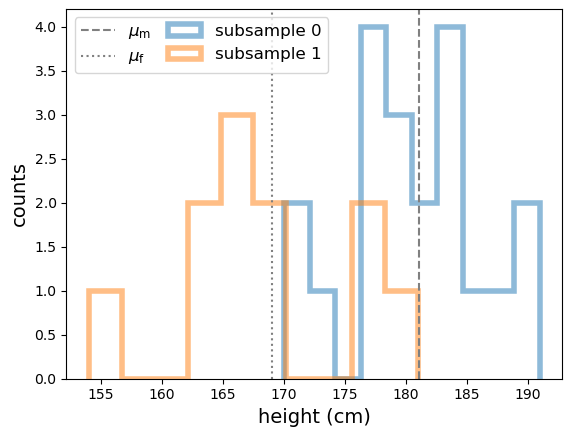

Since there is not one single measured value, but a distribution of measured values of the speed of light, it makes sense (in the absence of further information) to not prefer any particular measurement, but to calculate the sample mean of our measured values of the speed of light. Furthermore, we do not (yet) have any information that should lead us to prefer any of the 5 Michelson experiments, so we will weight them all equally and calculate the mean from the 100 measured values.

Our resulting sample mean is 299852.4 km/s. This compares with the known value (in air) of 299703 km/s, so there is a difference of \(\simeq 149\) km/s.

The standard deviation of the data is 79 km/s, so the sample mean is less than 2 standard deviations from the known value, which doesn’t sound like a lot (some data points are further than this difference from the mean). However, we would expect that the random variation on the mean should be smaller than the variation of individual measurements, since averaging the individual measurements should smooth out their variations.

To assess whether or not the difference is compatible with statistical errors, we need to work out what the ‘typical’ random variation of the mean should be. But we first need to deepen our understanding about probability distributions and random variables.
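The expectation that the mean scatters less than individual measurements can be checked with a quick simulation. This is only a sketch under stated assumptions: we pretend the measurement errors are normally distributed with a standard deviation of 79 km/s (the lesson has not yet established either), and simulate many repeats of a 100-measurement experiment.

```python
import numpy as np

rng = np.random.default_rng(seed=42)  # fixed seed so the sketch is repeatable

sigma = 79.0         # assumed scatter of a single measurement (km/s)
n_per_sample = 100   # measurements per simulated experiment
n_samples = 2000     # number of simulated experiments

# Each row is one simulated experiment of 100 measurements
samples = rng.normal(0.0, sigma, size=(n_samples, n_per_sample))
sample_means = samples.mean(axis=1)

scatter_single = np.std(samples, ddof=1)      # spread of individual values
scatter_means = np.std(sample_means, ddof=1)  # spread of the sample means
print(scatter_single, scatter_means)
```

Under these assumptions the sample means scatter far less than the individual values, which is why a difference of less than 2 standard deviations of the data can still be a large difference for the mean.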

Why not use the median?

You might reasonably ask why we don’t use the median as our test statistic here. The simple answer is that the mean is a more useful test statistic with some very important properties. Most importantly it follows the central limit theorem, which we will learn about later on.

The median is useful in other situations, e.g. when defining a central value of data where the distribution is highly skewed or contains outliers which you don’t want to remove.

Key Points

Univariate data can be plotted using histograms, e.g. with matplotlib.pyplot.hist. Histograms can also be calculated (without plotting) using numpy.histogram.

Pre-binned data can be plotted using matplotlib.pyplot.hist using weights matching the binned frequencies/densities and bin centres used as dummy values to be binned.

Statistical errors are due to random measurement errors or randomness of the population being sampled, while systematic errors are non-random and linked to faults in the experiment or limitations/biases in sample collection.

Precise measurements have low relative statistical error, while accurate measurements have low relative systematic error.

Data distributions can be quantified using sample statistics such as the mean and median and variance or standard-deviation (quantifying the width of the distribution), e.g. with numpy functions mean, median, var and std. Remember to check the degrees of freedom assumed for the variance and standard deviation functions!

Quantities calculated from data such as mean, median and variance are statistics. Hypotheses about the data can be tested by comparing a suitable test statistic with its expected distribution, given the hypothesis and appropriate assumptions.

Introducing probability distributions

Overview

Teaching: 30 min

Exercises: 10 min

Questions

How are probability distributions defined and described?

Objectives

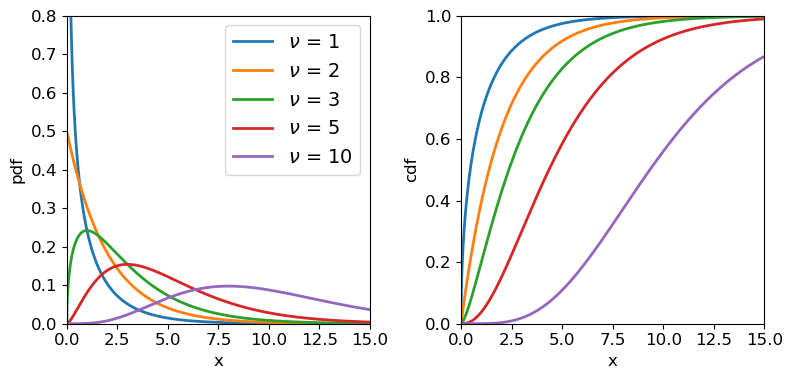

Learn how the pdf, cdf, quantiles, ppf are defined and how to plot them using scipy.stats distribution functions and methods.

In this episode we will be using numpy, as well as matplotlib’s plotting library. Scipy contains an extensive range of distributions in its ‘scipy.stats’ module, so we will also need to import it. Remember: scipy modules should be imported separately as required - they cannot be called if only scipy is imported.

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as sps

Michelson’s speed-of-light data is a form of random variable. Clearly the measurements are close to the true value of the speed of light in air, to within <0.1 per cent, but they are distributed randomly about some average which may not even be the true value (e.g. due to systematic error in the measurements).

We can gain further understanding by realising that random variables do not just take on any value - they are drawn from some probability distribution. In probability theory, a random measurement (or even a set of measurements) is an event which occurs (is ‘drawn’) with a fixed probability, assuming that the experiment is fixed and the underlying distribution being measured does not change over time (statistically we say that the random process is stationary).

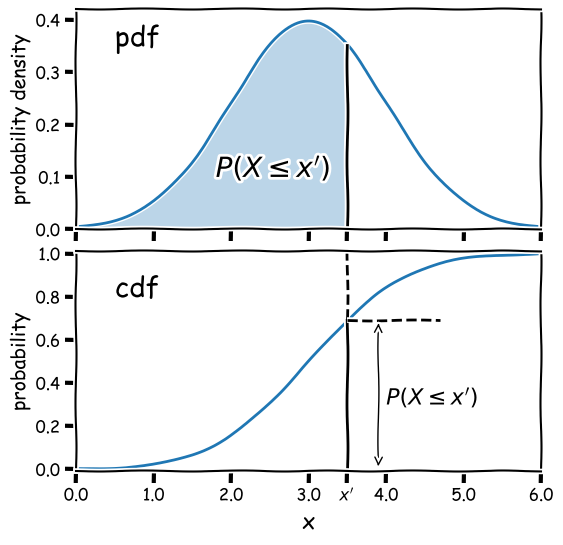

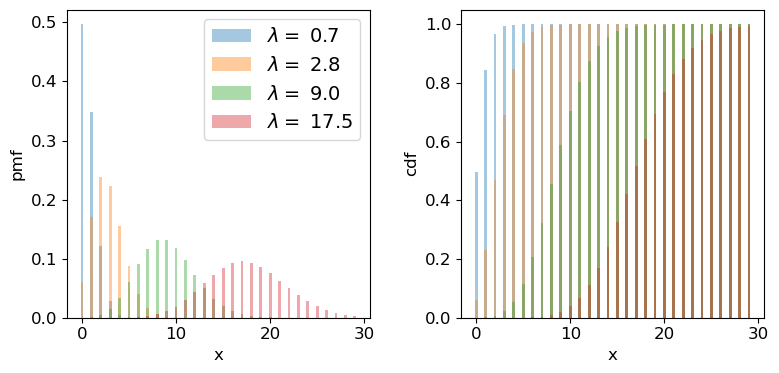

The cdf and pdf of a probability distribution

Consider a continuous random variable \(X\) (for example, a single measurement). For a fixed probability distribution over all possible values \(x\), we can define the probability \(P\) that \(X\leq x\) as being the cumulative distribution function (or cdf), \(F(x)\):

\[F(x) = P(X\leq x)\]

We can choose the limiting values of our distribution, but since the variable must take on some value (i.e. the definition of an ‘event’ is that something must happen) it must satisfy:

\(\lim\limits_{x\rightarrow -\infty} F(x) = 0\) and \(\lim\limits_{x\rightarrow +\infty} F(x) = 1\)

From these definitions we find that the probability that \(X\) lies in the closed interval \([a,b]\) (note: a closed interval, denoted by square brackets, means that we include the endpoints \(a\) and \(b\)) is:

\[P(a \leq X \leq b) = F(b) - F(a)\]

We can then take the limit of a very small interval \([x,x+\delta x]\) to define the probability density function (or pdf), \(p(x)\):

\[\frac{P(x\leq X \leq x+\delta x)}{\delta x} = \frac{F(x+\delta x)-F(x)}{\delta x}\]

\[p(x) = \lim\limits_{\delta x \rightarrow 0} \frac{P(x\leq X \leq x+\delta x)}{\delta x} = \frac{\mathrm{d}F(x)}{\mathrm{d}x}\]

This means that the cdf is the integral of the pdf, e.g.:

\[P(X \leq x) = F(x) = \int^{x}_{-\infty} p(x^{\prime})\mathrm{d}x^{\prime}\]

where \(x^{\prime}\) is a dummy variable. The probability that \(X\) lies in the interval \([a,b]\) is:

\[P(a \leq X \leq b) = F(b) - F(a) = \int_{a}^{b} p(x)\mathrm{d}x\]

and \(\int_{-\infty}^{\infty} p(x)\mathrm{d}x = 1\).
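These relations are easy to check numerically. The sketch below uses a simple made-up distribution, with pdf \(p(x)=2x\) and cdf \(F(x)=x^{2}\) on the interval \([0,1]\) (chosen only because both are easy to write down), and verifies that integrating the pdf gives the difference in the cdf, and that the slope of the cdf gives back the pdf.

```python
import numpy as np

def pdf(x):
    return 2.0 * x   # p(x) = 2x on [0, 1] (illustrative choice)

def cdf(x):
    return x**2      # F(x) = x^2 on [0, 1], the integral of p(x)

# P(a <= X <= b) from integrating the pdf with the trapezium rule
a, b = 0.2, 0.5
x = np.linspace(a, b, 1001)
y = pdf(x)
integral = np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x))
print(integral, cdf(b) - cdf(a))

# The pdf as the numerical derivative of the cdf (interior points only,
# since the finite differences are less accurate at the two end points)
grid = np.linspace(0.0, 1.0, 1001)
slope = np.gradient(cdf(grid), grid)
max_diff = np.max(np.abs(slope[1:-1] - pdf(grid[1:-1])))
print(max_diff)
```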

Why use the pdf?

By definition, the cdf can be used to directly calculate probabilities (which is very useful in statistical assessments of data), while the pdf only gives us the probability density for a specific value of \(X\). So why use the pdf? One of the main reasons is that it is generally much easier to calculate the pdf for a particular probability distribution, than it is to calculate the cdf, which requires integration (which may be analytically impossible in some cases!).

Also, the pdf gives the relative probabilities (or likelihoods) for particular values of \(X\) and the model parameters, allowing us to compare the relative likelihood of hypotheses where the model parameters are different. This principle is a cornerstone of statistical inference which we will come to later on.

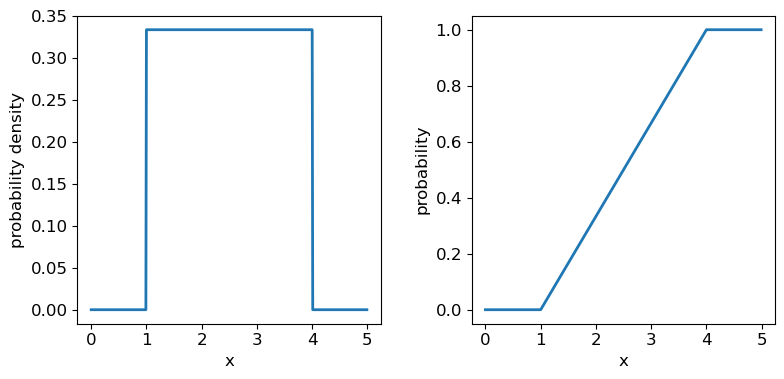

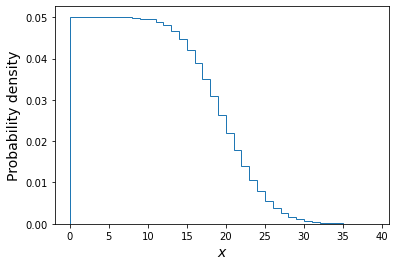

Probability distributions: Uniform

Now we’ll introduce two common probability distributions, and see how to use them in your Python data analysis. We start with the uniform distribution, which has equal probability values defined over some finite interval \([a,b]\) (and zero elsewhere). The pdf is given by:

\[p(x\vert a,b) = 1/(b-a) \quad \mathrm{for} \quad a \leq x \leq b\]

where the notation \(p(x\vert a,b)\) means ‘probability density at x, conditional on model parameters \(a\) and \(b\)‘. The vertical line \(\vert\) meaning ‘conditional on’ (i.e. ‘given these existing conditions’) is notation from probability theory which we will use often in this course.

## define parameters for our uniform distribution

a = 1

b = 4

print("Uniform distribution with limits",a,"and",b,":")

## freeze the distribution for a given a and b

ud = sps.uniform(loc=a, scale=b-a) # The 2nd parameter is added to a to obtain the upper limit = b

Distribution parameters: location, scale and shape

As in the above example, it is often useful to ‘freeze’ a distribution by fixing its parameters and defining the frozen distribution as a new function, which saves repeating the parameters each time. The common format for arguments of scipy statistical distributions which represent distribution parameters, corresponds to statistical terminology for the parameters:

- A location parameter (the loc argument in the scipy function) determines the location of the distribution on the \(x\)-axis. Changing the location parameter just shifts the distribution along the \(x\)-axis.

- A scale parameter (the scale argument in the scipy function) determines the width or (more formally) the statistical dispersion of the distribution. Changing the scale parameter just stretches or shrinks the distribution along the \(x\)-axis but does not otherwise alter its shape.

- There may be one or more shape parameters (scipy function arguments may have different names specific to the distribution). These are parameters which do something other than shifting, or stretching/shrinking the distribution, i.e. they change the shape in some way.

Distributions may have all or just one of these parameters, depending on their form. For example, normal distributions are completely described by their location (the mean) and scale (the standard deviation), while exponential distributions (and the related discrete Poisson distribution) may be defined by a single parameter which sets their location as well as width. Some distributions use a rate parameter which is the reciprocal of the scale parameter (exponential/Poisson distributions are an example of this).
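To see what the location and scale parameters do in practice, here is a short sketch (with arbitrary illustrative values for loc and scale) showing that a normal pdf with a given loc and scale is just the standard normal pdf shifted by loc and stretched by scale. The division by scale keeps the total probability equal to 1:

```python
import numpy as np
import scipy.stats as sps

# Illustrative x-values and parameter values (assumptions chosen for this sketch)
x = np.linspace(-2.0, 10.0, 7)
loc, scale = 3.0, 2.0

# pdf evaluated with explicit loc and scale arguments
pdf_direct = sps.norm.pdf(x, loc=loc, scale=scale)

# The same pdf built from the standard form: shift by loc, stretch by scale,
# and divide by scale so that the pdf still integrates to 1
pdf_from_standard = sps.norm.pdf((x - loc) / scale) / scale

print("Same values:", np.allclose(pdf_direct, pdf_from_standard))
```

The same shift-and-stretch relation should hold for the other continuous scipy.stats distributions, with any shape parameters left unchanged.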

The uniform distribution has a scale parameter \(\lvert b-a \rvert\). This statistical distribution’s location parameter is formally the centre of the distribution, \((a+b)/2\), but for convenience the scipy uniform function uses \(a\) to place a bound on one side of the distribution. We can obtain and plot the pdf and cdf by applying those named methods to the scipy function. Note that we must also use a suitable function (e.g. numpy.arange) to create a sufficiently dense range of \(x\)-values to make the plots over.

## You can plot the probability density function

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

# change the separation between the sub-plots:

fig.subplots_adjust(wspace=0.3)

x = np.arange(0., 5.0, 0.01)

ax1.plot(x, ud.pdf(x), lw=2)

## or you can plot the cumulative distribution function:

ax2.plot(x, ud.cdf(x), lw=2)

for ax in (ax1,ax2):

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

ax1.set_ylabel("probability density", fontsize=12)

ax2.set_ylabel("probability", fontsize=12)

plt.show()

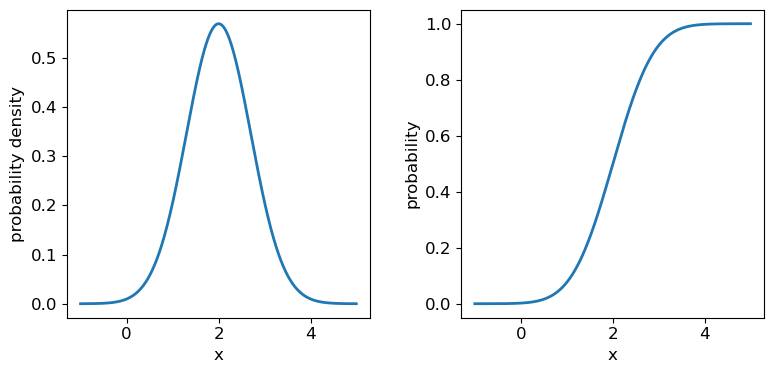

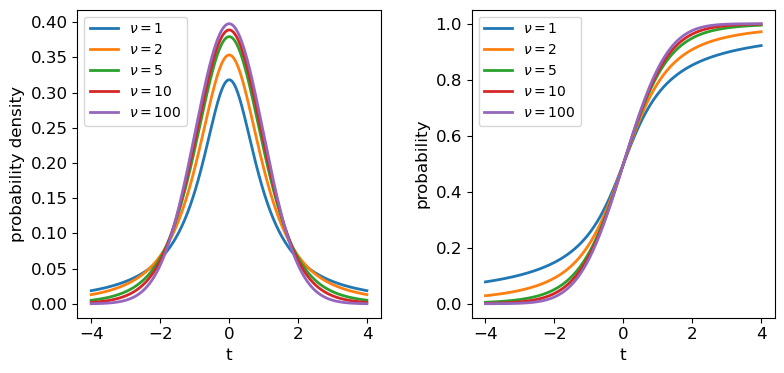

Probability distributions: Normal

The normal distribution is one of the most important in statistical data analysis (for reasons which will become clear) and is also known to physicists and engineers as the Gaussian distribution. The distribution is defined by location parameter \(\mu\) (often just called the mean, but not to be confused with the mean of a statistical sample) and scale parameter \(\sigma\) (also called the standard deviation, but again not to be confused with the sample standard deviation). The pdf is given by:

\[p(x\vert \mu,\sigma)=\frac{1}{\sigma \sqrt{2\pi}} e^{-(x-\mu)^{2}/(2\sigma^{2})}\]

It is also common to refer to the standard normal distribution which is the normal distribution with \(\mu=0\) and \(\sigma=1\):

\[p(z\vert 0,1) = \frac{1}{\sqrt{2\pi}} e^{-z^{2}/2}\]

The standard normal is important for many statistical results, including the approach of defining statistical significance in terms of the number of ‘sigmas’ which refers to the probability contained within a range \(\pm z\) on the standard normal distribution (we will discuss this in more detail when we discuss statistical significance testing).
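As a quick illustration of what 'sigmas' mean in terms of probability, the sketch below uses the cdf of the standard normal to calculate the probability contained within \(\pm z\) for \(z=1, 2, 3\) (the dictionary name probs is just a label chosen for this example):

```python
import scipy.stats as sps

# Probability contained within +/- z of the mean of the standard normal,
# calculated as F(z) - F(-z)
probs = {}
for z in (1, 2, 3):
    probs[z] = sps.norm.cdf(z) - sps.norm.cdf(-z)
    print(f"Probability within +/-{z} sigma: {probs[z]:.4f}")
```

This gives approximately 0.6827, 0.9545 and 0.9973 for 1, 2 and 3 sigma respectively.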

Challenge: plotting the normal distribution

Now that you have seen the example of a uniform distribution, use the appropriate scipy.stats function to plot the pdf and cdf of the normal distribution, for a mean and standard deviation of your choice (you can freeze the distribution first if you wish, but it is not essential).

Solution

## Define mu and sigma:

mu = 2.0

sigma = 0.7

## Plot the probability density function

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

fig.subplots_adjust(wspace=0.3)

## we will plot just over +/- 4 sigma on either side of the mean

x = np.arange(-1.0, 5.0, 0.01)

ax1.plot(x, sps.norm.pdf(x,loc=mu,scale=sigma), lw=2)

## and the cumulative distribution function:

ax2.plot(x, sps.norm.cdf(x,loc=mu,scale=sigma), lw=2)

for ax in (ax1,ax2):

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

ax1.set_ylabel("probability density", fontsize=12)

ax2.set_ylabel("probability", fontsize=12)

plt.show()

It’s useful to note that the pdf is much more distinctive for different functions than the cdf, which (because of how it is defined) always takes on a similar, slanted ‘S’-shape, hence there is some similarity in the form of cdf between the normal and uniform distributions, although their pdfs look radically different.

Quantiles

It is often useful to be able to calculate the quantiles (such as percentiles or quartiles) of a distribution, that is, what value of \(x\) corresponds to a fixed interval of integrated probability? We can obtain these from the inverse function of the cdf (\(F(x)\)). E.g. for the quantile \(\alpha\):

\[F(x_{\alpha}) = \int^{x_{\alpha}}_{-\infty} p(x)\mathrm{d}x = \alpha \Longleftrightarrow x_{\alpha} = F^{-1}(\alpha)\]

Note that \(F^{-1}\) denotes the inverse function of \(F\), not \(1/F\)! The inverse cdf is called the percent point function (or ppf). To obtain a given quantile for a distribution we can use the scipy.stats method ppf applied to the distribution function. For example:

## Print the 30th percentile of a normal distribution with mu = 3.5 and sigma=0.3

print("30th percentile:",sps.norm.ppf(0.3,loc=3.5,scale=0.3))

## Print the median (50th percentile) of the distribution

print("Median (via ppf):",sps.norm.ppf(0.5,loc=3.5,scale=0.3))

## There is also a median method to quickly return the median for a distribution:

print("Median (via median method):",sps.norm.median(loc=3.5,scale=0.3))

30th percentile: 3.342679846187588

Median (via ppf): 3.5

Median (via median method): 3.5
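Since the ppf is the inverse of the cdf, passing quantiles through the ppf and then through the cdf should return the original quantiles. A minimal sketch of this round trip, using the same normal distribution as above and an arbitrary set of quantiles:

```python
import numpy as np
import scipy.stats as sps

# Freeze the normal distribution used in the example above
nd = sps.norm(loc=3.5, scale=0.3)

# An arbitrary set of quantiles (values of integrated probability)
q = np.array([0.05, 0.3, 0.5, 0.95])

# ppf maps quantile -> x, cdf maps x -> quantile
x_q = nd.ppf(q)
q_back = nd.cdf(x_q)

print("x values for the quantiles:", x_q)
print("Quantiles recovered from the cdf:", q_back)
```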

Intervals

It is sometimes useful to be able to quote an interval, containing some fraction of the probability (and usually centred on the median) as a ‘typical’ range expected for the random variable \(X\). We will discuss intervals on probability distributions further when we discuss confidence intervals on parameters. For now, we note that the .interval method can be used to obtain a given interval centred on the median. For example, the Interquartile Range (IQR) is often quoted as it marks the interval containing half the probability, between the upper and lower quartiles (i.e. from 0.25 to 0.75):

## Print the IQR for a normal distribution with mu = 3.5 and sigma=0.3

print("IQR:",sps.norm.interval(0.5,loc=3.5,scale=0.3))

IQR: (3.2976530749411754, 3.7023469250588246)

So for the normal distribution, with \(\mu=3.5\) and \(\sigma=0.3\), half of the probability is contained in the range \(3.5\pm0.202\) (to 3 decimal places).
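An interval centred on the median is equivalent to a pair of quantiles placed symmetrically about 0.5. The sketch below (variable names are chosen just for this example) reproduces the IQR by calling ppf at 0.25 and 0.75 and compares it with the result of the interval method:

```python
import scipy.stats as sps

# Freeze the normal distribution with mu = 3.5 and sigma = 0.3
nd = sps.norm(loc=3.5, scale=0.3)

# Fraction of probability to be contained in the interval
frac = 0.5

# Lower and upper bounds from the ppf, symmetric in probability about the median
lower = nd.ppf(0.5 - frac / 2)
upper = nd.ppf(0.5 + frac / 2)

# The same interval from the interval method
iqr_from_interval = nd.interval(frac)

print("IQR from ppf:", (lower, upper))
print("IQR from interval:", iqr_from_interval)
```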

Key Points

Probability distributions show how random variables are distributed. Two common distributions are the uniform and normal distributions.

Uniform and normal distributions and many associated functions can be accessed using scipy.stats.uniform and scipy.stats.norm respectively.

The probability density function (pdf) shows the distribution of relative likelihood or frequency of different values of a random variable and can be accessed with the scipy statistical distribution’s pdf method.

The cumulative distribution function (cdf) is the integral of the pdf and shows the cumulative probability for a variable to be equal to or less than a given value. It can be accessed with the scipy statistical distribution’s cdf method.

Quantiles such as percentiles and quartiles give the values of the random variable which correspond to fixed probability intervals (e.g. of 1 per cent and 25 per cent respectively). They can be calculated for a distribution in scipy using the ppf or interval methods.

The percent point function (ppf) (ppf method) is the inverse function of the cdf and shows the value of the random variable corresponding to a given quantile in its distribution.

Probability distributions are defined by common types of parameter such as the location and scale parameters. Some distributions also include shape parameters.

Random variables

Overview

Teaching: 40 min

Exercises: 10 min

Questions

How do I calculate the means, variances and other statistical quantities for numbers drawn from probability distributions?

How is the error on a sample mean calculated?

Objectives

Learn how the expected means and variances of random variables (and functions of them) can be calculated from their probability distributions.

Discover the scipy.stats functionality for calculating statistical properties of probability distributions and how to generate random numbers from them.

Learn how key results such as the standard error on the mean and Bessel’s correction are derived from the statistics of sums of random variables.

In this episode we will be using numpy, as well as matplotlib’s plotting library. Scipy contains an extensive range of distributions in its ‘scipy.stats’ module, so we will also need to import it. Remember: scipy modules should be imported separately as required - they cannot be called if only scipy is imported.

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as sps

So far we have introduced the idea of working with data which contains some random component (as well as a possible systematic error). We have also introduced the idea of probability distributions of random variables. In this episode we will start to make the connection between the two because all data, to some extent, is a collection of random variables. In order to understand how the data are distributed, what the sample properties such as mean and variance can tell us and how we can use our data to test hypotheses, we need to understand how collections of random variables behave.

Properties of random variables: expectation

Consider a set of random variables which are independent, which means that the outcome of one does not affect the probability of the outcome of another. The random variables are drawn from some probability distribution with pdf \(p(x)\).

The expectation \(E[X]\) is the value which the arithmetic mean of the sampled variates (realisations of the variable sampled from its probability distribution) approaches as the number of variates increases \(\rightarrow \infty\). For a continuous probability distribution it is given by the mean of the distribution function, i.e. the pdf:

\[E[X] = \mu = \int_{-\infty}^{+\infty} xp(x)\mathrm{d}x\]

This quantity \(\mu\) is often just called the mean of the distribution, or the population mean to distinguish it from the sample mean of data.

More generally, we can obtain the expectation of some function of \(X\), \(f(X)\):

\[E[f(X)] = \int_{-\infty}^{+\infty} f(x)p(x)\mathrm{d}x\]

It follows that the expectation is a linear operator. So we can also consider the expectation of a scaled sum of variables \(X_{1}\) and \(X_{2}\) (which may themselves have different distributions):

\[E[a_{1}X_{1}+a_{2}X_{2}] = a_{1}E[X_{1}]+a_{2}E[X_{2}]\]

Properties of random variables: variance

The (population) variance of a continuous random variable \(X\) with (population) mean \(\mu\) is the expectation of the function that gives the squared difference from the mean:

\[V[X] = \sigma^{2} = E[(X-\mu)^{2}] = \int_{-\infty}^{+\infty} (x-\mu)^{2} p(x)\mathrm{d}x\]

It is possible to rearrange things:

\(V[X] = E[(X-\mu)^{2}] = E[X^{2}-2X\mu+\mu^{2}]\) \(\rightarrow V[X] = E[X^{2}] - E[2X\mu] + E[\mu^{2}] = E[X^{2}] - 2\mu^{2} + \mu^{2}\) \(\rightarrow V[X] = E[X^{2}] - \mu^{2} = E[X^{2}] - E[X]^{2}\)

In other words, the variance is the expectation of the square minus the square of the expectation. Therefore, for a function of \(X\):

\[V[f(X)] = E[f(X)^{2}] - E[f(X)]^{2}\]
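The integral definitions of expectation and variance can be evaluated numerically. The following sketch assumes a uniform distribution on \([1,8]\) (an arbitrary choice for illustration) and uses scipy.integrate.quad to calculate \(E[X]\) and \(E[X^{2}]\), then compares \(E[X^{2}]-E[X]^{2}\) with the variance returned by the distribution's var method:

```python
import scipy.stats as sps
from scipy.integrate import quad

# Illustrative uniform distribution on [a, b] (assumed values)
a, b = 1.0, 8.0
ud = sps.uniform(loc=a, scale=b - a)

# E[X] and E[X^2] from the integral definition of expectation.
# The pdf is zero outside [a, b], so we only need to integrate over that interval.
e_x, _ = quad(lambda x: x * ud.pdf(x), a, b)
e_x2, _ = quad(lambda x: x**2 * ud.pdf(x), a, b)

# Variance as 'expectation of the square minus square of the expectation'
var_from_expectations = e_x2 - e_x**2

print("E[X]:", e_x)
print("E[X^2] - E[X]^2:", var_from_expectations)
print("Variance from scipy:", ud.var())
```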

Means and variances of the uniform and normal distributions

The distribution of a random variable is given using the notation \(\sim\), meaning ‘is distributed as’. I.e. for \(X\) drawn from a uniform distribution over the interval \([a,b]\), we write \(X\sim U(a,b)\). For this case, we can use the approach given above to calculate the mean: \(E[X] = (b+a)/2\) and variance: \(V[X] = (b-a)^{2}/12\)

The scipy.stats distribution functions include methods to calculate the means and variances for a distribution and given parameters, which we can use to verify these results, e.g.:

## Assume a = 1, b = 8 (remember scale = b-a)

a, b = 1, 8

print("Mean is :",sps.uniform.mean(loc=a,scale=b-a))

print("analytical mean:",(b+a)/2)

## Now the variance

print("Variance is :",sps.uniform.var(loc=a,scale=b-a))

print("analytical variance:",((b-a)**2)/12)

Mean is : 4.5

analytical mean: 4.5

Variance is : 4.083333333333333

analytical variance: 4.083333333333333

For the normal distribution with parameters \(\mu\), \(\sigma\), \(X\sim N(\mu,\sigma)\), the results are very simple because \(E[X]=\mu\) and \(V[X]=\sigma^{2}\). E.g.

## Assume mu = 4, sigma = 3

print("Mean is :",sps.norm.mean(loc=4,scale=3))

print("Variance is :",sps.norm.var(loc=4,scale=3))

Mean is : 4.0

Variance is : 9.0

Generating random variables

So far we have discussed random variables in an abstract sense, in terms of the population, i.e. the continuous probability distribution. But real data is in the form of samples: individual measurements or collections of measurements, so we can get a lot more insight if we can generate ‘fake’ samples from a given distribution.

A random variate is the quantity that is generated, while a random variable is the notional object able to assume different numerical values. The distinction is similar to the distinction in Python between the value 15 and the variable x to which it is assigned by x=15.

The scipy.stats distribution functions have a method rvs for generating random variates that are drawn from the distribution (with the given parameter values). You can either freeze the distribution or specify the parameters when you call it. The number of variates generated is set by the size argument and the results are returned to a numpy array.

# Generate 10 uniform variates from U(0,1) - the default loc and scale arguments

u_vars = sps.uniform.rvs(size=10)

print("Uniform variates generated:",u_vars)

# Generate 10 normal variates from N(20,3)

n_vars = sps.norm.rvs(loc=20,scale=3,size=10)

print("Normal variates generated:",n_vars)

Uniform variates generated: [0.99808848 0.9823057 0.01062957 0.80661773 0.02865487 0.18627394 0.87023007 0.14854033 0.19725284 0.45448424]

Normal variates generated: [20.35977673 17.66489157 22.43217609 21.39929951 18.87878728 18.59606091 22.51755213 21.43264709 13.87430417 23.95626361]

Remember that these numbers depend on the starting seed which is almost certainly unique to your computer (unless you pre-select it: see below). They will also change each time you run the code cell.

How random number generation works

Random number generators use algorithms which are strictly pseudo-random since (at least until quantum computers become mainstream) no algorithm can produce genuinely random numbers. However, for good modern generators the non-randomness is extremely difficult to detect, even in very large samples.

For any distribution, the starting point is to generate uniform random variates in the interval \([0,1]\) (often the interval is half-open \([0,1)\), i.e. exactly 1 is excluded). \(U(0,1)\) is the same distribution as the distribution of percentiles - a fixed range quantile has the same probability of occurring wherever it is in the distribution, i.e. the range 0.9-0.91 has the same probability of occurring as 0.14-0.15. This means that by drawing a \(U(0,1)\) random variate to generate a quantile and putting that in the ppf of the distribution of choice, the generator can produce random variates from that distribution. All this work is done ‘under the hood’ within the scipy.stats distribution function.

It’s important to bear in mind that random number generators work by starting from a ‘seed’ (usually an integer) and then each call to the function will generate a new (pseudo-)independent variate, but the sequence of variates is replicated if you start with the same seed. However, seeds are generated by starting from a system seed set using random and continuously updated data in a special file in your computer, so they will differ each time you run the code.

You can force the seed to take a fixed (integer) value using the numpy.random.seed() function. It also works for the scipy.stats functions, which use numpy.random to generate their random numbers. Include your chosen seed value as a function argument - the sequence of random numbers generated will only change if you change the seed (but their mapping to the distribution - which is via the ppf - will mean that the values are different for different distributions). Use the function with no argument in order to reset back to the system seed.
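The two ideas above can be illustrated with a short sketch. The seed value, sample sizes and distribution parameters are arbitrary choices for this example. The second part shows the principle of passing \(U(0,1)\) variates through the ppf, and is not necessarily how a given scipy.stats distribution generates its variates internally, since some distributions may use faster dedicated algorithms:

```python
import numpy as np
import scipy.stats as sps

# Fixing the seed makes the sequence of variates reproducible
np.random.seed(38)  # arbitrary integer seed
first = sps.norm.rvs(loc=20, scale=3, size=5)
np.random.seed(38)  # reset to the same seed
second = sps.norm.rvs(loc=20, scale=3, size=5)
print("Same seed gives same variates:", np.array_equal(first, second))

# Sketch of the principle: uniform variates are treated as quantiles
# and mapped to the target distribution via its ppf
np.random.seed(38)
u = sps.uniform.rvs(size=100000)      # U(0,1) variates act as quantiles
x = sps.norm.ppf(u, loc=20, scale=3)  # map the quantiles to N(20,3) variates
print("Sample mean:", x.mean(), "sample standard deviation:", x.std(ddof=1))
```

The sample mean and standard deviation of the transformed variates should be close to the chosen values of 20 and 3.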

Sums of random variables

Since the expectation of the sum of two scaled variables is the sum of the scaled expectations, we can go further and write the expectation for a scaled sum of variables \(Y=\sum\limits_{i=1}^{n} a_{i}X_{i}\):

\[E[Y] = \sum\limits_{i=1}^{n} a_{i}E[X_{i}]\]

What about the variance? We can first use the expression in terms of the expectations:

\[V[Y] = E[Y^{2}] - E[Y]^{2} = E\left[ \sum\limits_{i=1}^{n} a_{i}X_{i}\sum\limits_{j=1}^{n} a_{j}X_{j} \right] - \sum\limits_{i=1}^{n} a_{i}E[X_{i}] \sum\limits_{j=1}^{n} a_{j}E[X_{j}]\]

and convert to linear sums of expectations in terms of \(X_{i}\):

\[V[Y] = \sum\limits_{i=1}^{n} a_{i}^{2} E[X_{i}^{2}] + \sum\limits_{i=1}^{n} \sum\limits_{j\neq i} a_{i}a_{j}E[X_{i}X_{j}] - \sum\limits_{i=1}^{n} a_{i}^{2} E[X_{i}]^{2} - \sum\limits_{i=1}^{n} \sum\limits_{j\neq i} a_{i}a_{j}E[X_{i}]E[X_{j}]\;.\]

Of the four terms on the RHS, the squared terms only in \(i\) combine to give the summed variances, while the cross-terms in \(i\) and \(j\) can be paired up to form the so-called covariances (which we will cover when we discuss bi- and multivariate statistics):

\[V[Y] = \sum\limits_{i=1}^{n} a_{i}^{2} \sigma_{i}^{2} + \sum\limits_{i=1}^{n} \sum\limits_{j\neq i} a_{i}a_{j} \sigma_{ij}^{2}\,.\]

For independent random variables, the covariances (taken in the limit of an infinite number of trials, i.e. the expectations of the sample covariance) are equal to zero. Therefore, the expected variance is equal to the sum of the individual variances multiplied by their squared scaling factors:

\[V[Y] = \sum\limits_{i=1}^{n} a_{i}^{2} \sigma_{i}^{2}\]
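We can check this result with simulated data. The sketch below assumes two independent variables, one normal and one uniform, with arbitrary scaling factors and parameters chosen for illustration, and compares the sample mean and variance of the scaled sum with the expected values:

```python
import numpy as np
import scipy.stats as sps

np.random.seed(1)  # arbitrary seed, fixed so the result is reproducible
n = 200000

# Scaling factors and distributions are illustrative assumptions
a1, a2 = 2.0, -0.5
x1 = sps.norm.rvs(loc=1.0, scale=3.0, size=n)     # mean 1, variance 3**2 = 9
x2 = sps.uniform.rvs(loc=0.0, scale=6.0, size=n)  # mean 3, variance 6**2/12 = 3
y = a1 * x1 + a2 * x2

# Expected mean: sum of scaled means
expected_mean = a1 * 1.0 + a2 * 3.0
# Expected variance: sum of variances multiplied by squared scaling factors
expected_var = a1**2 * 3.0**2 + a2**2 * (6.0**2 / 12)

sample_mean = np.mean(y)
sample_var = np.var(y, ddof=1)

print("Expected mean:", expected_mean, "sample mean:", sample_mean)
print("Expected variance:", expected_var, "sample variance:", sample_var)
```

Note that the negative scaling factor still adds to the variance, because the scaling factors enter as squares.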

Intuition builder: covariance of independent random variables

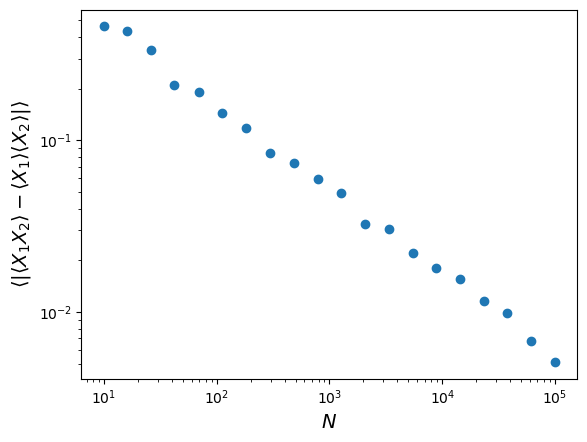

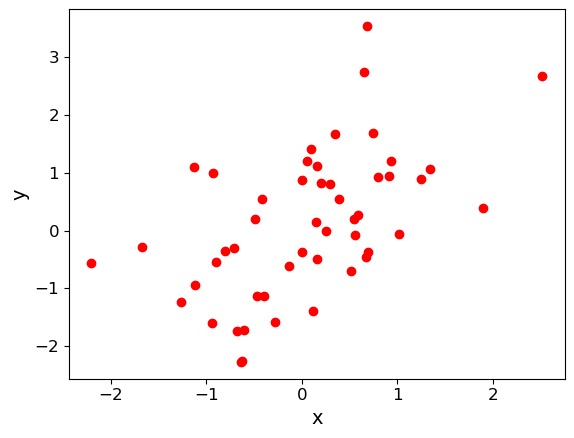

The covariance terms above disappear because for pairs of independent variables \(X_{i}\), \(X_{j}\) (where \(i \neq j\)), \(E[X_{i}X_{j}]=E[X_{i}]E[X_{j}]\). By generating many (\(N\)) pairs of independent random variables (from either the uniform or normal distribution), show that this equality is approached in the limit of large \(N\), by plotting a graph of the average of the absolute difference \(\lvert \langle X_{1}X_{2} \rangle - \langle X_{1} \rangle \langle X_{2} \rangle \rvert\) versus \(N\), where \(X_{1}\) and \(X_{2}\) are independent random numbers and angle-brackets denote sample means over the \(N\) pairs of random numbers. You will need to repeat the calculation many (e.g. at least 100) times to find the average for each \(N\), since there will be some random scatter in the absolute difference. To give a good range of \(N\), step through 10 to 20 values of \(N\) which are geometrically spaced from \(N=10\) to \(N=10^{5}\).

Hint

It will be much faster if you generate the values for \(X_{1}\) and \(X_{2}\) using numpy arrays of random variates, e.g.:

x1 = sps.uniform.rvs(loc=3,scale=5,size=(100,N))

will produce 100 sets of \(N\) uniformly distributed random numbers. The multiplied arrays can be averaged over the \(N\) values using the axis=1 argument with np.mean, before being averaged again over the 100 (or more) sets.

Solution

ntrials = np.geomspace(10,1e5,20,dtype=int)

# Set up the array to record the differences

diff = np.zeros(len(ntrials))

for i, N in enumerate(ntrials):

    # E.g. generate uniform variates drawn from U[3,8]

    x1 = sps.uniform.rvs(loc=3,scale=5,size=(100,N))

    x2 = sps.uniform.rvs(loc=3,scale=5,size=(100,N))

    diff[i] = np.mean(np.abs(np.mean(x1*x2,axis=1)-np.mean(x1,axis=1)*np.mean(x2,axis=1)))

plt.figure()

plt.scatter(ntrials,diff)

# We should use a log scale because of the wide range in values on both axes

plt.xscale('log')

plt.yscale('log')

plt.xlabel('$N$',fontsize=14)

# We add an extra pair of angle brackets outside, since we are averaging again over the 100 sets

plt.ylabel(r'$\langle |\langle X_{1}X_{2} \rangle - \langle X_{1} \rangle \langle X_{2} \rangle|\rangle$',fontsize=14)

plt.show()

Note that the size of the residual difference between the product of averages and average of products scales as \(1/\sqrt{N}\).

The standard error on the mean

We’re now in a position to estimate the uncertainty on the mean of our speed-of-light data, since the sample mean is effectively equal to a sum of scaled random variables (our \(n\) individual measurements \(x_{i}\)):

\[\bar{x}=\frac{1}{n}\sum\limits_{i=1}^{n} x_{i}\]

The scaling factor is \(1/n\) which is the same for all \(i\). Now, the expectation of our sample mean (i.e. over an infinite number of trials, that is repeats of the same collection of 100 measurements) is:

\[E[\bar{x}] = \sum\limits_{i=1}^{n} \frac{1}{n} E[x_{i}] = n\frac{1}{n}E[x_{i}] = \mu\]

Here we also implicitly assume that the measurements \(x_{i}\) are all drawn from the same (i.e. stationary) distribution, i.e. \(E[x_{i}] = \mu\) for all \(i\). If this is the case, then in the absence of any systematic error we expect that \(\mu=c_{\mathrm{air}}\), the true value for the speed of light in air.

However, we don’t measure \(\mu\), which corresponds to the population mean! We actually measure the scaled sum of measurements, i.e. the sample mean \(\bar{x}\), and this is distributed around the expectation value \(\mu\) with variance \(V[\bar{x}] = \sum\limits_{i=1}^{n} n^{-2} \sigma_{i}^{2} = \sigma^{2}/n\) (using the result for variance of a sum of random variables with scaling factor \(a_{i}=1/n\) assuming the measurements are all drawn from the same distribution, i.e. \(\sigma_{i}=\sigma\)). Therefore the expected standard deviation on the mean of \(n\) values, also known as the standard error, is:

\[\sigma_{\bar{x}} = \frac{\sigma}{\sqrt{n}}\]

where \(\sigma\) is the population standard deviation. If we guess that the sample standard deviation is the same as that of the population (and we have not yet shown whether this is a valid assumption!), we estimate a standard error of 7.9 km/s for the 100 measurements, i.e. the observed sample mean is \(\sim19\) times the standard error away from the true value!
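The standard error can be demonstrated with a simple simulation. The sketch below assumes normally distributed measurements with illustrative population parameters (chosen so that \(\sigma/\sqrt{n}=7.9\) for \(n=100\); they are not derived from the actual data), generates many independent samples of 100 measurements and compares the standard deviation of their sample means with \(\sigma/\sqrt{n}\):

```python
import numpy as np
import scipy.stats as sps

np.random.seed(2)  # arbitrary seed, fixed so the result is reproducible

# Illustrative population parameters (assumptions for this sketch only)
mu, sigma = 850.0, 79.0
n = 100          # number of measurements in each sample
ntrials = 20000  # number of repeated samples

# Each row is one sample of n measurements
samples = sps.norm.rvs(loc=mu, scale=sigma, size=(ntrials, n))

# Sample mean of each row, then the scatter of those means
sample_means = samples.mean(axis=1)
std_of_means = sample_means.std(ddof=1)

# Expected standard error from the population standard deviation
standard_error = sigma / np.sqrt(n)

print("Standard deviation of sample means:", std_of_means)
print("Expected standard error:", standard_error)
```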

We have already made some progress, but we still cannot formally answer our question about whether the difference of our sample mean from \(c_{\mathrm{air}}\) is real or due to statistical error. This is for two reasons:

- The standard error on the sample mean assumes that we know the population standard deviation. This is not the same as the sample standard deviation, although we might expect it to be similar.

- Even assuming that we know the standard deviation and mean of the population of sample means, we don’t know what the distribution of that population is yet, i.e. we don’t know the probability distribution of our sample mean.

We will address these remaining issues in the next episodes.

Estimators, bias and Bessel’s correction

An estimator is a method for calculating from data an estimate of a given quantity. The results of biased estimators may be systematically biased away from the true value they are trying to estimate, in which case corrections for the bias are required. The bias is equivalent to systematic error in a measurement, but is intrinsic to the estimator rather than the data itself. An estimator is biased if its expectation value (i.e. its arithmetic mean in the limit of an infinite number of experiments) is systematically different to the quantity it is trying to estimate.

The sample mean is an unbiased estimator of the population mean. This is because for measurements \(x_{i}\) which are random variates drawn from the same distribution, the population mean \(\mu = E[x_{i}]\), and the expectation value of the sample mean of \(n\) measurements is:

\[E\left[\frac{1}{n}\sum\limits_{i=1}^{n} x_{i}\right] = \frac{1}{n}\sum\limits_{i=1}^{n} E[x_{i}] = \frac{1}{n}n\mu = \mu\]

We can write the expectation of the summed squared deviations used to calculate sample variance, in terms of differences from the population mean \(\mu\), as follows:

\[E\left[ \sum\limits_{i=1}^{n} \left[(x_{i}-\mu)-(\bar{x}-\mu)\right]^{2} \right] = \left(\sum\limits_{i=1}^{n} E\left[(x_{i}-\mu)^{2} \right]\right) - nE\left[(\bar{x}-\mu)^{2} \right] = \left(\sum\limits_{i=1}^{n} V[x_{i}] \right) - n V[\bar{x}]\]

But we know that (assuming the data are drawn from the same distribution) \(V[x_{i}] = \sigma^{2}\) and \(V[\bar{x}] = \sigma^{2}/n\) (from the standard error) so it follows that the expectation of the average of squared deviations from the sample mean is smaller than the population variance by an amount \(\sigma^{2}/n\), i.e. it is biased:

\[E\left[\frac{1}{n} \sum\limits_{i=1}^{n} (x_{i}-\bar{x})^{2} \right] = \frac{n-1}{n} \sigma^{2}\]

and therefore for the sample variance to be an unbiased estimator of the underlying population variance, we need to correct our calculation by a factor \(n/(n-1)\), leading to Bessel’s correction to the sample variance:

\[\sigma^{2} = E\left[\frac{1}{n-1} \sum\limits_{i=1}^{n} (x_{i}-\bar{x})^{2} \right]\]

A simple way to think about the correction is that since the sample mean is used to calculate the sample variance, the contribution to population variance that leads to the standard error on the mean is removed (on average) from the sample variance, and needs to be added back in.
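The bias can be seen directly by simulation. The following sketch assumes normal variates with an arbitrary population standard deviation and a deliberately small sample size (where the bias is largest), and averages the uncorrected and Bessel-corrected sample variances over many trials. In numpy the correction is applied with the ddof=1 argument to np.var:

```python
import numpy as np
import scipy.stats as sps

np.random.seed(3)  # arbitrary seed, fixed so the result is reproducible

# Illustrative values (assumptions for this sketch only)
sigma = 2.0       # population standard deviation, so the variance is 4
n = 5             # small sample size, where the bias is most obvious
ntrials = 200000  # number of repeated samples

# Each row is one sample of n variates
samples = sps.norm.rvs(loc=0.0, scale=sigma, size=(ntrials, n))

# Average over trials of the variance calculated with a 1/n normalisation
biased = np.var(samples, axis=1, ddof=0).mean()
# Average over trials of the variance with Bessel's correction, 1/(n-1)
unbiased = np.var(samples, axis=1, ddof=1).mean()

print("Population variance:", sigma**2)
print("Mean of uncorrected sample variances:", biased)
print("Expected biased value (n-1)/n * sigma^2:", (n - 1) / n * sigma**2)
print("Mean of corrected sample variances:", unbiased)
```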

Key Points

Random variables are drawn from probability distributions. The expectation value (arithmetic mean for an infinite number of sampled variates) is equal to the mean of the distribution function (pdf).

The variance of a random variable is equal to the expectation of the squared variable minus the squared expectation of the variable.

Sums of scaled random variables have expectation values equal to the sum of scaled expectations of the individual variables and, if the variables are independent, variances equal to the sum of the individual variances multiplied by their squared scaling factors.

The means and variances of summed random variables lead to the calculation of the standard error (the standard deviation) of the mean.

scipy.stats distributions have methods to calculate the mean (.mean), variance (.var) and other properties of the distribution.

scipy.stats distributions have a method (.rvs) to generate arrays of random variates drawn from that distribution.

The Central Limit Theorem

Overview

Teaching: 30 min

Exercises: 0 min

Questions

What happens to the distributions of sums or means of random data?

Objectives

Discover the wide range of numerical methods that are available in Scipy sub-packages

See how some of the subpackages can be used for interpolation, integration, model fitting and Fourier analysis of time-series.

In this episode we will be using numpy, as well as matplotlib’s plotting library. Scipy contains an extensive range of distributions in its ‘scipy.stats’ module, so we will also need to import it. Remember: scipy modules should be imported separately as required - they cannot be called if only scipy is imported.

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as sps

So far we have learned about probability distributions and the idea that sample statistics such as the mean should be drawn from a distribution with a modified variance (with standard deviation given by the standard error), due to the summing of independent random variables. An important question is what happens to the distribution of summed variables?

The distributions of random numbers

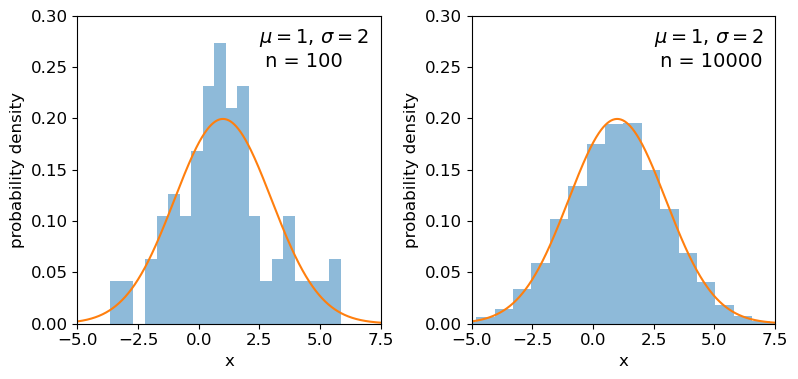

In the previous episode we saw how to use Python to generate random numbers and calculate statistics or do simple statistical experiments with them (e.g. looking at the covariance as a function of sample size). We can also generate a larger number of random variates and compare the resulting sample distribution with the pdf of the distribution which generated them. We show this for the uniform and normal distributions below:

mu = 1

sigma = 2

## freeze the distribution for the given mean and standard deviation

nd = sps.norm(mu, sigma)

## Generate a large and a small sample

sizes=[100,10000]

x = np.arange(-5.0, 8.0, 0.01)

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

fig.subplots_adjust(wspace=0.3)

for i, ax in enumerate([ax1,ax2]):

    nd_rand = nd.rvs(size=sizes[i])

    # Make the histogram semi-transparent

    ax.hist(nd_rand, bins=20, density=True, alpha=0.5)

    ax.plot(x,nd.pdf(x))

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

    ax.set_ylabel("probability density", fontsize=12)

    ax.set_xlim(-5,7.5)

    ax.set_ylim(0,0.3)

    # Raw strings are used for the LaTeX parts so that the backslashes are not treated as escapes

    ax.text(2.5,0.25,

            r"$\mu=$"+str(mu)+r", $\sigma=$"+str(sigma)+"\n n = "+str(sizes[i]),fontsize=14)

plt.show()

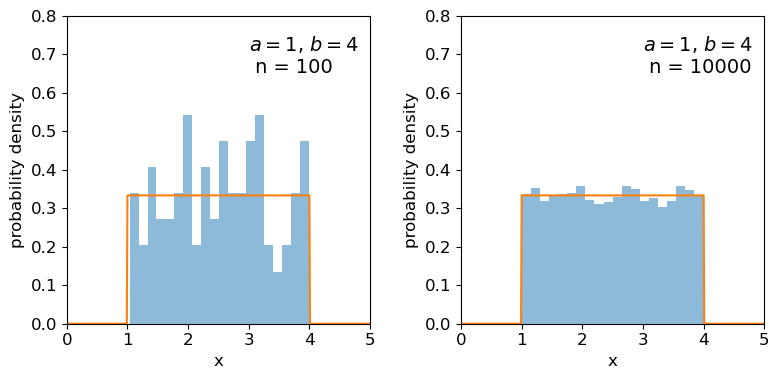

## Repeat for the uniform distribution

a = 1

b = 4

## freeze the distribution for given a and b

ud = sps.uniform(loc=a, scale=b-a)

sizes=[100,10000]

x = np.arange(0.0, 5.0, 0.01)

fig, (ax1, ax2) = plt.subplots(1,2, figsize=(9,4))

fig.subplots_adjust(wspace=0.3)

for i, ax in enumerate([ax1,ax2]):

    ud_rand = ud.rvs(size=sizes[i])

    ax.hist(ud_rand, bins=20, density=True, alpha=0.5)

    ax.plot(x,ud.pdf(x))

    ax.tick_params(labelsize=12)

    ax.set_xlabel("x", fontsize=12)

    ax.tick_params(axis='x', labelsize=12)

    ax.tick_params(axis='y', labelsize=12)

    ax.set_ylabel("probability density", fontsize=12)

    ax.set_xlim(0,5)

    ax.set_ylim(0,0.8)

    ax.text(3.0,0.65,

            "$a=$"+str(a)+", $b=$"+str(b)+"\n n = "+str(sizes[i]),fontsize=14)

plt.show()

Clearly the sample distributions for 100 random variates are much more scattered compared to the 10000 random variates case (and the ‘true’ distribution).

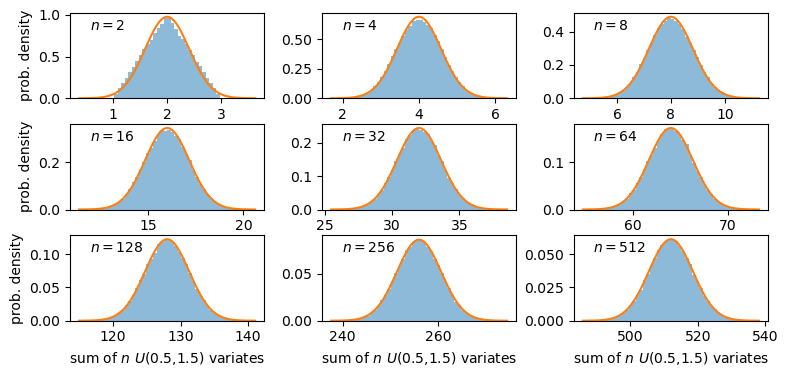
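To put a rough number on how much more scattered the smaller sample is, we can compare each histogram with the pdf bin by bin. The following is a minimal sketch rather than part of the original example: the fixed random seed, the restriction of the bins to within 2 standard deviations of the mean (to avoid nearly empty bins) and the name `rms_scatter` are all assumptions made for illustration.

```python
import numpy as np
import scipy.stats as sps

# Same normal distribution as in the example above (mu = 1, sigma = 2)
mu, sigma = 1, 2
nd = sps.norm(mu, sigma)
rng = np.random.default_rng(42)  # arbitrary fixed seed, for reproducibility

# 20 bins restricted to within 2 sigma of the mean (an illustrative choice)
edges = np.linspace(mu - 2*sigma, mu + 2*sigma, 21)
centres = (edges[1:] + edges[:-1])/2

rms_scatter = {}
for size in [100, 10000]:
    sample = nd.rvs(size=size, random_state=rng)
    counts, _ = np.histogram(sample, bins=edges)
    # Convert counts to probability density using the full sample size
    dens = counts/(size*np.diff(edges))
    # Fractional deviation of the histogram from the pdf at each bin centre
    frac_dev = (dens - nd.pdf(centres))/nd.pdf(centres)
    rms_scatter[size] = np.sqrt(np.mean(frac_dev**2))
    print("n =", size, ": rms fractional deviation =", rms_scatter[size])
```

Since the number of counts in each bin is itself a random quantity, we would expect the fractional scatter to shrink roughly as one over the square root of the typical number of counts per bin.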

The distributions of sums of uniform random numbers

Now we will go a step further and run a similar experiment but plot the histograms of sums of random numbers instead of the random numbers themselves. We will start with sums of uniform random numbers which are all drawn from the same, uniform distribution. To plot the histogram we need to generate a large number (ntrials) of samples of size given by nsamp, and step through a range of nsamp to make a histogram of the distribution of summed sample variates. Since we know the mean and variance of the distribution our variates are drawn from, we can calculate the expected variance and mean of our sum using the approach for sums of random variables described in the previous episode.

# Set ntrials to be large to keep the histogram from being noisy

ntrials = 100000

# Set the list of sample sizes for the sets of variates generated and summed

nsamp = [2,4,8,16,32,64,128,256,512]

# Set the parameters for the uniform distribution and freeze it

a = 0.5

b = 1.5

ud = sps.uniform(loc=a,scale=b-a)

# Calculate variance and mean of our uniform distribution

ud_var = ud.var()

ud_mean = ud.mean()

# Now set up and plot our figure, looping through each nsamp to produce a grid of subplots

n = 0 # Keeps track of where we are in our list of nsamp

fig, ax = plt.subplots(3,3, figsize=(9,4))

fig.subplots_adjust(wspace=0.3,hspace=0.3) # Include some spacing between subplots

# Subplots ax have indices i,j to specify location on the grid

for i in range(3):

    for j in range(3):

        # Generate an array of ntrials samples with size nsamp[n]

        ud_rand = ud.rvs(size=(ntrials,nsamp[n]))

        # Calculate expected mean and variance for our sum of variates

        exp_var = nsamp[n]*ud_var

        exp_mean = nsamp[n]*ud_mean

        # Define a plot range to cover adequately the range of values around the mean

        plot_range = (exp_mean-4*np.sqrt(exp_var),exp_mean+4*np.sqrt(exp_var))

        # Define xvalues to calculate normal pdf over

        xvals = np.linspace(plot_range[0],plot_range[1],200)

        # Calculate histogram of our sums

        ax[i,j].hist(np.sum(ud_rand,axis=1), bins=50, range=plot_range,

                     density=True, alpha=0.5)

        # Also plot the normal distribution pdf for the calculated sum mean and variance

        ax[i,j].plot(xvals,sps.norm.pdf(xvals,loc=exp_mean,scale=np.sqrt(exp_var)))

        # The 'transform' argument allows us to locate the text in relative plot coordinates

        ax[i,j].text(0.1,0.8,"$n=$"+str(nsamp[n]),transform=ax[i,j].transAxes)

        n = n + 1

        # Only include axis labels at the left and lower edges of the grid:

        if j == 0:

            ax[i,j].set_ylabel('prob. density')

        if i == 2:

            ax[i,j].set_xlabel("sum of $n$ $U($"+str(a)+","+str(b)+"$)$ variates")

plt.show()

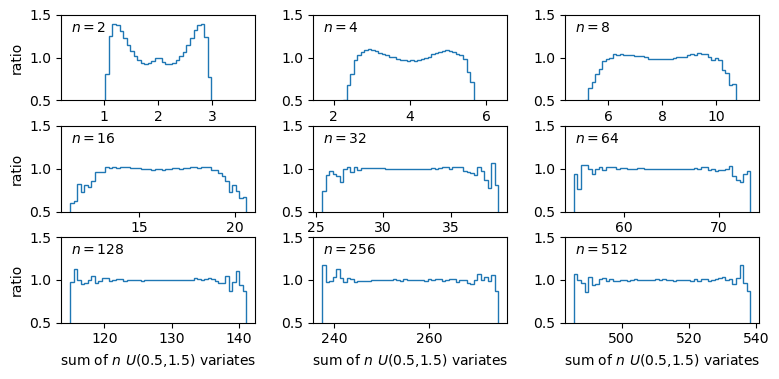

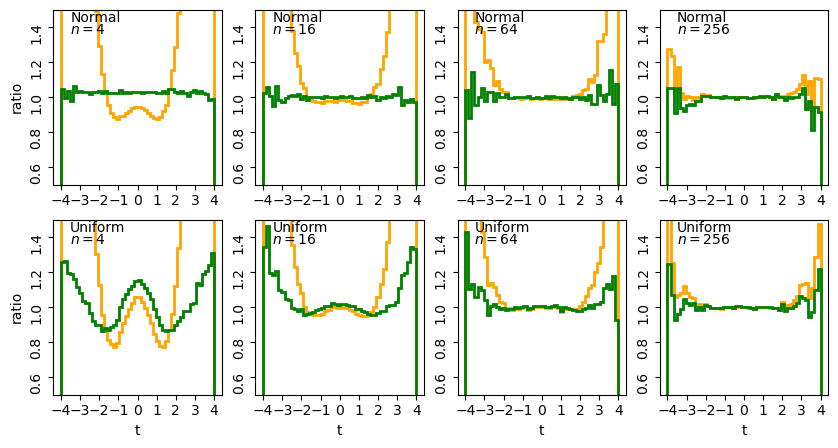

A sum of two uniform variates follows a triangular probability distribution, but as we add more variates we see that the distribution starts to approach the shape of the normal distribution for the same (calculated) mean and variance! Let’s show this explicitly by calculating the ratio of the ‘observed’ histograms for our sums to the values from the corresponding normal distribution.
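The triangular shape for \(n=2\) can be checked directly. The sketch below is an illustration rather than part of the original lesson: it assumes that the sum of two \(U(a,b)\) variates is triangular on \([2a,2b]\) with its peak at the midpoint, which we express with scipy's `triang` distribution, and it uses an arbitrary fixed seed.

```python
import numpy as np
import scipy.stats as sps

a, b = 0.5, 1.5
ud = sps.uniform(loc=a, scale=b-a)
rng = np.random.default_rng(1)  # arbitrary fixed seed, for reproducibility

# Generate 100000 pairs of uniform variates and sum each pair
sums = np.sum(ud.rvs(size=(100000, 2), random_state=rng), axis=1)

# Triangular distribution covering [2a, 2b], with the peak halfway along (c=0.5)
td = sps.triang(c=0.5, loc=2*a, scale=2*(b-a))

# Histogram of the sums as a probability density
dens, edges = np.histogram(sums, bins=50, range=(2*a, 2*b), density=True)
centres = (edges[1:] + edges[:-1])/2

# Compare with the triangular pdf evaluated at the bin centres
max_diff = np.max(np.abs(dens - td.pdf(centres)))
print("Peak of triangular pdf:", td.pdf(a+b))
print("Largest difference between histogram and triangular pdf:", max_diff)
```

The largest difference should be small compared with the peak probability density, and consistent with the statistical scatter in the histogram.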

To do this correctly we should calculate the average probability density of the normal pdf in bins which are the same as in the histogram. We can calculate this by integrating the pdf over each bin, using the difference in cdfs at the upper and lower bin edge (which corresponds to the integrated probability in the normal pdf over the bin). Then if we normalise by the bin width, we get the probability density expected from a normal distribution with the same mean and variance as the expected values for our sums of variates.
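As a small illustration of this bin-averaging step on its own, the sketch below compares the bin-averaged probability density with the pdf evaluated at the bin centres, for a standard normal distribution. The deliberately wide bins and the variable names are assumptions chosen to make the difference easy to see.

```python
import numpy as np
import scipy.stats as sps

nd = sps.norm(loc=0, scale=1)

# Deliberately wide bins (1 standard deviation each) to make the effect visible
edges = np.linspace(-4, 4, 9)
centres = (edges[1:] + edges[:-1])/2

# Integrated probability in each bin from the cdf differences, divided by bin width
binned_pdf = (nd.cdf(edges[1:]) - nd.cdf(edges[:-1]))/np.diff(edges)

for c, avg, mid in zip(centres, binned_pdf, nd.pdf(centres)):
    print("bin centre", c, ": bin-averaged pdf =", avg, ", pdf at centre =", mid)

# Multiplying back by the bin widths recovers the total probability in the range
total_prob = np.sum(binned_pdf*np.diff(edges))
print("Total probability in binned range:", total_prob)
```

For narrow bins the two approaches give almost the same values, but using the cdf differences guarantees that the binned probabilities add up correctly whatever the bin width.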

# For better precision we will make ntrials 10 times larger than before, but you

# can reduce this if it takes longer than a minute or two to run.

ntrials = 1000000

nsamp = [2,4,8,16,32,64,128,256,512]

a = 0.5

b = 1.5

ud = sps.uniform(loc=a,scale=b-a)

ud_var = ud.var()

ud_mean = ud.mean()

n = 0

fig, ax = plt.subplots(3,3, figsize=(9,4))

fig.subplots_adjust(wspace=0.3,hspace=0.3)

for i in range(3):

    for j in range(3):

        ud_rand = ud.rvs(size=(ntrials,nsamp[n]))

        exp_var = nsamp[n]*ud_var

        exp_mean = nsamp[n]*ud_mean

        nd = sps.norm(loc=exp_mean,scale=np.sqrt(exp_var))

        plot_range = (exp_mean-4*np.sqrt(exp_var),exp_mean+4*np.sqrt(exp_var))

        # Since we no longer want to plot the histogram itself, we will use the numpy function instead

        dens, edges = np.histogram(np.sum(ud_rand,axis=1), bins=50, range=plot_range,

                                   density=True)

        # To get the pdf in the same bins as the histogram, we calculate the differences in cdfs at the bin

        # edges and normalise them by the bin widths.

        norm_pdf = (nd.cdf(edges[1:])-nd.cdf(edges[:-1]))/np.diff(edges)

        # We can now plot the ratio as a pre-calculated histogram using the trick we learned in Episode 1

        ax[i,j].hist((edges[1:]+edges[:-1])/2,bins=edges,weights=dens/norm_pdf,density=False,

                     histtype='step')

        ax[i,j].text(0.05,0.8,"$n=$"+str(nsamp[n]),transform=ax[i,j].transAxes)

        n = n + 1

        ax[i,j].set_ylim(0.5,1.5)

        if j == 0:

            ax[i,j].set_ylabel('ratio')

        if i == 2:

            ax[i,j].set_xlabel("sum of $n$ $U($"+str(a)+","+str(b)+"$)$ variates")

plt.show()

The plots show the ratio between the distributions of our sums of \(n\) uniform variates, and the normal distribution with the same mean and variance expected from the distribution of summed variates. There is still some scatter at the edges of the distributions, where there are only relatively few counts in the histograms of sums, but the ratio plots still demonstrate a couple of important points:

- As the number of summed uniform variates increases, the distribution of the sums gets closer to a normal distribution (with mean and variance the same as the values expected for the summed variable).

- The distribution of summed variates is closer to normal in the centre, and deviates more strongly in the ‘wings’ of the distribution.
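The two points above can also be checked without plotting, by counting how often the standardised sums fall in the centre (within 1 standard deviation of the mean) or in the wings (more than 3 standard deviations away), and comparing with the normal expectation. This is a rough sketch: the choice of 1 and 3 standard deviations, the two values of \(n\), the seed and the name `results` are illustrative assumptions.

```python
import numpy as np
import scipy.stats as sps

ud = sps.uniform(loc=0.5, scale=1.0)
rng = np.random.default_rng(2)  # arbitrary fixed seed, for reproducibility
ntrials = 100000

# Probabilities expected for a normal distribution
norm_centre = 1 - 2*sps.norm.sf(1)   # within 1 sigma of the mean
norm_wings = 2*sps.norm.sf(3)        # more than 3 sigma from the mean

results = {}
for n in [2, 32]:
    sums = np.sum(ud.rvs(size=(ntrials, n), random_state=rng), axis=1)
    # Standardise the sums using the expected mean and variance of the sum
    z = (sums - n*ud.mean())/np.sqrt(n*ud.var())
    centre = np.mean(np.abs(z) < 1)
    wings = np.mean(np.abs(z) > 3)
    # Store the ratios of observed to normal-expected fractions
    results[n] = (centre/norm_centre, wings/norm_wings)
    print("n =", n, ": centre ratio =", results[n][0],
          ", wing ratio =", results[n][1])
```

For \(n=2\) the sum is bounded and cannot lie more than about 2.45 standard deviations from its mean, so the wing ratio is exactly zero even though the centre ratio is within a few per cent of 1.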

The Central Limit Theorem

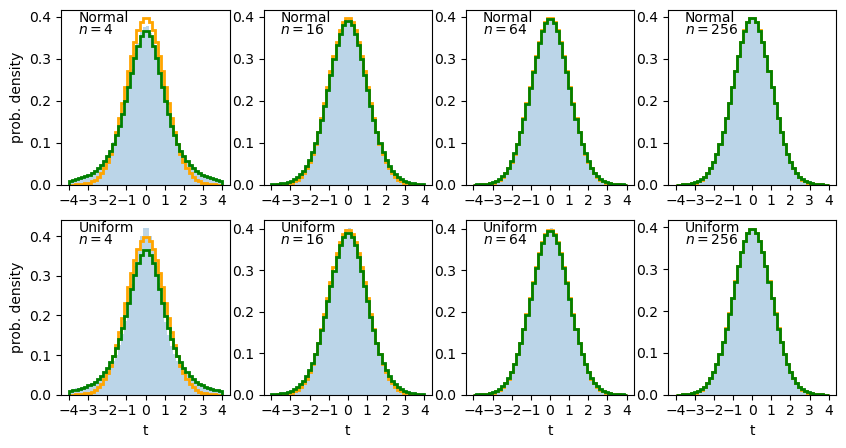

The Central Limit Theorem (CLT) states that under certain general conditions (e.g. distributions with finite mean and variance), a sum of \(n\) random variates drawn from distributions with mean \(\mu_{i}\) and variance \(\sigma_{i}^{2}\) will tend towards being normally distributed for large \(n\), with the distribution having mean \(\mu = \sum\limits_{i=1}^{n} \mu_{i}\) and variance \(\sigma^{2} = \sum\limits_{i=1}^{n} \sigma_{i}^{2}\).

It is important to note that the limit is approached asymptotically with increasing \(n\), and the rate at which it is approached depends on the shape of the distribution(s) of variates being summed, with more asymmetric distributions requiring larger \(n\) to approach the normal distribution to a given accuracy. The CLT also applies to mixtures of variates drawn from different types of distribution or variates drawn from the same type of distribution but with different parameters. Note also that sums of independent normally distributed variables are always distributed normally, whatever the combination of normal distribution parameters.
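The dependence on asymmetry can be illustrated without simulation using gamma distributions, since a sum of \(n\) independent gamma variates with the same shape parameter \(k\) and unit scale is itself gamma distributed, with shape \(nk\). The sketch below is an illustration under that assumption and is not taken from the lesson; the helper function `max_cdf_difference` is hypothetical. It compares a strongly asymmetric case (\(k=1\), the exponential distribution) with a more symmetric one (\(k=16\)).

```python
import numpy as np
import scipy.stats as sps

def max_cdf_difference(shape, n):
    """Largest difference between the cdf of a sum of n gamma variates
    (each with the given shape parameter and unit scale) and the cdf of
    the normal distribution with the same mean and variance."""
    # The sum is gamma distributed with shape n*shape, for which the
    # mean and the variance are both equal to n*shape
    sum_dist = sps.gamma(a=n*shape)
    nd = sps.norm(loc=n*shape, scale=np.sqrt(n*shape))
    # Evaluate both cdfs over a grid covering almost all of the normal distribution
    x = np.linspace(nd.ppf(1e-4), nd.ppf(1-1e-4), 2001)
    return np.max(np.abs(sum_dist.cdf(x) - nd.cdf(x)))

for n in [2, 8, 32, 128]:
    print("n =", n,
          ": exponential (k=1):", max_cdf_difference(1, n),
          ", k=16:", max_cdf_difference(16, n))
```

For every \(n\) the more asymmetric exponential case lies further from the normal distribution, and in both cases the difference shrinks as \(n\) increases.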

The Central Limit Theorem tells us that when we calculate a sum (or equivalently a mean) of a sample of \(n\) randomly distributed measurements, for increasing \(n\) the resulting quantity will tend towards being normally distributed around the true mean of the measured quantity (assuming no systematic error), with standard deviation equal to the standard error.
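As a quick numerical check of the last statement, we can generate many samples of size \(n\), calculate the mean of each, and compare the standard deviation of those means with the standard error \(\sigma/\sqrt{n}\). This is a minimal sketch: the uniform distribution, the number of trials, the seed and the name `results` are assumptions chosen for illustration.

```python
import numpy as np
import scipy.stats as sps

ud = sps.uniform(loc=0.5, scale=1.0)
rng = np.random.default_rng(3)  # arbitrary fixed seed, for reproducibility
ntrials = 20000

results = {}
for n in [4, 16, 64]:
    # Each row is one sample of size n; take the mean of each sample
    means = np.mean(ud.rvs(size=(ntrials, n), random_state=rng), axis=1)
    # Standard error expected from the population standard deviation
    std_err = ud.std()/np.sqrt(n)
    results[n] = (np.std(means, ddof=1), std_err)
    print("n =", n, ": std. dev. of sample means =", results[n][0],
          ", standard error =", results[n][1])
```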

Key Points

Sums of samples of random variates from non-normal distributions with finite mean and variance become asymptotically normally distributed as their sample size increases.

The theorem holds for sums of differently distributed variates, but the speed at which a normal distribution is approached depends on the shape of the variate’s distribution, with symmetric distributions approaching the normal limit faster than asymmetric distributions.

Means of large numbers (e.g. 100 or more) of non-normally distributed measurements are distributed close to normal, with distribution mean equal to the population mean that the measurements are drawn from and standard deviation given by the standard error on the mean.

Distributions of means (or other types of sum) of non-normal random data are closer to normal in their centres than in the tails of the distribution, so the normal assumption is most reliable for smaller deviations of sample mean from the population mean.

Significance tests: the z-test - comparing with a population of known mean and variance

Overview

Teaching: 30 min

Exercises: 10 min

Questions

How do I compare a sample mean with an expected value, when I know the true variance that the data are sampled from?

Objectives

Learn the general approach to significance testing, including how to formulate a null hypothesis and calculate a p-value.

See how you can formulate statements about the significance of a test.

Learn how to compare a normally distributed sample mean with an expected value for a given hypothesis, assuming that you know the variance of the distribution the data are sampled from.

In this episode we will be using numpy, as well as matplotlib’s plotting library. Scipy contains an extensive range of distributions in its ‘scipy.stats’ module, so we will also need to import it. Remember: scipy modules should be imported separately as required, since they may not be available if only scipy itself is imported.

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as sps

Significance testing

We want to test the idea that our data are drawn from a distribution with a given mean value. For the Michelson case, this is the speed of light in air, i.e. we are testing the null hypothesis that the difference between our data distribution’s mean and the speed of light in air is consistent with being zero. To do this, we need to carry out a significance test, which is a type of hypothesis test where we compare a test statistic calculated from the data with the distribution expected for it if the null hypothesis is true.

The procedure for significance testing is:

- Choose a test statistic appropriate to test the null hypothesis.

- Calculate the test statistic for your data.

- Compare the test statistic with the distribution expected for it if your null hypothesis is true: the percentile of the test statistic in that distribution (together with whether or not the test is two-tailed) gives the \(p\)-value, which is the probability that you would obtain a test statistic at least as extreme as the one observed if your null hypothesis is true.

The \(p\)-value is an estimate of the statistical significance of your hypothesis test. It represents the probability, assuming the null hypothesis is true, that the test statistic is equal to or more extreme than the one observed. Formally, the procedure is to pre-specify (before doing the test, or ideally before even looking at the data!) a required significance level \(\alpha\), below which one would reject the null hypothesis, but one can also conduct exploratory data analysis (e.g. when trying to formulate more detailed hypotheses for testing with additional data) where a \(p\)-value is simply quoted as it is and possibly used to define a set of conclusions.
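The procedure can be sketched in code for the situation this episode deals with: a sample mean compared with an expected value when the variance of the underlying distribution is known. Everything specific here is hypothetical and chosen only for illustration: the null-hypothesis mean `mu0`, the known standard deviation `sigma`, the significance level `alpha` and the simulated data, which stand in for real measurements. The sketch assumes the sample mean is normally distributed and that deviations in either direction count as extreme (a two-tailed test).

```python
import numpy as np
import scipy.stats as sps

# Hypothetical set-up: under the null hypothesis the data are drawn from a
# distribution with mean mu0 and known standard deviation sigma
mu0, sigma = 10.0, 2.0
alpha = 0.05   # required significance level, chosen before looking at the data

# Simulated measurements standing in for real data (not from the lesson)
rng = np.random.default_rng(4)
data = rng.normal(loc=10.5, scale=sigma, size=25)

# Steps 1 and 2: choose and calculate the test statistic. Here we use the
# deviation of the sample mean from mu0 in units of the standard error
z = (np.mean(data) - mu0)/(sigma/np.sqrt(len(data)))

# Step 3: if the null hypothesis is true, z follows a standard normal
# distribution. The two-tailed p-value is the probability of a deviation
# at least as large as |z| in either direction
p_value = 2*sps.norm.sf(np.abs(z))

print("z =", z, ", p-value =", p_value)
if p_value < alpha:
    print("The null hypothesis is rejected at significance level", alpha)
else:
    print("The null hypothesis is not rejected at significance level", alpha)
```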

The null hypothesis itself should be framed according to the scientific question you are trying to answer. We cannot write a simple prescription for this as the range of questions and ways to address them is too vast, but we give some starting points further below and you will see many examples for inspiration in the remainder of this course.

Significance testing: two-tailed case

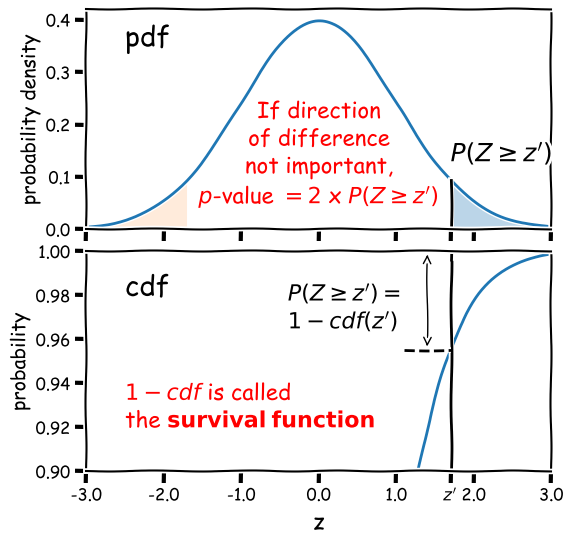

Often the hypothesis we are testing predicts a test statistic with a (usually symmetric) two-tailed distribution, because only the magnitude of the deviation of the test statistic from the expected value matters, not the direction of that deviation. A good example is for deviations from the expected mean due to statistical error: if the null hypothesis is true we don’t expect a preferred direction to the deviations and only the size of the deviation matters.

Calculation of the 2-tailed \(p\)-value is demonstrated in the figure above. For a positive observed test statistic \(Z=z^{\prime}\), the \(p\)-value is twice the integrated probability density for \(z\geq z^{\prime}\), i.e. \(2\times(1-cdf(z^{\prime}))\).

Note that the function 1-cdf is called the survival function and it is available as a separate method for the statistical distributions in scipy.stats, which can be more accurate than calculating 1-cdf explicitly for very small \(p\)-values.

For example, for the graphical example above, where the distribution is a standard normal:

print("p-value = ",2*sps.norm.sf(1.7))

p-value =  0.08913092551708608

In this example the significance is low and the null hypothesis is not ruled out at better than 95% confidence.
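The gain in accuracy from using the survival function shows up for large values of the test statistic, where the cdf is so close to 1 that subtracting it from 1 loses all precision in floating-point arithmetic. The following short sketch compares the two calculations for a standard normal distribution; the values of \(z\) are arbitrary illustrative choices.

```python
import scipy.stats as sps

# Compare the explicit 1-cdf calculation with the survival function
for z in [2, 5, 10]:
    print("z =", z, ": 1-cdf =", 1 - sps.norm.cdf(z), ", sf =", sps.norm.sf(z))

# For z = 10 the cdf rounds to exactly 1 in double precision
one_minus_cdf = 1 - sps.norm.cdf(10)
sf = sps.norm.sf(10)
```

For \(z=10\) the explicit calculation returns exactly zero, while the survival function still returns a tiny but non-zero probability.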

Significance levels and reporting

How should we choose our significance level, \(\alpha\)? It depends on how important the answer to the scientific question you are asking is!